Recently, competition among chatbot models has reignited. Bard and Gemini each sparked anticipation on release as potential GPT-4 challengers, but neither quite surpassed it. Now, however, many claim that Anthropic's newly released Claude 3 family gives responses far superior to GPT-4's, fueling speculation that OpenAI might soon release a GPT-4.5.

While the future remains uncertain, it’s clear that LLMs are continually evolving. Researchers are now seeking new architectures beyond the Transformer-based language model structure and suggesting novel learning methodologies.

One such recent study is "Social Learning: Towards Collaborative Learning with Large Language Models," which describes a framework in which AI models teach other AI models. Let's look at how this collaboration works.

Social Learning: AI Teaching AI

This research idea stems from the psychological concept of “Social Learning” by renowned scholar Albert Bandura. Social learning refers to the acquisition of new behaviors or knowledge by observing and imitating others. It’s a natural process for humans, who often learn from media without direct experience. Children, for example, often learn by observing their parents or teachers, which is why adults should be mindful of their behavior around children. Google researchers aimed to apply this social learning theory to LLM learning, enabling LLMs to transfer knowledge to other models.

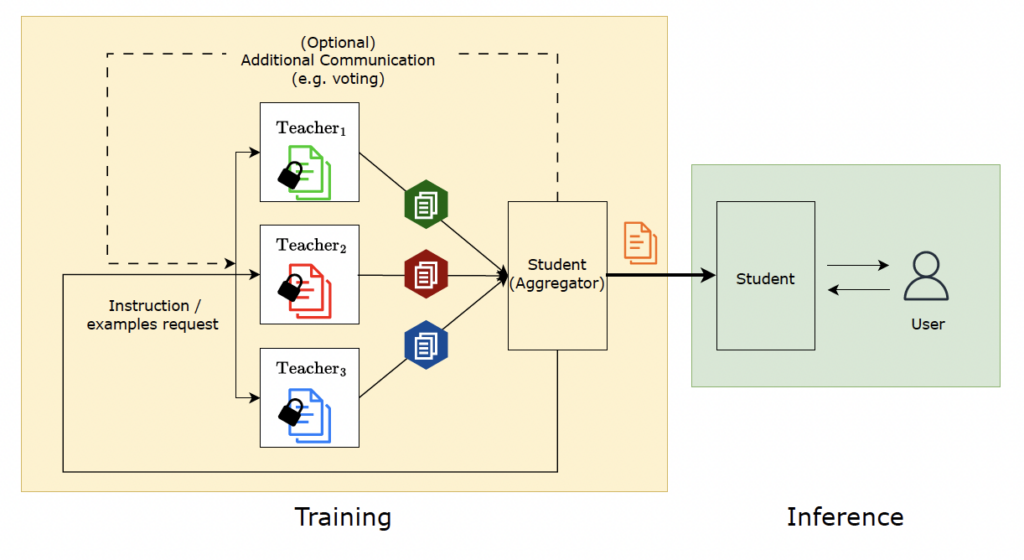

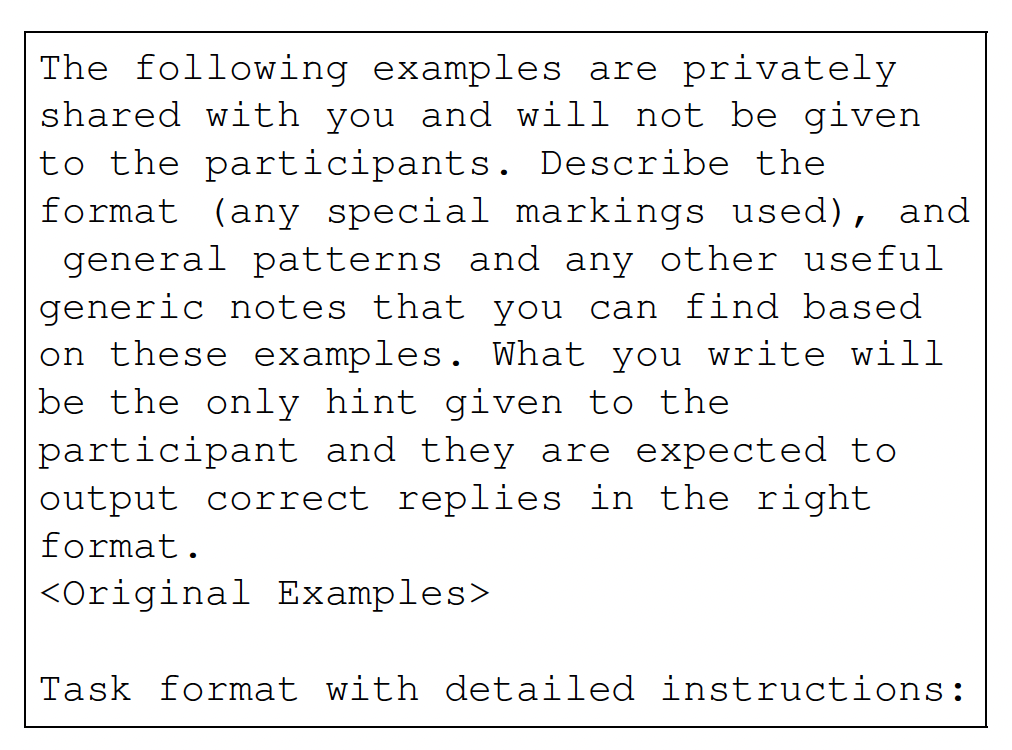

Understanding social learning theory makes it easier to grasp how the Social Learning model is structured. The researchers assigned AI agents the roles of 'Teacher' and 'Student.' The teacher agent learns the content and then passes it on to the student agent. But if the goal is to build a smart language model, why involve a student agent at all when the teacher agent already has the knowledge?

Addressing Privacy Issues

One major issue with LLMs is the potential for personal information leaks. If an LLM is trained on private data, it risks exposing that sensitive information, as has happened in the past. Removing personal data entirely would prevent this, but a model trained without it might struggle with real-world problems that involve personal details.

For instance, imagine receiving an SMS containing your name and birthdate along with a suspicious link. You want an AI's help to decide whether it's spam, but you're wary of sharing the personal information involved. And if the AI saw no similar messages during training, it may struggle to judge accurately.

Social Learning: Towards Collaborative Learning with Large Language Models (Mohtashami et al. 2024)

This is where the Social Learning model can be useful. Teacher agents that have learned from personal data do not pass that data on; instead, they generate fictional examples in its place. The Student Aggregator collects these examples, selects the good ones, and supplies them as prompts to the student agent during the inference stage. This process allows the AI to respond appropriately to user requests without risking personal information leaks.
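To make the data flow more concrete, here is a toy Python sketch. It is an illustration under loose assumptions, not the paper's implementation: in the paper the teachers are LLMs that write synthetic examples, whereas here a hypothetical `Teacher` class simply swaps names and dates for fictional placeholders. `aggregate` (a stand-in for the Student Aggregator that drops duplicates and keeps a few examples per label) and `build_student_prompt` are likewise made-up names. The property it shows is that only fictionalized text ever reaches the student's few-shot prompt.

```python
import re
from dataclasses import dataclass

# Hypothetical fictional values a teacher substitutes for real personal details.
FAKE_NAME = "Alex Doe"
FAKE_DATE = "01/01/1990"


@dataclass
class Example:
    text: str
    label: str  # "spam" or "ham"


class Teacher:
    """Holds private examples and only ever shares fictionalized copies."""

    def __init__(self, private_examples: list[Example], known_names: list[str]):
        self._private = private_examples  # never leaves this object
        self._names = known_names

    def synthesize(self) -> list[Example]:
        # Stand-in for LLM-based synthetic data generation: replace names and
        # DD/MM/YYYY dates with fictional placeholders, keep the label.
        out = []
        for ex in self._private:
            text = ex.text
            for name in self._names:
                text = text.replace(name, FAKE_NAME)
            text = re.sub(r"\b\d{2}/\d{2}/\d{4}\b", FAKE_DATE, text)
            out.append(Example(text, ex.label))
        return out


def aggregate(pool: list[Example], k_per_label: int = 2) -> list[Example]:
    """Toy aggregator: drop duplicate texts, keep at most k examples per label."""
    seen, chosen, counts = set(), [], {}
    for ex in pool:
        if ex.text in seen or counts.get(ex.label, 0) >= k_per_label:
            continue
        seen.add(ex.text)
        counts[ex.label] = counts.get(ex.label, 0) + 1
        chosen.append(ex)
    return chosen


def build_student_prompt(examples: list[Example], query: str) -> str:
    """Few-shot prompt the student model would receive at inference time."""
    shots = "\n".join(f"Message: {ex.text}\nLabel: {ex.label}" for ex in examples)
    return f"Classify each SMS as spam or ham.\n\n{shots}\nMessage: {query}\nLabel:"


teachers = [
    Teacher([Example("Jane, born 12/03/1985, claim your prize: bit.ly/x", "spam"),
             Example("Jane, dinner at 7?", "ham")], known_names=["Jane"]),
    Teacher([Example("Omar, verify your account (DOB 05/11/1979) now", "spam"),
             Example("Omar, meeting moved to 3pm", "ham")], known_names=["Omar"]),
]
pool = [ex for t in teachers for ex in t.synthesize()]
prompt = build_student_prompt(aggregate(pool), "Congrats! You won, click here")
print(prompt)
```

Because the student only sees these examples in its prompt, its weights never change, and a different student model could be swapped in without touching the teachers.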

The Utility of Social Learning

This learning method is closely related to the increasingly popular Federated Learning. In Federated Learning, a model is trained on decentralized data: the data stays distributed across users' devices, while a central server coordinates training and collects only the updates it needs to improve the shared model.
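This coordination pattern is commonly implemented with Federated Averaging (FedAvg). The sketch below applies it to a toy one-variable linear regression in plain Python; the article does not say which Federated Learning algorithm it has in mind, and the client data, learning rate, and round counts are illustrative assumptions. Note what crosses the network: model parameters, never the clients' raw data.

```python
import random


def local_update(w, b, data, lr=0.05, epochs=20):
    """One client's local training: gradient descent on y ~ w*x + b (MSE loss)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fed_avg(clients, rounds=30):
    """Server loop: broadcast the global model, collect locally trained
    parameters, and average them weighted by each client's dataset size."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        updates = [(local_update(w, b, d), len(d)) for d in clients]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b


# Each client's private data stays in its own list; only (w, b) reach the server.
rng = random.Random(42)
clients = []
for n in (30, 50, 20):
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    clients.append([(x, 3.0 * x + 1.0 + rng.gauss(0, 0.1)) for x in xs])

w, b = fed_avg(clients)
print(f"learned w={w:.2f}, b={b:.2f}")  # should approach the true w=3, b=1
```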

Social Learning shares these characteristics: the original data is never sent to a central server but stays on individual devices. Moreover, because the transferred knowledge is used only at inference time, without updating the student model's weights, the approach is considered safer. The researchers highlight three advantages of this method:

Advantages of Social Learning over Federated Learning:

- The approach is model-agnostic: every component can be implemented independently of the specific model, so the Teacher and Student agents can be entirely different models.

- Text is far more compact than the gradient updates that Federated Learning exchanges, which lowers costs and makes it easier to transmit over the network.

- Text is much more interpretable than gradients, so it is clear exactly what the teacher is teaching the student.
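A back-of-the-envelope comparison helps illustrate the second point. The payloads are assumptions chosen for illustration (one dense float32 gradient update for a 1-billion-parameter model versus eight short synthetic SMS examples); real Federated Learning systems often compress or sparsify their updates, so the practical gap is smaller than this upper-bound illustration suggests.

```python
def gradient_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Size of one dense gradient update (float32 = 4 bytes per parameter)."""
    return num_params * bytes_per_param


def text_bytes(messages: list[str]) -> int:
    """Size of natural-language messages encoded as UTF-8."""
    return sum(len(m.encode("utf-8")) for m in messages)


# Hypothetical payloads: one update for a 1B-parameter model vs. 8 short examples.
grad = gradient_bytes(1_000_000_000)
text = text_bytes(["Congratulations! You have won a prize, click the link to claim it."] * 8)

print(f"gradient update: {grad / 1e9:.1f} GB")  # 4.0 GB
print(f"text messages:   {text} bytes")
print(f"ratio: roughly {grad // text:,}x")
```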

To assess how well Social Learning protects data, the researchers measured how similar the teacher-generated data was to the actual personal data and found a leakage rate below 0.1% of the total dataset size.
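The article does not specify the similarity measure, so the following is only a hypothetical sketch of how such a leakage rate could be computed. It flags a generated example as leaked if its `difflib.SequenceMatcher` similarity to any private example reaches an assumed threshold of 0.9, then reports the flagged fraction; both the metric and the threshold are illustrative choices, not the paper's.

```python
from difflib import SequenceMatcher


def leakage_rate(generated: list[str], private: list[str], threshold: float = 0.9) -> float:
    """Fraction of generated examples that are near-copies of some private example.

    Similarity is SequenceMatcher.ratio() (0.0 to 1.0); the 0.9 threshold is an
    illustrative assumption.
    """
    if not generated:
        return 0.0
    leaked = sum(
        1 for g in generated
        if any(SequenceMatcher(None, g, p).ratio() >= threshold for p in private)
    )
    return leaked / len(generated)


private = ["Jane, your verification code is 4821"]
generated = [
    "Jane, your verification code is 4821",  # verbatim copy: counts as leaked
    "Alex, your verification code is 0000",  # same pattern, different secret
    "Win a free cruise, reply YES",
    "Dinner at 7 tonight?",
]
print(f"leakage rate: {leakage_rate(generated, private):.0%}")
```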

Using a method commonly employed in Federated Learning called Secret Sharer, researchers measured how much personal information was retained during training. Secret Sharer involves embedding secret data points, or “canaries,” in the training dataset to gauge how much sensitive data is remembered.

For example, if “the verification code is 1234” is inserted as a canary, the AI should learn the general pattern of a “verification code” message without reproducing the secret value “1234.” Using this method, the researchers showed that having teacher agents transfer knowledge is safer than handing the private data directly to student agents.
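The exposure metric from the original Secret Sharer work can be sketched as follows: exposure = log2|R| - log2(rank), where R is the space of all possible secrets and rank 1 means the model finds the canary more likely than every alternative. The perplexity values below are random, hypothetical stand-ins for a real model's scores, and the sketch does not show how Mohtashami et al. adapt the metric to the Social Learning setting.

```python
import math
import random


def exposure(canary: str, perplexity: dict[str, float]) -> float:
    """Secret Sharer exposure: log2|R| - log2(rank of the canary).

    `perplexity` maps every candidate secret in R (including the canary) to the
    model's perplexity for it; lower perplexity = the model finds it more likely.
    """
    canary_ppl = perplexity[canary]
    # Rank 1 means no candidate is more likely than the canary.
    rank = 1 + sum(1 for ppl in perplexity.values() if ppl < canary_ppl)
    return math.log2(len(perplexity)) - math.log2(rank)


# Toy randomness space: every 4-digit verification code (10,000 candidates).
candidates = [f"{i:04d}" for i in range(10_000)]
rng = random.Random(0)

# Hypothetical scores from a model that did NOT memorize the canary "1234".
unmemorized = {c: rng.uniform(10.0, 20.0) for c in candidates}

# Hypothetical scores from a model that DID memorize it.
memorized = dict(unmemorized)
memorized["1234"] = 1.0  # far lower than any other candidate

print(f"not memorized: {exposure('1234', unmemorized):.2f} bits")
print(f"memorized:     {exposure('1234', memorized):.2f} bits")  # log2(10000) ~ 13.29
```

A fully memorized canary reaches the maximum exposure of log2(10,000), about 13.3 bits, while a canary the model treats like any other code stays near the low end of the scale.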

Early AI research drew inspiration from how neurons in the human brain transmit signals. While modern deep learning does not faithfully simulate the brain, AI training methods often resemble human learning processes, and ideas from psychology, such as reinforcement and social learning, have proven effective in training AI.

The results of these methods also echo human experience. For instance, the emergent abilities of LLMs show that AI can now act as a helpful assistant, even if it is not yet fully human-like. Demonstrating the utility of new learning methods like this one suggests that new architectures and paradigm shifts may be on the horizon, promising further advances in AI capabilities.