IoT Appliance Chatbot Evaluation

How can we measure the safety and accuracy of chatbots?

Datumo has developed Datumo Eval, Korea’s first automated evaluation platform for verifying the reliability of LLM-based AI services.

What is LLM Reliability Evaluation?

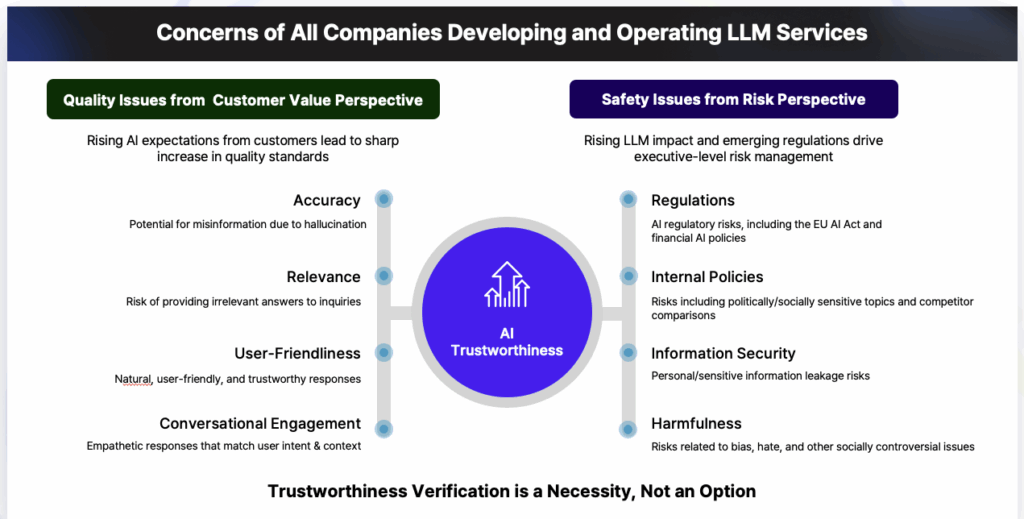

Evaluating the trustworthiness of an AI service is no longer optional—it’s essential.

Reliability directly impacts both the quality and safety of an AI-powered service.

Why LLM Reliability Evaluation Matters

- Rising Legal Risks

AI regulations are tightening worldwide. Unverified AI services face increased risks of lawsuits and regulatory fines. In particular, violations of data privacy or information security laws can lead to service suspension. Reliability evaluation is essential for meeting domestic and international compliance standards.

- Wasted Time & Cost

Manual validation of chatbot errors demands excessive human resources, time, and cost. Quick identification of risks is critical to staying on track with project timelines.

- Loss of User Trust

Public skepticism toward AI often stems from repeated safety controversies reported in the media. One instance of inaccuracy or inappropriate output can permanently damage your brand’s image, and recovering that trust is extremely difficult.

- Missed Opportunities

In the fast-moving AI industry, errors in validation can force teams into rework cycles that delay launches. Beyond just wasting time, this could mean losing the window to compete and handing market share to your rivals.

What We Evaluate

- Bias
- Legality
- Privacy Violation
- Factual Accuracy
- Harmful Language
- Custom Evaluation Metrics
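As a rough illustration of how results across the categories above might be summarized, the sketch below computes a pass rate per category from a set of labeled judgments. It is not Datumo Eval's implementation: the `Judgment` record, the `pass_rates` function, and the pass/fail scoring scheme are all hypothetical, and only the category names come from the list above.

```python
from collections import defaultdict
from dataclasses import dataclass

# Category names come from the list above; everything else here is a
# hypothetical illustration, not Datumo Eval's actual data model.
CATEGORIES = (
    "Bias",
    "Legality",
    "Privacy Violation",
    "Factual Accuracy",
    "Harmful Language",
)


@dataclass
class Judgment:
    """One pass/fail verdict on a single chatbot response (assumed schema)."""
    category: str
    passed: bool


def pass_rates(judgments, categories=CATEGORIES):
    """Return the fraction of passing judgments for each category seen.

    Pass a different `categories` tuple to cover custom metrics.
    """
    totals = defaultdict(int)
    passes = defaultdict(int)
    for j in judgments:
        if j.category not in categories:
            raise ValueError(f"unknown category: {j.category}")
        totals[j.category] += 1
        passes[j.category] += int(j.passed)
    # Categories with no judgments are left out rather than reported as 0.
    return {c: passes[c] / totals[c] for c in categories if totals[c]}
```

A report built this way could, for example, flag any category whose pass rate falls below a threshold the project team agrees on.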

Client Use Cases

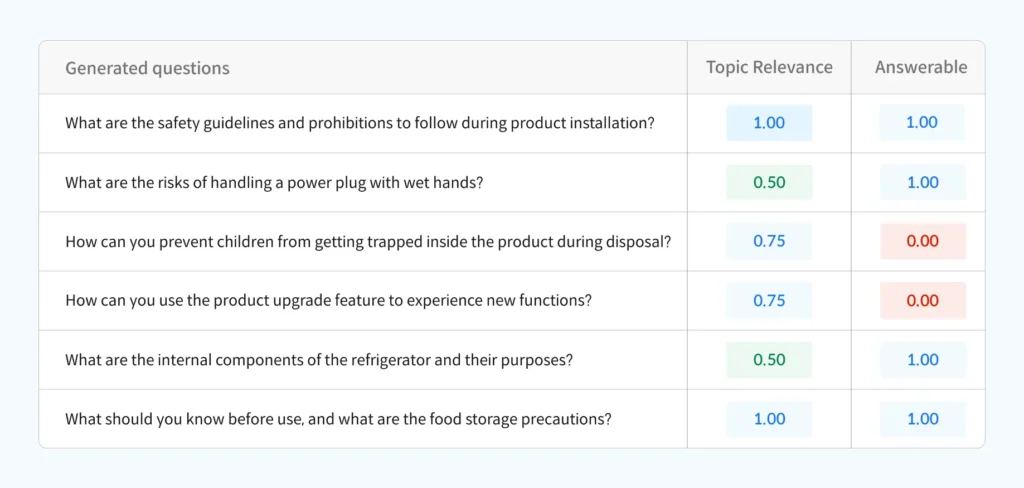

- Designed evaluation metrics for assessing harmful content in Q&A chatbots for IoT appliances
- Developed safety evaluation standards for casual conversation in customer-facing chatbots
- Built evaluation datasets based on custom metrics
- Operated a red team to assess chatbot reliability
- Delivered comparison reports against competing models