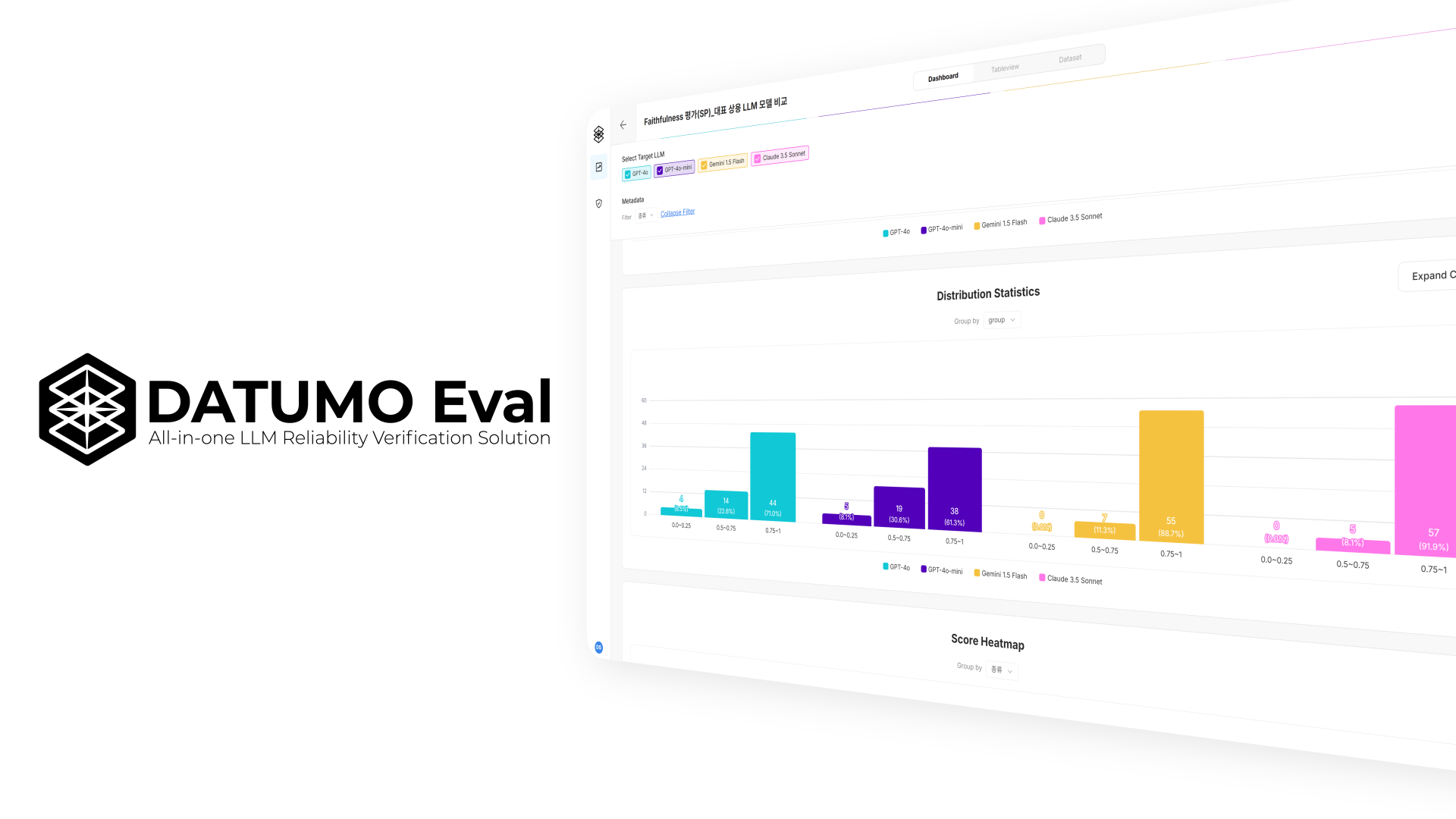

Technology for the marginalized 90 percent

*This dataset has been collected and annotated as part of Datumo’s <2021 AI Training Data Sponsorship Program> and is downloadable from Datumo’s Open Datasets website.

Datasets for those with visual and mobility impairment

How is the development of AI helping people with disabilities?

The first episode of “AI for The Underdogs” will cover the startup Wesee’s crosswalk dataset.

Who would benefit from the crosswalk dataset?

To build the crosswalk dataset, Datumo annotated video footage of pedestrians at crosswalks, which Datumo had also collected. The goal is to help uphold the rights of visually impaired pedestrians and, more broadly, to support research and development of transportation technology in general.

Did you know?

Despite the number of people who face mobility difficulties, crosswalk datasets of this volume did not exist before.

For this project, Datumo and Wesee focused on creating a dataset that would enable smoother mobility for people with visual or mobility impairments.

It is challenging for people with low vision to cross roads without assistance, especially on streets without audible signals or tactile paving. Hoping to build a better world for those who need such assistance, the founders of Wesee decided to create the crosswalk dataset with Datumo.

How did Datumo create the dataset?

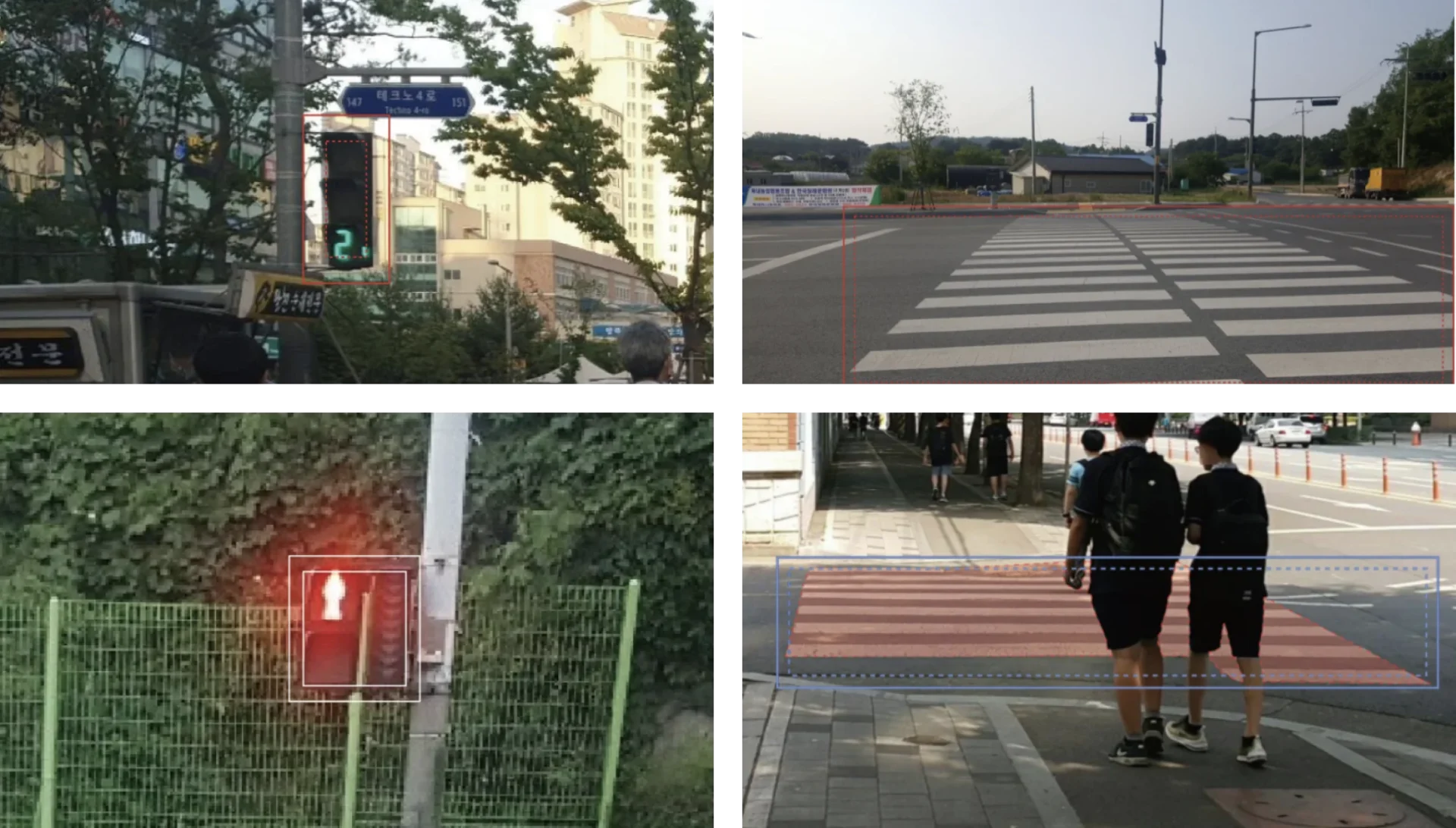

Datumo and NIA (National Information Society Agency) participated in AI Hub’s open datasets project and, using crowd-sourcing and similar-data filtering technology, collected 400,000 videos of various crossroads in Korea. From these videos, Datumo and Wesee collected and labeled a total of 36,808 images of pedestrian lights and crosswalks, each paired with a JSON annotation file (73,616 files in total).
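The article does not describe how the similar-data filtering works. Purely as an illustration, one common way to drop near-duplicate video frames is a perceptual difference hash (dHash) compared by Hamming distance. The frame format (an 8-row by 9-column grayscale grid), the function names, and the `max_distance` threshold below are all assumptions for this sketch, not Datumo's actual method.

```python
from typing import List, Sequence

# Assumption: each frame is already downscaled to an 8-row x 9-column
# grayscale grid (values 0-255). Decoding and resizing real video frames
# would need an image library and is omitted here.
Frame = Sequence[Sequence[int]]


def dhash(frame: Frame) -> int:
    """Difference hash: one bit per horizontally adjacent pixel pair (8 x 8 = 64 bits)."""
    bits = 0
    for row in frame:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def filter_similar(frames: List[Frame], max_distance: int = 5) -> List[int]:
    """Return indices of frames kept after dropping near-duplicates.

    A frame is dropped if its hash is within `max_distance` bits of a frame
    that was already kept (greedy, so the result depends on frame order).
    """
    kept_hashes: List[int] = []
    kept_indices: List[int] = []
    for i, frame in enumerate(frames):
        h = dhash(frame)
        if all(hamming(h, k) > max_distance for k in kept_hashes):
            kept_hashes.append(h)
            kept_indices.append(i)
    return kept_indices


if __name__ == "__main__":
    ascending = [[c * 10 for c in range(9)] for _ in range(8)]
    near_copy = [list(row) for row in ascending]
    near_copy[0][0] = 255  # one changed pixel flips a single hash bit
    descending = [[(8 - c) * 10 for c in range(9)] for _ in range(8)]
    print(filter_similar([ascending, near_copy, descending]))  # [0, 2]
```

Because the filter is greedy, which of two near-identical frames survives depends on their order in the input; a tighter or looser `max_distance` trades recall of duplicates against the risk of discarding genuinely different scenes.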

Dataset Specification

Total: 73,616 files (36,808 JPG images, 36,808 JSON annotation files)

(bbox_#: # is the number of bounding boxes in an image; each line gives the number of images × boxes per image)

bbox_1 : 22,879 x 1 = 22,879 boxes

bbox_2 : 13,325 x 2 = 26,650 boxes

bbox_3 : 369 x 3 = 1,107 boxes

bbox_4 : 190 x 4 = 760 boxes

bbox_5 : 29 x 5 = 145 boxes

bbox_6 : 16 x 6 = 96 boxes
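The bucket counts above can be cross-checked with a short tally. This sketch simply re-adds the published per-bucket figures; the dictionary name and the one-JSON-file-per-image assumption are illustrative. The image counts sum to 36,808 (73,616 files with their JSON annotations), while the box counts as listed sum to 51,637, one more than the 51,636 used in the completion rate, so one published figure appears to be off by one. The completion rate rounds to 172.12% either way.

```python
from typing import Dict, Tuple

# Per-bucket counts as listed in the specification:
# boxes per image -> number of images with that many boxes.
BBOX_BUCKETS: Dict[int, int] = {1: 22_879, 2: 13_325, 3: 369, 4: 190, 5: 29, 6: 16}


def tally(buckets: Dict[int, int]) -> Tuple[int, int, int]:
    """Return (images, boxes, files) implied by the bucket counts."""
    images = sum(buckets.values())
    boxes = sum(n_boxes * n_images for n_boxes, n_images in buckets.items())
    files = images * 2  # assumes exactly one JSON annotation file per image
    return images, boxes, files


print(tally(BBOX_BUCKETS))  # (36808, 51637, 73616)
```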

Completion rate: 51,636 / 30,000 boxes (172.12%)

*Completion rate = (number of annotated items) / (number of items requested by the client)
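The completion-rate formula is a plain ratio expressed as a percentage; a rate above 100% means more items were annotated than the client requested. A minimal sketch (the function name is illustrative), using the box figures from the specification:

```python
def completion_rate(annotated: int, requested: int) -> float:
    """Completion rate in percent: annotated items / requested items * 100."""
    if requested <= 0:
        raise ValueError("requested must be a positive count")
    return annotated / requested * 100


print(f"{completion_rate(51_636, 30_000):.2f}%")  # 172.12%
```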

Data format: PNG/JPG, JSON
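Since every image ships with a separate JSON annotation file, a first step when using the dataset is usually to pair the two. The article does not describe the file naming or the JSON schema, so this sketch assumes a hypothetical convention where an image and its annotation share a filename stem (for example `crosswalk_0001.jpg` and `crosswalk_0001.json`) and does not parse the JSON contents.

```python
from pathlib import PurePath
from typing import Dict, Iterable, List, Tuple

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def pair_annotations(
    filenames: Iterable[str],
) -> Tuple[Dict[str, Tuple[str, str]], List[str]]:
    """Pair each image with the JSON file that shares its stem.

    Returns (pairs, unmatched): pairs maps stem -> (image file, JSON file),
    unmatched lists files with no partner. The stem-matching convention is
    a hypothetical assumption, not a documented dataset layout.
    """
    images: Dict[str, str] = {}
    labels: Dict[str, str] = {}
    for name in filenames:
        path = PurePath(name)
        suffix = path.suffix.lower()
        if suffix in IMAGE_SUFFIXES:
            images[path.stem] = name
        elif suffix == ".json":
            labels[path.stem] = name
    shared = images.keys() & labels.keys()
    pairs = {stem: (images[stem], labels[stem]) for stem in shared}
    unmatched = sorted(
        [images[s] for s in images.keys() - shared]
        + [labels[s] for s in labels.keys() - shared]
    )
    return pairs, unmatched


files = ["crosswalk_0001.jpg", "crosswalk_0001.json", "crosswalk_0002.jpg", "crosswalk_0003.json"]
pairs, unmatched = pair_annotations(files)
print(pairs)      # {'crosswalk_0001': ('crosswalk_0001.jpg', 'crosswalk_0001.json')}
print(unmatched)  # ['crosswalk_0002.jpg', 'crosswalk_0003.json']
```

For a complete copy of the dataset, `unmatched` should be empty and `pairs` should contain 36,808 entries.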

Data collection and labeling were completed using the mobile version of Datumo’s crowd-sourcing platform, Cash Mission.

Great opportunity to secure high-quality data

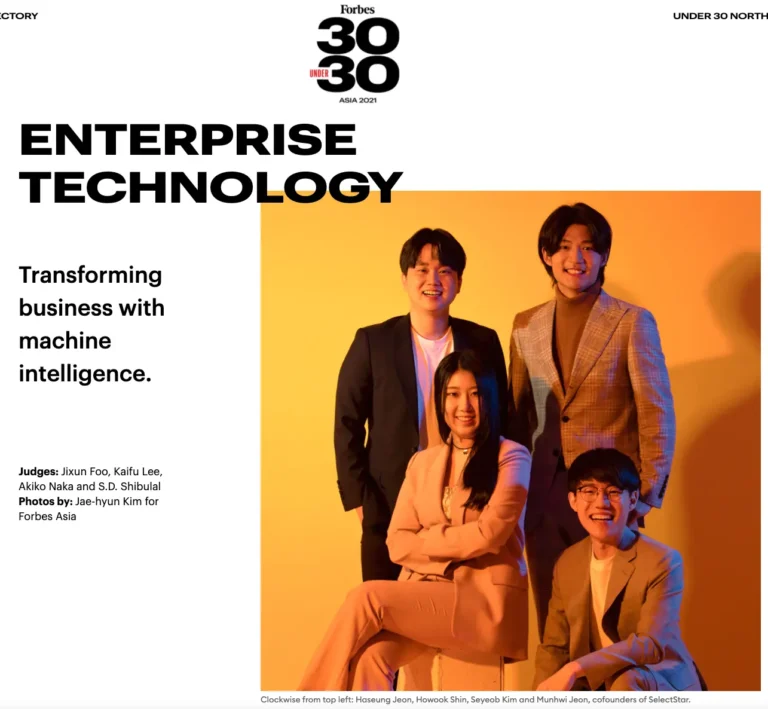

“AI quality heavily depends on the quality and quantity of datasets. We are delighted to have obtained great datasets on both counts through this project. The thought of making it more possible for visually impaired people to take a step forward in their lives has lifted the team’s spirits.

We hope the datasets built in this project will be useful not only to us but also to others who share a great passion for this work. We also thank DATUMO for putting so much effort into this project.”

SeonTaek Oh, Co-founder/CTO

How could these datasets be used?

WeSee hopes the datasets will be used to develop AI models for people with low vision.

The crosswalk dataset could be used to build wearable devices or voice AI services that help people with visual impairments cross streets and move around more safely.