LLM evaluation is more complex than it might seem. Since the emergence of ChatGPT (November 30, 2022) [1], a wave of Large Language Models (LLMs) has been released as if in competition. Just in 2024 alone, we’ve seen the launch of Gemini 1.5 pro (February 15) [2], LLaMA 3 (April 18) [3], GPT-4o (May 13) [4], Claude 3.5 Sonnet (June 21) [5], and LLaMA 3.1 (June 23) [6]. Each new model claims to outperform its predecessors. But how do we actually measure what’s better or worse?

To compare the performance of two models, you could place them side by side or give them a ‘test’ to compare scores—this is what we call LLM Evaluation. Why is LLM evaluation important? Simply put, it helps us verify whether the LLMs are functioning as we want them to. We set specific goals for the LLM and then assess how well it achieves those goals. For example, how accurately does it translate, answer questions, solve scientific problems, or respond fairly without social biases?

LLMs have diverse capabilities, making it difficult to evaluate them with just one metric. Beyond functionality, we must also assess the quality of the generated text. Recently, alignment with “human preferences or values” has become an important evaluation criterion. It’s particularly crucial to ensure that the LLM does not leak personal information, generate harmful content, reinforce biases, or spread misinformation.

LLM Evaluation Process

LLM evaluation process can be broadly divided into three parts [7]: what to evaluate, with what data to evaluate, and how to evaluate. Let’s dive into each.

1. What to Evaluate

What to evaluate depends on the specific capabilities of the LLM and which aspects of the LLM’s performance are of interest. There are various metrics for evaluating the LLM’s core ability to generate text:

- Accuracy: Does the generated content align with facts?

- Fluency: Is the sentence natural and easy to read?

- Coherence: Is the content logically consistent?

- Relevance: How well does the text align with the topic?

- Naturalness: Does the content appear to be written by a human?

- Factual Consistency: Is the information accurate?

- Bias: Does the generated content contain social biases?

- Harmfulness: Is there harmful content in the generated output?

- Robustness: Does it produce correct outputs even with incorrect input?

In addition to generation capabilities, traditional NLP tasks like sentiment analysis, text classification, natural language inference, translation, summarization, dialogue, question answering, and named entity recognition are also important evaluation factors. Besides traditional NLP task evaluation, expertise in STEM (Science, Technology, Engineering, Mathematics) fields or understanding of specific domains (e.g., finance, law, medicine) may also be assessed.

2. What Data to Use for Evaluation

‘What data to use for evaluation’ is about selecting the test for evaluation. You can think of the data as the test questions. For fairness, all models should take the same test, so we use the same dataset. These datasets are called benchmark datasets, and most models are evaluated using these benchmark datasets. When a new model claims, “We’re the best,” it often means, “We scored the highest on a benchmark dataset.” Let’s explore some representative benchmark datasets.

MMLU (Massive Multitask Language Understanding)

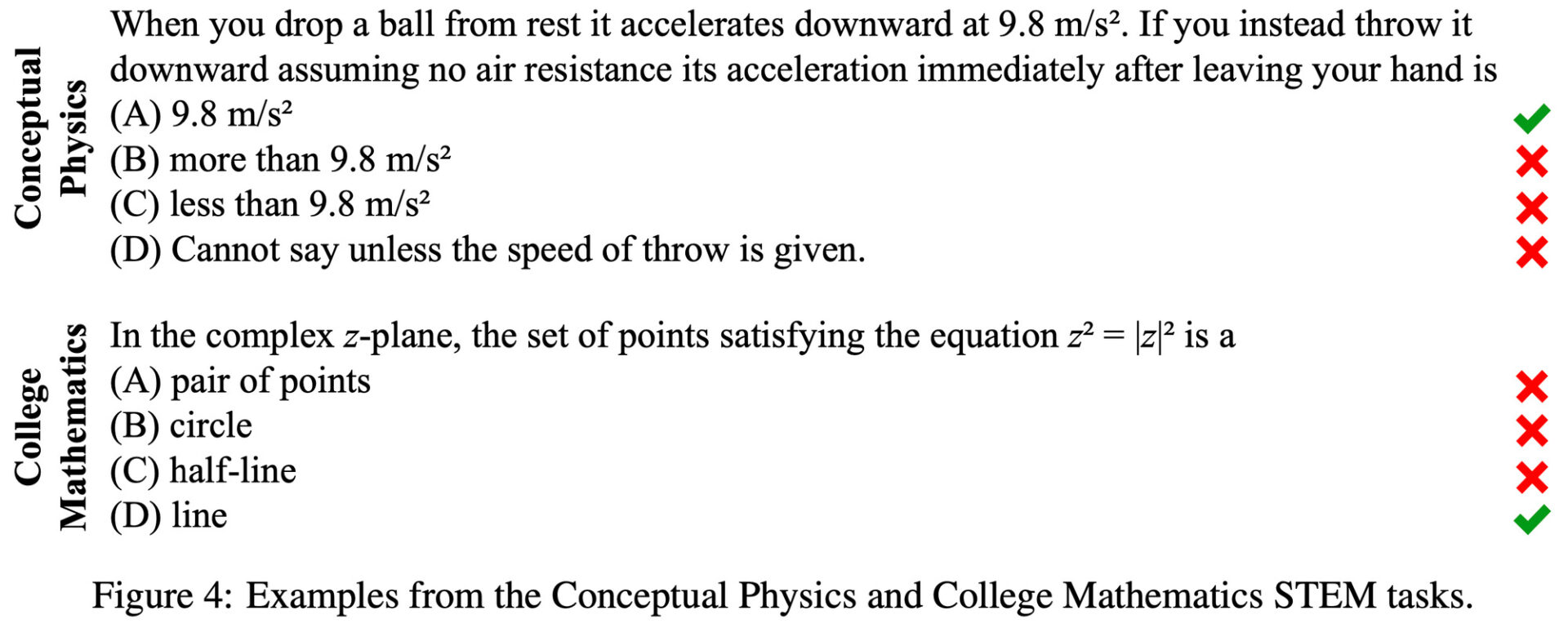

Created by UC Berkeley, this benchmark, as the name suggests, evaluates comprehensive language understanding [8]. It includes 57 different topics, ranging from STEM, humanities, and social sciences to more specialized fields like law and ethics. With around 16,000 questions, the difficulty ranges from elementary to expert level, making it a tool to assess a model’s diverse language understanding. The questions are mostly multiple-choice. Want a sneak peek at some real data?

(Image Source) [8]

The baseline for random choice (guessing) is 25%, non-expert humans scored 34.5% accuracy, and GPT-3 achieved 43.9% accuracy back in 2020. As of August 2024, the top scorer on the MMLU benchmark is Gemini 1.0 Ultra [9], with a 90.0% accuracy, slightly surpassing human expert accuracy of 89.8%, highlighting the rapid advancements in LLMs over just four years.

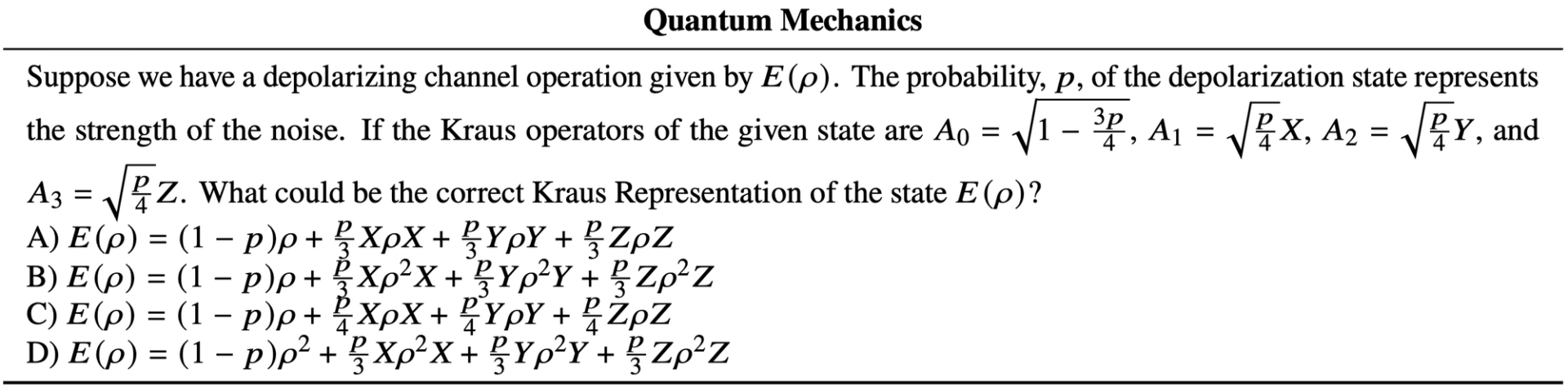

GPQA (A Graduate-Level Google-Proof Q&A Benchmark)

Google’s GPQA, as the name suggests, is a benchmark dataset composed of graduate-level questions [10]. It consists of 448 multiple-choice questions crafted by experts in physics, chemistry, and biology. The problem-solving accuracy for PhD holders or doctoral students in these fields is 65%, while highly trained non-experts score only 34%. GPQA is a very challenging benchmark. As of November 2023, GPT-4 scored 39% accuracy, highlighting its difficulty. Here’s a sample of the actual data. The question alone is tough enough to understand.

(Image Source) [10]

As of August 2024, the top scorer on the GPQA benchmark is Claude 3.5 Sonnet [5], with an accuracy of 59.4%. While this score still lags behind the recent MMLU score of 90.0%, the rapid progress in AI suggests that GPQA scores will also quickly improve.

HumanEval

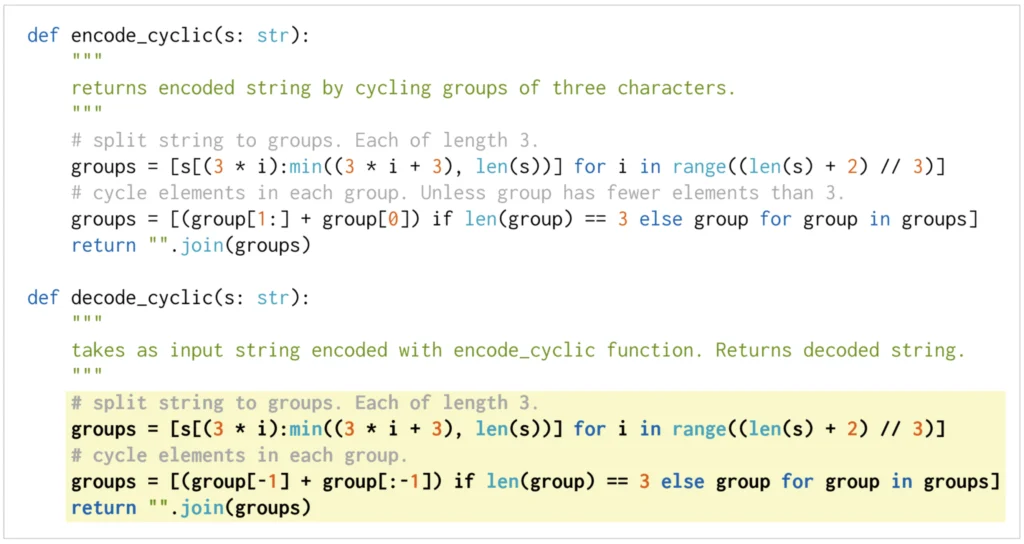

Developed by OpenAI, this dataset evaluates how well a model can generate Python functions from Python docstrings [11]. It consists of 164 programming tasks, with an average of 7.7 tests per problem. These tasks evaluate understanding of programming languages, algorithms, and basic mathematics, resembling typical software interview questions.

How do you evaluate the quality of function generation? HumanEval assesses accuracy by checking whether the generated code passes predefined unit tests. If the code generated by the model passes these unit tests, it’s considered correct. This approach focuses on the practical ability of the model to generate accurate and functional code, making it a more valuable evaluation method than merely comparing the code text. Here’s a sample of the actual data.

(Image Source) [11]

The code on the white background is the input prompt, and the code on the yellow background is the output generated by the model.

OpenAI created the Codex-12B model to tackle this challenge, and it successfully solved 28.8% of the tasks. In 2021, the GPT-3 model had a success rate close to 0%. Currently, the top scorer on the HumanEval benchmark is the LDB model, with an accuracy of 98.2% [12].

Benchmark Datasets

There are many different benchmark datasets for evaluating LLMs. Let’s take a look at some:

- ARC Challenge: A benchmark that evaluates a model’s reasoning ability through elementary school-level (U.S. grades 3-9) science exams.

- HellaSwag: A benchmark that assesses common sense reasoning by predicting various scenarios that could occur in everyday situations.

- TrustfulQA: A benchmark designed to evaluate the trustworthiness of a model’s answers.

All the datasets introduced so far are based on English. So, how can we evaluate a model’s Korean language capabilities?

Of course, there are Korean benchmark datasets available. One representative dataset is the Open Ko-LLM Leaderboard2 [13], created and managed jointly by SelectStar and Upstage. Open Ko-LLM Leaderboard (version 1) [14] is a dataset that translates existing English benchmark datasets such as ARC, HellaSwag, MMLU, and TrustfulQA into Korean. Leaderboard2 includes translated datasets like GPQA, GSM8K, and a newly added KorNAT [15] dataset, created by SelectStar, which evaluates how well the social values and basic knowledge of Koreans are reflected. This will be very useful when evaluating the Korean models you’ve created!

3. How to Evaluate

Finally, let’s talk about how to evaluate. Evaluation methods are broadly divided into automatic evaluation and human evaluation.

Automatic Evaluation

Automatic evaluation can be further divided into model-based evaluation and evaluation using LLMs. The most representative example of model-based evaluation is the accuracy-based assessment, where you determine how many multiple-choice questions the model gets right, as seen in the benchmark datasets above. It’s simple and straightforward. Another model-based evaluation method exists where each question has a correct (or reference) text, and the model evaluates the similarity to that reference. This approach was commonly used for traditional NLP tasks like translation or summarization, utilizing evaluation metrics such as perplexity, BLEU, ROUGE, and METEOR. These metrics compare the generated text with the reference text at the token level to quantify how predictable the text is and how well it reflects the reference content.

However, these evaluation metrics have limitations, such as not considering the context of the generated text (since they only compare tokens) or not aligning with actual human judgments. Therefore, alternative evaluation methods have recently been explored, namely using LLMs for evaluation. In this approach

, another LLM acts as a ‘judge’ to evaluate the text generated by the target LLM. The ‘judge LLM’ is given a prompt with the evaluation criteria and the generated text, and it assigns scores directly. Various methods, such as LLM-derived metrics, Prompting LLMs, Fine-tuning LLMs, and Human-LLM Collaborative Evaluation, are being researched [16]. Each method has its pros and cons, so the choice depends on the evaluation purpose and context.

Human Evaluation

Human evaluation, as the name suggests, involves humans, rather than models, directly evaluating the output. This approach can capture subjective content or nuances that automatic evaluation might miss and also consider ethical issues. It allows for more detailed assessment of the text’s naturalness, accuracy, and coherence. Human evaluation can be further divided into expert evaluation and crowdsourcing evaluation, depending on the evaluators. Expert evaluation involves reviewers with domain-specific knowledge assessing the model’s output. For example, experts in finance, law, or medicine can evaluate the model’s answers in their respective fields. This allows for much more accurate evaluation than general user assessments. On the other hand, crowdsourcing involves general users evaluating the model’s output based on fluency, accuracy, and appropriateness.

A downside to human evaluation is its lack of scalability. It requires significant time and money for humans to review and evaluate each question and answer, making large-scale evaluations challenging. Domain experts are already few in number and more costly than general evaluators, making large-scale expert evaluation even more difficult. Another drawback is inconsistency. Different evaluators may have different standards and interpretations, leading to inconsistent evaluation results. Cultural and personal differences can create high variability between evaluators, reducing the stability of the assessment.

As models continue to surpass human capabilities in more areas, evaluation standards and methodologies must evolve as well. It’s essential to figure out how to incorporate complex factors like ethics, multilingual abilities, and real-world applicability into evaluations.

When we consider not only superior performance but also the impact on real-life applications, we can truly say we’re creating AI that benefits humanity.

- https://openai.com/index/chatgpt/

- Gemini 1.5 pro, https://blog.google/technology/ai/google-gemini-next-generation-model-february-2024/

- LLaMA 3, https://ai.meta.com/blog/meta-llama-3/

- GPT-4o, https://openai.com/index/hello-gpt-4o/

- Claude 3.5 Sonnet, https://www.anthropic.com/news/claude-3-5-sonnet

- LLaMA 3.1, https://ai.meta.com/blog/meta-llama-3-1/

- https://arxiv.org/abs/2307.03109

- https://arxiv.org/abs/2009.03300

- https://deepmind.google/technologies/gemini/ultra/

- https://arxiv.org/abs/2311.12022

- https://arxiv.org/abs/2107.03374

- https://arxiv.org/abs/2402.16906

- https://huggingface.co/spaces/upstage/open-ko-llm-leaderboard

- https://huggingface.co/spaces/choco9966/open-ko-llm-leaderboard-old

- https://arxiv.org/abs/2402.13605

- https://arxiv.org/abs/2402.01383