On July 29th, 2024, Meta unveiled SAM 2 (Segment Anything Model 2). It has been about 1 year and 4 months since the initial release of SAM in April 2023. Let’s take a closer look at how much it has changed! 🧐

SAM, a Foundation Model for Segmentation

As its name suggests, SAM aims to “Segment Anything.” Segmentation means separating objects from the background and identifying exactly which region (which pixels) of the image belongs to each object. A familiar example is the background-removal feature in video-conferencing apps.
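To make “identifying which pixels belong to an object” concrete, here is a minimal sketch of background removal, assuming a segmentation model has already produced a binary mask (1 = person, 0 = background). The function name, the list-of-tuples image format, and the green fill color are illustrative assumptions, not taken from SAM.

```python
def remove_background(frame, mask, fill=(0, 255, 0)):
    """Keep pixels where mask == 1 (the person); replace all others with `fill`.

    `frame` is a list of rows of (R, G, B) tuples and `mask` is a same-shaped
    list of rows of 0/1 values, i.e. the output of a segmentation model.
    """
    return [
        [pixel if keep else fill for pixel, keep in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]


# A 2x3 toy frame in which the person occupies the middle column.
frame = [
    [(90, 90, 90), (200, 150, 120), (90, 90, 90)],
    [(90, 90, 90), (190, 140, 110), (90, 90, 90)],
]
person_mask = [
    [0, 1, 0],
    [0, 1, 0],
]
result = remove_background(frame, person_mask)
```

The segmentation model does the hard part (producing the mask); once the mask exists, replacing the background is a simple per-pixel selection.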

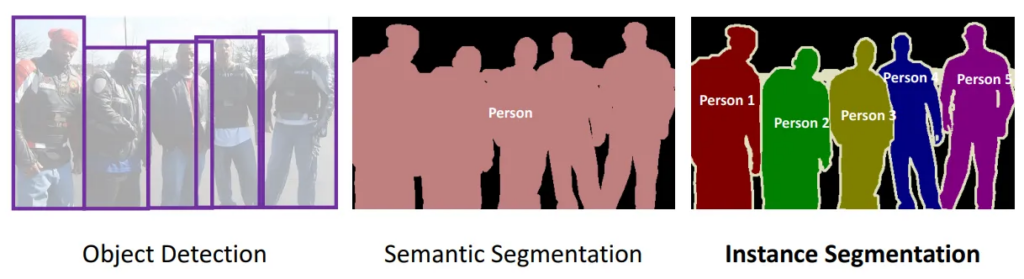

To do this, the criteria for dividing objects must be clear, and segmentation tasks are further classified according to these criteria. For instance, as shown in the image, segmentation can be done by semantic units (e.g., all people as one class) or by instance units (e.g., each individual person), depending on the goal.
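The difference between the two units can be sketched with label maps. In this minimal example (the helper names and the “0 = background” convention are assumptions for illustration), an instance map gives each person its own ID, and collapsing it to a semantic map keeps only the class name, so the two people become indistinguishable.

```python
def instance_to_semantic(instance_map, instance_class, background="background"):
    """Collapse an instance map into a semantic map.

    `instance_map` holds one integer ID per pixel (0 = background, 1, 2, ... =
    individual objects); `instance_class` maps each ID to its class name.
    """
    return [[instance_class.get(i, background) for i in row] for row in instance_map]


def count_instances(instance_map):
    """Number of distinct objects, ignoring the background ID 0."""
    return len({i for row in instance_map for i in row} - {0})


# Two different people (IDs 1 and 2) standing side by side.
instance_map = [
    [0, 1, 1, 2, 2],
    [0, 1, 1, 2, 2],
]
semantic_map = instance_to_semantic(instance_map, {1: "person", 2: "person"})
```

The instance map still reports two people, while every person pixel in the semantic map carries the same label, `"person"`.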

Source: Towards Data Science, “Single Stage Instance Segmentation — A Review” (https://towardsdatascience.com/single-stage-instance-segmentation-a-review-1eeb66e0cc49)

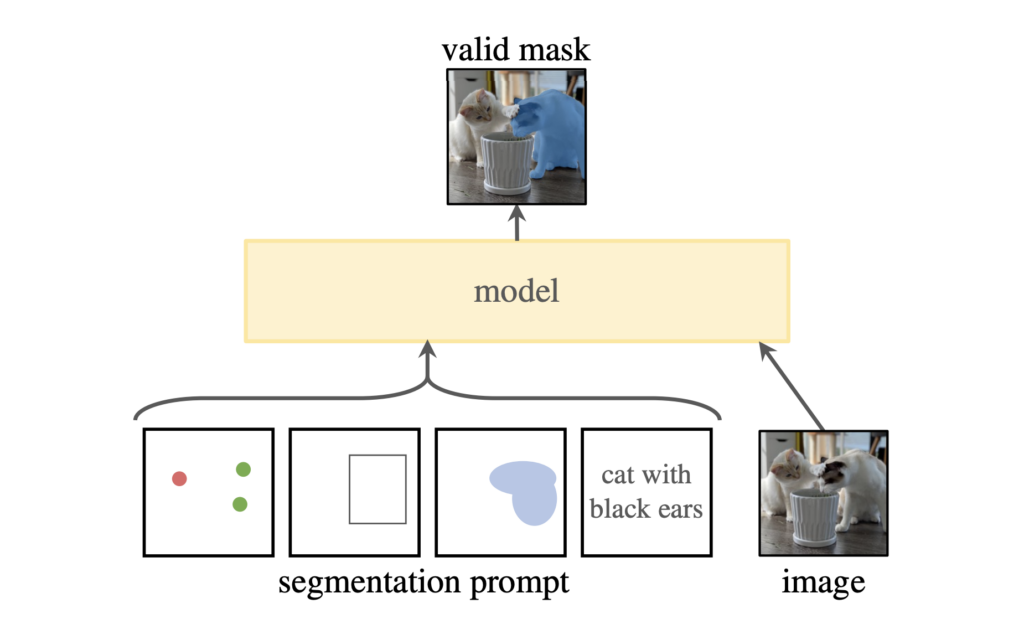

Even within the same image, the purpose of segmentation can vary with context, so some kind of “prompt” is needed to capture the user’s exact intent. Image models can accept a wider range of prompt types than language models: visual prompts include points clicked on a specific object, a box drawn around it, or a mask covering the desired area.
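Visual prompts are just small pieces of geometry. The sketch below represents them with hypothetical dataclasses (`PointPrompt`, `BoxPrompt`) and shows how a mask prompt can be reduced to its tight bounding box. For reference, the original `segment_anything` package’s `SamPredictor.predict` takes such prompts as NumPy arrays (`point_coords`, `point_labels`, `box`); the classes here are an illustration, not that API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PointPrompt:
    x: int
    y: int
    positive: bool = True  # True: "this pixel is on the object"; False: "it is not"


@dataclass
class BoxPrompt:
    x0: int
    y0: int
    x1: int
    y1: int  # inclusive corner coordinates (an assumption of this sketch)


def box_from_mask(mask: List[List[int]]) -> BoxPrompt:
    """Derive the tightest box around a binary mask (turning a mask prompt into a box prompt)."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        raise ValueError("empty mask")
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return BoxPrompt(min(xs), min(ys), max(xs), max(ys))


shirt_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 0],
]
click = PointPrompt(x=2, y=1)   # a positive click on the object
box = box_from_mask(shirt_mask)  # BoxPrompt(x0=1, y0=1, x1=2, y1=2)
```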

Examples of various segmentation prompts. Source: Segment Anything (Kirillov et al., 2023) https://arxiv.org/abs/2304.02643

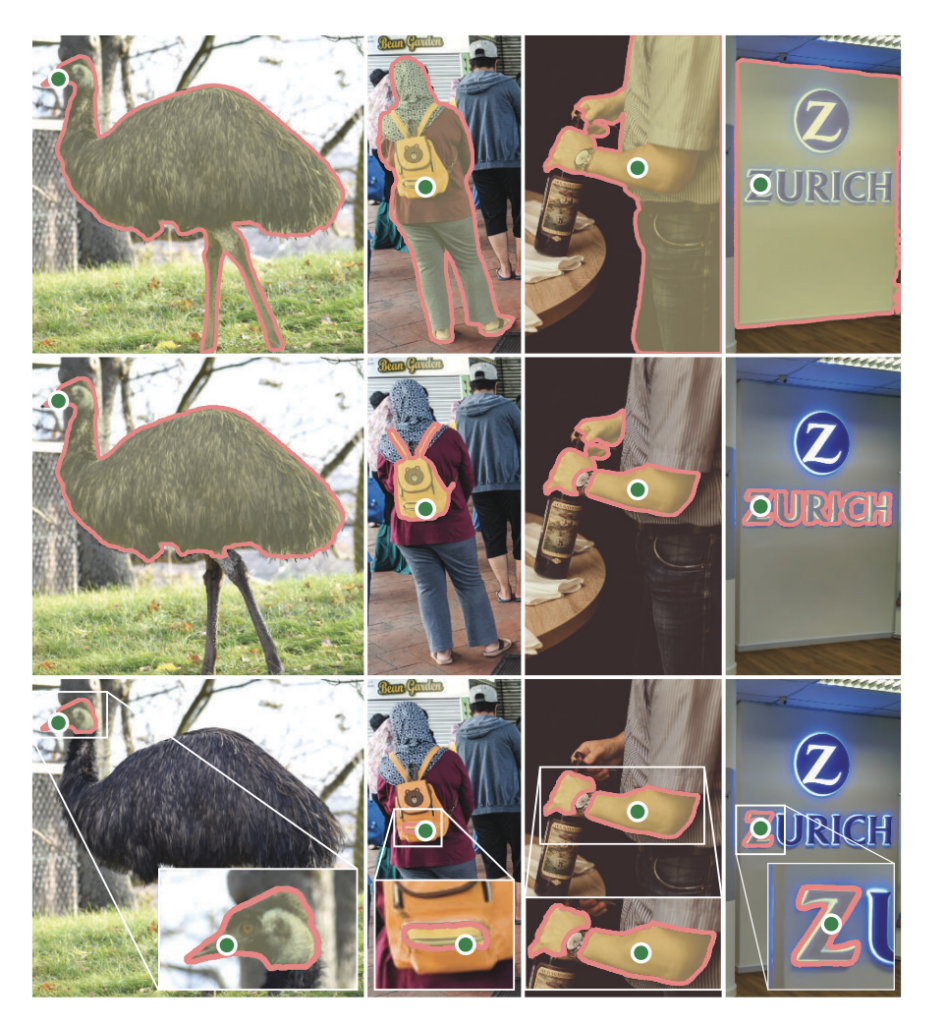

The main challenge of segmentation is ambiguity. Even with a prompt, a single point or mask area may not reveal exactly what the user wants. For example, if you click on the clothing in an image of a person, it may be unclear whether you intend to select the whole person or just the clothing. To address this, SAM predicts multiple valid masks (whole, part, and subpart) for a single input, allowing for more flexible interpretations of the user’s intent.
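One way to picture this is as a small set of nested candidate masks, each with a confidence score (SAM predicts an estimated IoU for each of its output masks). The sketch below is a hypothetical post-processing step, not SAM’s code: it orders candidates from subpart to whole by area and picks the most confident one as a default. The masks and scores are made-up toy values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    mask: List[List[int]]  # binary mask
    score: float           # model's own quality estimate for this mask


def area(mask):
    return sum(sum(row) for row in mask)


def rank_by_granularity(candidates):
    """Order nested candidates from smallest (subpart) to largest (whole)."""
    return sorted(candidates, key=lambda c: area(c.mask))


def most_confident(candidates):
    """Default choice when the user gives no further hint: the highest score."""
    return max(candidates, key=lambda c: c.score)


# Toy masks for one click on a shirt: pocket (subpart), shirt (part), person (whole).
subpart = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
part = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]
whole = [[0, 1, 0], [1, 1, 1], [1, 0, 1]]
candidates = [Candidate(whole, 0.86), Candidate(part, 0.91), Candidate(subpart, 0.52)]
```

Returning several nested masks lets an application (or the user) resolve the ambiguity afterwards instead of forcing the model to guess once.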

For a single ambiguous prompt, SAM predicts masks at the whole, part, and subpart levels of an object. Source: Segment Anything (Kirillov et al., 2023) https://arxiv.org/abs/2304.02643

SAM 2: What Has Changed?

What’s different about SAM 2? The answer lies in the title of the paper, “SAM 2: Segment Anything in Images and Videos”: SAM 2 now supports segmentation in videos.

Videos aren’t just a collection of images; they add the dimension of ‘time.’ SAM 2 must keep segmenting the same object consistently as the video progresses, even when the object moves, changes appearance, or is briefly occluded, which makes this a much more complex task. Let’s take a closer look at the overall structure of SAM 2.

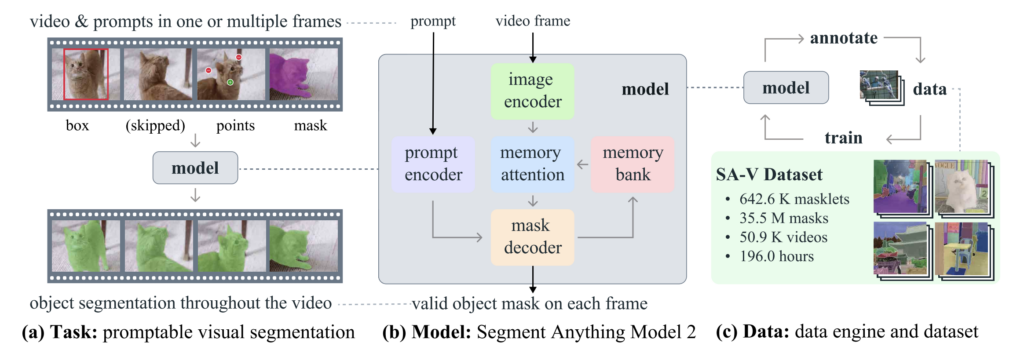

For a video, prompts such as boxes, points, or masks are provided on one or more frames. Each frame passes through an image encoder, the prompts pass through a prompt encoder, and a mask decoder combines the two to predict the mask of the target object.
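The data flow (frame to image encoder, prompt to prompt encoder, both to mask decoder) can be sketched with toy stand-ins. Everything below is hypothetical and deliberately trivial: the real encoders and decoder are neural networks, whereas here the “feature” is pixel brightness and the “decoder” selects pixels similar to the clicked one. Only the wiring between the three parts mirrors the description above.

```python
def image_encoder(frame):
    """Stand-in for the image encoder: one scalar feature (brightness) per pixel."""
    return [[sum(pixel) / 3 for pixel in row] for row in frame]


def prompt_encoder(point):
    """Stand-in for the prompt encoder: a point prompt is kept as its (row, col)."""
    return point


def mask_decoder(features, prompt, tol=10.0):
    """Stand-in for the mask decoder: select pixels whose feature is close to the prompted pixel's."""
    row, col = prompt
    target = features[row][col]
    return [[1 if abs(f - target) <= tol else 0 for f in feat_row] for feat_row in features]


def segment_frame(frame, point):
    """The image and the prompt are encoded separately, then combined by the decoder."""
    return mask_decoder(image_encoder(frame), prompt_encoder(point))


frame = [
    [(200, 200, 200), (10, 10, 10)],
    [(205, 195, 200), (0, 0, 0)],
]
mask = segment_frame(frame, (0, 0))
```

Keeping the image encoder separate from the prompt path means the expensive frame encoding can be computed once and reused for many different prompts.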

The new components are designed specifically for predicting masks in video, and the two most notable are Memory Attention and the Memory Bank. Memory Attention conditions the features of the current frame on the features and predictions from previous frames, while the Memory Bank stores information about past predictions of the target object in the video. As mentioned earlier, temporal consistency is crucial in video; to maintain it, the researchers designed the model to draw on previous frames when predicting the mask for the next frame.
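A minimal sketch of the idea, loosely modeled on the Memory Bank and Memory Attention described above: recent-frame memories sit in a fixed-size FIFO queue, memories from frames the user prompted are always kept, and the current frame’s features attend over all stored memories. The vector sizes, the `max_recent` value, and the single-head attention with a residual add are simplifying assumptions; SAM 2 itself uses transformer blocks over spatial feature maps.

```python
import math
from collections import deque


class MemoryBank:
    """Toy memory bank: a FIFO of recent-frame memories plus all prompted-frame memories."""

    def __init__(self, max_recent=6):
        self.recent = deque(maxlen=max_recent)  # oldest entries are dropped automatically
        self.prompted = []                       # frames the user prompted are always kept

    def add(self, memory, prompted=False):
        (self.prompted if prompted else self.recent).append(memory)

    def memories(self):
        return self.prompted + list(self.recent)


def memory_attention(query, memories):
    """Condition the current frame's feature vector on the stored memories.

    Scaled dot-product attention with the memories as both keys and values,
    added back to the query as a residual.
    """
    if not memories:
        return list(query)
    d = len(query)
    scores = [sum(q * m for q, m in zip(query, mem)) / math.sqrt(d) for mem in memories]
    top = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    context = [sum(w * mem[i] for w, mem in zip(weights, memories)) / total for i in range(d)]
    return [q + c for q, c in zip(query, context)]


bank = MemoryBank(max_recent=2)
bank.add([1.0, 0.0], prompted=True)              # memory of the prompted first frame
for feat in ([0.0, 1.0], [0.5, 0.5], [0.9, 0.1]):
    bank.add(feat)                               # only the 2 most recent survive
conditioned = memory_attention([1.0, 0.0], bank.memories())
```

Keeping prompted frames outside the FIFO means the user’s explicit guidance is never forgotten, while the bounded queue keeps memory cost constant on long videos.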

The Data Engine Behind SAM 2

To build SA-V, a massive dataset of 50.9K videos, 642.6K masklets (object masks tracked across the frames of a video), and 35.5M masks, Meta used its own data engine. SAM 2’s data engine is divided into three stages:
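A masklet can be pictured as a mapping from frame index to that frame’s mask for one object. The sketch below uses a hypothetical `Masklet` class and does some back-of-the-envelope arithmetic on the totals quoted above (which are already rounded), giving roughly 55 masks per masklet and about 12 to 13 masklets per video on average.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Totals quoted for the SA-V dataset (already rounded).
NUM_VIDEOS = 50_900
NUM_MASKLETS = 642_600
NUM_MASKS = 35_500_000


@dataclass
class Masklet:
    """One object tracked through a video: frame index -> binary mask for that frame."""
    object_id: int
    masks: Dict[int, List[List[int]]] = field(default_factory=dict)

    def num_masks(self) -> int:
        return len(self.masks)


masks_per_masklet = NUM_MASKS / NUM_MASKLETS    # roughly 55 masks per tracked object
masklets_per_video = NUM_MASKLETS / NUM_VIDEOS  # roughly 12 to 13 objects per video

toy = Masklet(object_id=1, masks={0: [[1, 0]], 1: [[1, 1]]})
```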

1. Stage 1: The first stage uses the original, image-based SAM. Human annotators label the target object frame by frame, assisted by SAM and by manual editing tools. Though time-consuming and expensive, this ensures high-quality data.

2. Stage 2: A preliminary version, SAM 2 Mask, which accepts only masks as prompts, is added to the loop. Annotators first create a mask with SAM, and SAM 2 Mask propagates it to the other frames of the video, keeping it consistent along the time axis. In later frames, annotators refine the masks with tools such as a ‘brush’ and an ‘eraser’ and feed them back into SAM 2 Mask, creating a loop of data generation and model training for SAM 2.

3. Stage 3: In the final stage, the full SAM 2, which accepts all prompt types, comes into play. Annotators only need to click on the frames that require edits instead of starting from scratch, because SAM 2 can refine an existing mask without re-annotation. This made it possible to create a large, high-quality dataset while the model kept improving on video masking, a loop that ultimately produced SAM 2.
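The efficiency gain of the later stages comes from annotators touching only the frames where the model goes wrong. The loop below is a hypothetical sketch of that idea: `propagate`, `needs_fix`, and `correct` are stand-ins for the model, the annotator’s check, and the annotator’s clicks, and a “mask” is reduced to a single number (the object’s column) to keep the example tiny. In the real system a correction also updates the model’s memory for later frames, which this sketch ignores.

```python
def annotate_video(num_frames, first_mask, propagate, needs_fix, correct):
    """Sketch of a model-in-the-loop annotation pass over one video.

    propagate(prev_mask) -> mask : stand-in for the model carrying the mask forward
    needs_fix(t, mask) -> bool   : stand-in for the annotator's visual check
    correct(t, mask) -> mask     : stand-in for the annotator's corrective clicks
    Returns the per-frame masks and the number of frames the annotator touched.
    """
    masks = [first_mask]
    touched = 1  # the first frame is always prompted by hand
    for t in range(1, num_frames):
        mask = propagate(masks[-1])
        if needs_fix(t, mask):
            mask = correct(t, mask)
            touched += 1
        masks.append(mask)
    return masks, touched


# Toy ground truth: the object's column in each of 6 frames.
truth = [0, 0, 1, 1, 1, 2]
masks, touched = annotate_video(
    num_frames=len(truth),
    first_mask=truth[0],
    propagate=lambda prev: prev,            # naive tracker: assume no motion
    needs_fix=lambda t, m: m != truth[t],
    correct=lambda t, m: truth[t],
)
```

Here the annotator touches 3 of 6 frames, whereas fully manual, frame-by-frame labeling as in Stage 1 would touch all 6; a better propagation model shrinks that number further.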

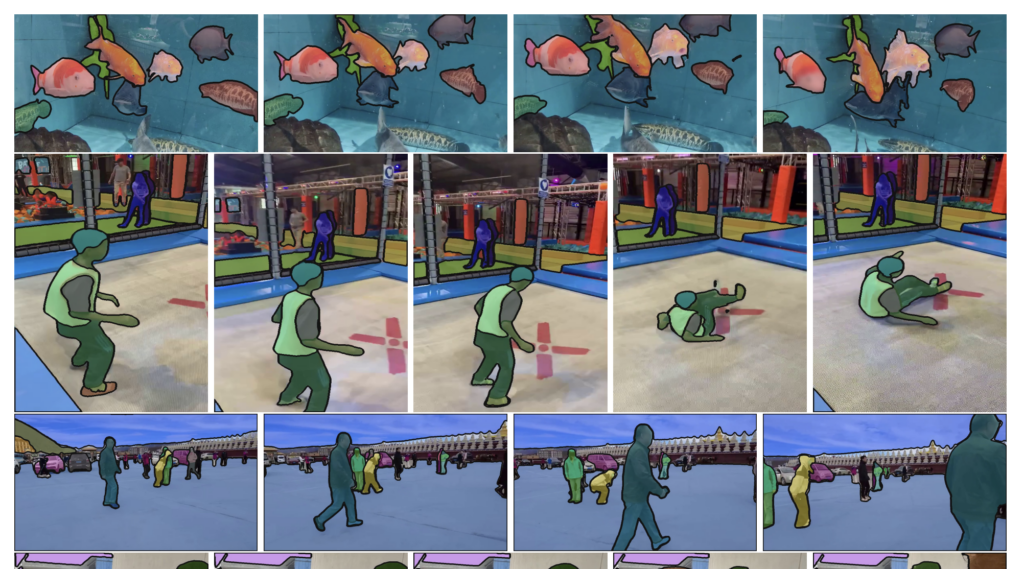

SA-V Dataset example

SAM, the foundation model for image segmentation, has evolved once again and now handles video as well. Just as SAM inspired many lines of research, SAM 2 is expected to have a significant impact. We can look forward to advances in everyday video editing tools and in research on the video modality. 🌟