Why are medical school programs so long worldwide? The answer lies in the immense volume of knowledge medical professionals must master. Medicine isn’t just about facts—it’s about navigating complex relationships, countless variables, and making precise, reliable decisions under pressure.

Complicating matters further, medical information is vast and fragmented, spread across research papers, databases, and clinical records. Finding the right data, verifying connections, and drawing trustworthy conclusions is no easy task.

To address this challenge, researchers at Harvard University and other institutions developed the KGARevion agent. Unlike traditional systems that merely retrieve information, KGARevion verifies relationships and corrects errors in order to deliver more reliable answers.

Let’s take a closer look at how KGARevion tackles these challenges.

KGARevion: Why Do We Need It?

First, consider the limitations KGARevion aims to overcome.

The Limitations of RAG

Traditional RAG (Retrieval-Augmented Generation) systems retrieve documents and generate responses, but they may struggle to explain complex relationships clearly. This is because RAG focuses on retrieving independent chunks of information rather than interconnected, relational data. In fields like medicine, where relationships must be explicitly validated and errors can have serious consequences, this approach often falls short.

The Limitations of LLMs

As we’ve explored in our previous letter, LLMs can suffer from hallucinations and incorrect and/or shallow reasoning. Following complex logical pathways and ensuring deep, reliable reasoning remains a significant challenge.

The Limitations of Knowledge Graphs (KG)

Knowledge graphs excel at providing clear relational information but cannot generate answers on their own. They simply map connections between data points without the ability to synthesize or articulate a response.

How does KGARevion propose to address these three challenges? Let’s dive deeper.

How KGARevion Works

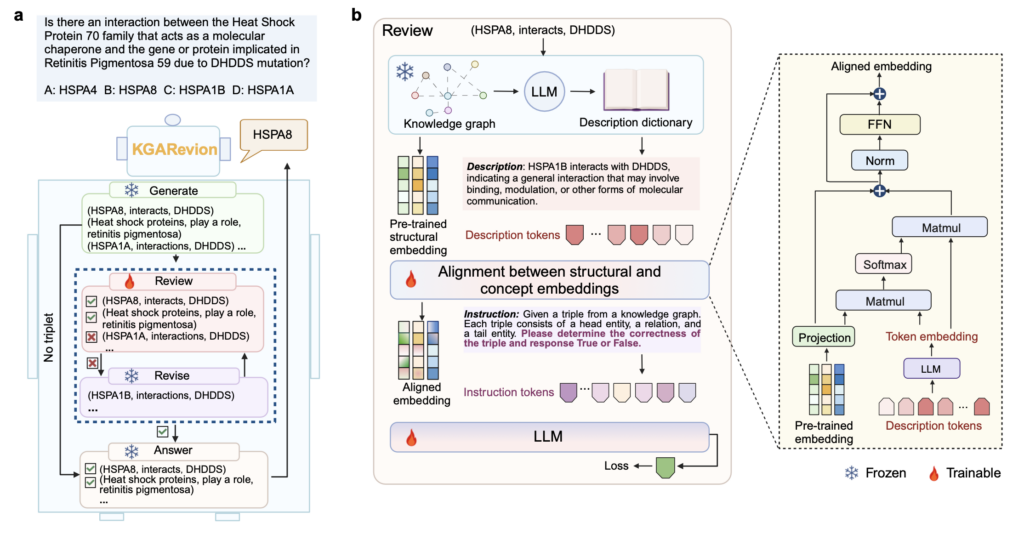

KGARevion follows four key stages: Generate, Review, Revise, and Answer. Let’s start with the first stage.

1. Generate

The process begins by identifying the question type. Questions generally fall into two categories: choice-aware (requiring selection from multiple options) and non-choice-aware (yes/no type questions).

Depending on the question type, KGARevion uses an LLM to extract three key elements, forming what’s known as a triplet: subject, relation, and object.

Let’s look at two examples:

Example 1: Choice-Aware Question

Q: “Which protein inhibits the progression of Retinitis Pigmentosa 59?”

(A) HSPA8

(B) CRYAB

(C) Heat Shock Protein 70

Generated Triplet: (HSPA8, inhibits, Retinitis Pigmentosa 59)

Example 2: Non-Choice-Aware Question

Q: “Is Retinitis Pigmentosa 59 related to HSPA8?”

(A) Yes

(B) No

Generated Triplet: (Retinitis Pigmentosa 59, related to, HSPA8)

In this stage, KGARevion effectively breaks down the question into clear, analyzable components, laying the foundation for the next steps: verification, correction, and answer generation.
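The Generate step can be sketched in Python. This is a minimal sketch under stated assumptions: `Triplet`, `is_choice_aware`, and `generate_triplets` are hypothetical names; the entities and relation are passed in directly instead of being extracted by an LLM; the yes/no rule is a stand-in for KGARevion's own question-type decision; and choice-aware questions are assumed to yield one triplet per answer candidate. Triplets are written subject-first, so (HSPA8, inhibits, Retinitis Pigmentosa 59) reads as "HSPA8 inhibits Retinitis Pigmentosa 59".

```python
from typing import List, NamedTuple


class Triplet(NamedTuple):
    """A (subject, relation, object) fact, read as 'subject relation object'."""
    subject: str
    relation: str
    obj: str


def is_choice_aware(options: List[str]) -> bool:
    """Hypothetical heuristic: a pure yes/no option set is non-choice-aware;
    any other option set (e.g. candidate proteins) is choice-aware."""
    return {opt.strip().lower() for opt in options} != {"yes", "no"}


def generate_triplets(relation: str, target: str, options: List[str],
                      stem_entity: str = "") -> List[Triplet]:
    """Build triplets from pieces an LLM would normally extract (assumption).

    Choice-aware: assume one triplet per answer candidate.
    Non-choice-aware: a single triplet built from the question stem.
    """
    if is_choice_aware(options):
        return [Triplet(candidate, relation, target) for candidate in options]
    return [Triplet(stem_entity, relation, target)]


# Example 1 (choice-aware): which protein inhibits Retinitis Pigmentosa 59?
choice = generate_triplets("inhibits", "Retinitis Pigmentosa 59",
                           ["HSPA8", "CRYAB", "Heat Shock Protein 70"])
print(choice[0])
# Triplet(subject='HSPA8', relation='inhibits', obj='Retinitis Pigmentosa 59')

# Example 2 (non-choice-aware): is Retinitis Pigmentosa 59 related to HSPA8?
yes_no = generate_triplets("related to", "HSPA8", ["Yes", "No"],
                           stem_entity="Retinitis Pigmentosa 59")
print(yes_no[0])
# Triplet(subject='Retinitis Pigmentosa 59', relation='related to', obj='HSPA8')
```

Generating one triplet per candidate lets the later stages check each option independently instead of trusting a single guess.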

a) Overview of KGARevion. b) Architecture of the fine-tuning stage in the Review action: embeddings obtained from KGs are structural embeddings, while concept embeddings come from LLMs.

2. Review

At this stage, the Knowledge Graph (KG) steps in to verify the relationships identified by the LLM-generated triplets.

Using its pre-existing knowledge network, the KG checks:

- Whether the relationship exists in the graph.

- Whether the relationship is reliable and valid.

Verification Example:

Triplet: (HSPA8, inhibits, Retinitis Pigmentosa 59)

KG Verification Result: The KG confirms the relationship “HSPA8 inhibits Retinitis Pigmentosa 59” as valid and trustworthy.

Error Detection Example:

Triplet: (HSPA8, promotes, Retinitis Pigmentosa 59)

KG Verification Result: The KG does not confirm this relationship, so it is flagged as an error.
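A toy version of the Review check treats the KG as a set of known-true triplets and accepts a triplet only if it appears there exactly. This is an assumption made for illustration: `TOY_KG` and `review` are hypothetical, and according to the figure caption, KGARevion's Review action relies on a fine-tuned model that combines KG structural embeddings with LLM concept embeddings rather than a plain lookup. Triplets are written subject-first, e.g. (HSPA8, inhibits, Retinitis Pigmentosa 59).

```python
from typing import NamedTuple, Set


class Triplet(NamedTuple):
    subject: str
    relation: str
    obj: str


# Hypothetical toy KG: a set of triplets assumed to be true.
TOY_KG: Set[Triplet] = {
    Triplet("HSPA8", "inhibits", "Retinitis Pigmentosa 59"),
}


def review(triplet: Triplet, kg: Set[Triplet]) -> bool:
    """Return True only if the KG contains this exact triplet.

    A deliberate simplification of KGARevion's Review action.
    """
    return triplet in kg


print(review(Triplet("HSPA8", "inhibits", "Retinitis Pigmentosa 59"), TOY_KG))  # True
print(review(Triplet("HSPA8", "promotes", "Retinitis Pigmentosa 59"), TOY_KG))  # False
```

Exact matching is brittle (synonyms or unmapped entities would simply fail), which is one reason a learned verifier is attractive in practice.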

3. Revise

In this step, errors or missing relationships detected during the verification process are corrected or supplemented. The revised information is then used by the LLM to refine the generated response.

Correction Example:

Initial Triplet: (HSPA8, promotes, Retinitis Pigmentosa 59)

KG Verification Result: Relationship error detected.

Revised Triplet: (HSPA8, inhibits, Retinitis Pigmentosa 59)

Through this process, inconsistencies are resolved, and the foundation for accurate responses is reinforced.
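The Revise step can be approximated by swapping in alternative relations and re-checking each candidate against the KG. KGARevion uses the LLM to revise triplets; the rule-based loop below, along with `CANDIDATE_RELATIONS`, `TOY_KG`, and `revise`, is a hypothetical stand-in meant only to show the revise-then-re-review cycle on the subject-first triplet (HSPA8, promotes, Retinitis Pigmentosa 59).

```python
from typing import Iterable, NamedTuple, Optional, Set


class Triplet(NamedTuple):
    subject: str
    relation: str
    obj: str


# Hypothetical toy KG and relation vocabulary, for illustration only.
TOY_KG: Set[Triplet] = {Triplet("HSPA8", "inhibits", "Retinitis Pigmentosa 59")}
CANDIDATE_RELATIONS = ("inhibits", "promotes", "related to")


def revise(triplet: Triplet, kg: Set[Triplet],
           relations: Iterable[str] = CANDIDATE_RELATIONS) -> Optional[Triplet]:
    """Return a KG-confirmed version of `triplet`, or None if no swap works.

    Rule-based stand-in for LLM-driven revision: replace the relation with
    each alternative in turn and re-run the (exact-match) review on it.
    """
    if triplet in kg:
        return triplet  # already verified; nothing to revise
    for relation in relations:
        if relation == triplet.relation:
            continue
        candidate = triplet._replace(relation=relation)
        if candidate in kg:  # re-review the revised triplet
            return candidate
    return None  # unresolved: no alternative relation is confirmed


print(revise(Triplet("HSPA8", "promotes", "Retinitis Pigmentosa 59"), TOY_KG))
# Triplet(subject='HSPA8', relation='inhibits', obj='Retinitis Pigmentosa 59')
```

Returning `None` for unresolved triplets keeps unsupported claims out of the final answer instead of passing them through unchecked.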

4. Answer

In the final stage, the LLM generates a natural, coherent answer based on the relationships that the KG has verified and the Revise step has corrected.

Answer Example:

Triplet: (HSPA8, inhibits, Retinitis Pigmentosa 59)

Final Answer: “The HSPA8 protein is likely to inhibit the progression of Retinitis Pigmentosa 59.”
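The Answer step, reduced to its simplest form, selects the option that the verified triplets support. In KGARevion the LLM writes the final answer from the verified triplets; the `answer` function below is a hypothetical, template-based stand-in that only illustrates how verified, subject-first triplets such as (HSPA8, inhibits, Retinitis Pigmentosa 59) constrain the choice.

```python
from typing import Dict, List, NamedTuple


class Triplet(NamedTuple):
    subject: str
    relation: str
    obj: str


def answer(verified: List[Triplet], options: Dict[str, str]) -> str:
    """Pick the first option whose entity is the subject of a verified triplet.

    Hypothetical stand-in for KGARevion's final LLM call, which would use the
    verified triplets as context and phrase the answer in natural language.
    """
    for label, entity in options.items():
        for t in verified:
            if t.subject == entity:
                return (f"({label}) {entity}, supported by the verified triplet "
                        f"({t.subject}, {t.relation}, {t.obj})")
    return "No option is supported by the verified triplets."


verified = [Triplet("HSPA8", "inhibits", "Retinitis Pigmentosa 59")]
options = {"A": "HSPA8", "B": "CRYAB", "C": "Heat Shock Protein 70"}
print(answer(verified, options))
# (A) HSPA8, supported by the verified triplet (HSPA8, inhibits, Retinitis Pigmentosa 59)
```

Tying the answer to a specific verified triplet also gives the reader a traceable justification, which matters in a medical setting.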

This structured approach (generate, review, revise, answer) is designed to help KGARevion produce trustworthy, accurate, and contextually relevant answers, even in complex fields like medical research.

How Much More Accurate is KGARevion?

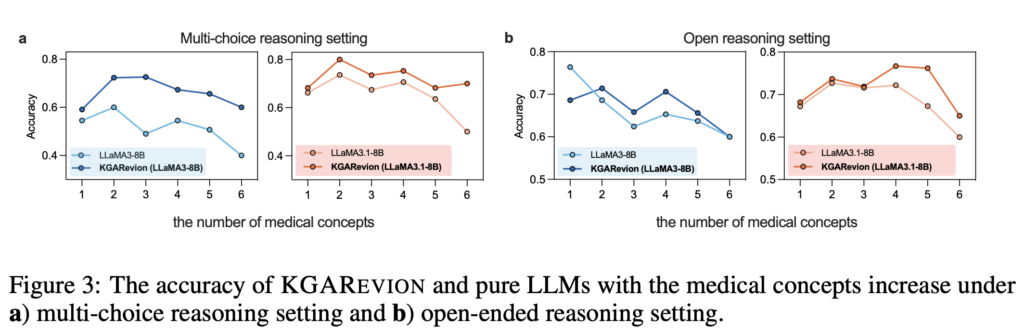

KGARevion shows strong accuracy and reliability on complex medical questions. According to the paper’s abstract, it improves average accuracy by over 5.2% on four gold-standard medical QA datasets and by up to 10.4% on newly introduced datasets. Notably, KGARevion performs consistently across different question formats, including Multi-Choice Reasoning and Open-Ended Reasoning. The graph above highlights its stable performance across both types of questions.


KGARevion addresses the multi-step reasoning and verification errors that traditional LLM and RAG-based models often struggle to overcome, which matters most in a complex, high-stakes field like healthcare. By generating triplets, verifying them against a knowledge graph, and correcting errors, it produces more accurate and reliable answers, and its performance across multiple medical QA datasets underscores its significant potential.

This year, ‘agents’ have emerged as a key focus in AI, and KGARevion stands out as a promising example of their growing impact.