Neural networks come in many categories, each with its own topology and architecture, but the underlying concept is the same for all of them: they are loosely modeled on the structure and behavior of the human brain. In this tutorial we focus on two types, Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). We also cover the different kinds of datasets that are available online and that you can use in your own CNN and RNN projects. So, let’s begin!

What is a Neural Network?

Neural networks form the basis of Deep Learning, which in turn is a subfield of Machine Learning. As mentioned above, the algorithms in these networks are inspired by the human brain. A neural network takes in data (input), trains itself to recognize the patterns within that data (learning), and then uses what it has learned (the learned weights) to predict outputs for new data that is similar in nature to the training data (output).

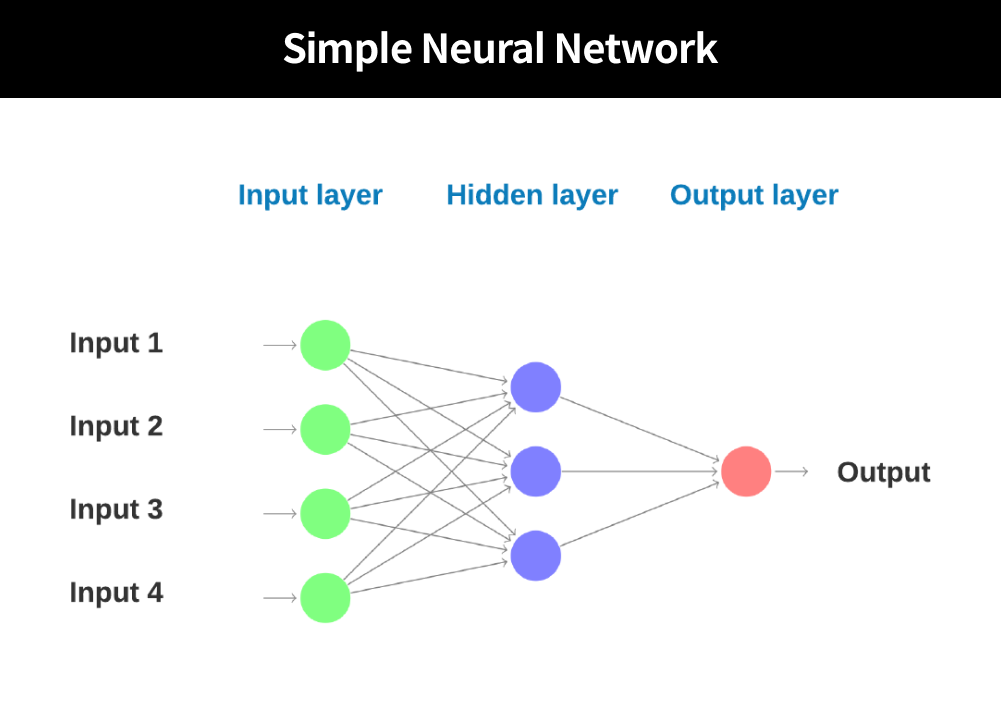

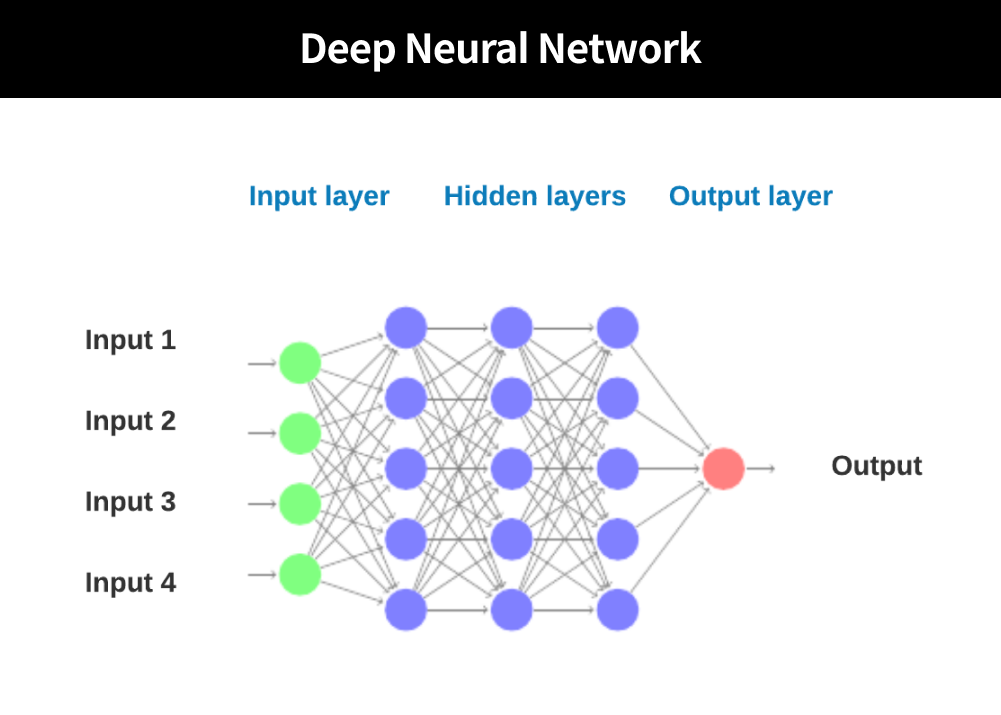

Neural networks are made up of layers of neurons, where each neuron acts as a core processing unit of the network. A simple neural network has three layers:

- The input layer receives the input data.

- The hidden layer exists between the input and the output layers and performs the computation required by the network.

- The output layer predicts the final output.
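The flow of data through these three layers can be sketched in a few lines of plain Python. This is only an illustration: the weights and biases below are hand-picked, hypothetical values rather than trained ones, the sigmoid activation is an assumed choice, and the helper names `dense` and `forward` are made up for this example.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: every neuron computes a weighted sum
    of all inputs, adds its bias, and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical, untrained parameters chosen only for illustration.
hidden_w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]  # 3 hidden neurons, 2 inputs each
hidden_b = [0.0, 0.1, -0.1]
output_w = [[0.3, -0.6, 0.9]]                      # 1 output neuron, 3 hidden inputs
output_b = [0.05]

def forward(x):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(x, hidden_w, hidden_b)
    return dense(hidden, output_w, output_b)

prediction = forward([1.0, 2.0])
print(prediction)
```

Training would adjust `hidden_w`, `hidden_b`, `output_w`, and `output_b` so that the predictions match known examples; that step is left out here.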

A visual comparison of a simple neural network and a deep neural network

Neural networks can be made more complex by adding more hidden layers. A network with more than three layers, that is, one input layer, several hidden layers, and one output layer, is known as a Deep Neural Network. These networks underpin Deep Learning, where the model trains itself to process data and make predictions from it.

So how does a Deep Neural Network work? One quick way to understand it is the handwritten digit recognition example in the MNIST tutorial from our previous article. We won’t go into detail about that tutorial here, but be sure to check it out!

What are the Different Types of Neural Networks?

As mentioned earlier, there are many kinds of neural networks that differ in topology and structure and are used for different purposes. Common examples include Perceptrons, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Hopfield networks. In this tutorial, we shed light on CNNs and RNNs.

Convolutional Neural Networks (CNNs):

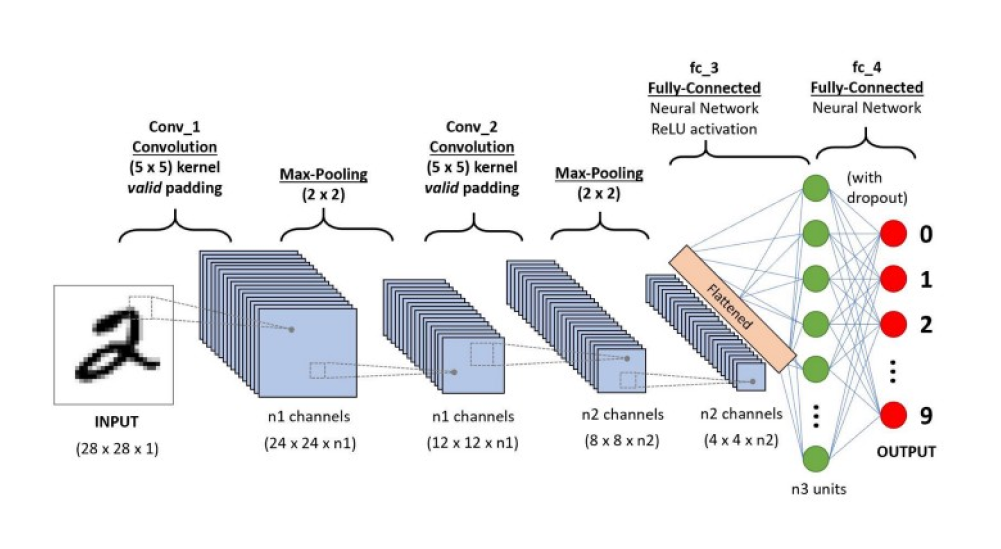

CNNs differ from other deep neural networks in that they treat data as spatial. They are most commonly applied to image processing problems because they can detect patterns in images, but they can also be used for other types of input such as audio. CNNs have the following layers:

- Convolution

- Activation (typically ReLU)

- Pooling

- Fully Connected

CNNs compare images piece by piece. The pieces that a CNN looks for are called features. Because a CNN looks for rough feature matches in roughly the same positions in two or more images, it detects similarities between images much better than schemes that try to match whole images.

What sets a CNN apart from other deep neural networks is that its input data is fed through convolutional layers. In these layers, a neuron is not connected to every neuron in the previous layer. Instead, it is connected only to a small group of neighboring neurons, so each neuron concerns itself with one local region of the input. This connectivity pattern is inspired by the organization of the animal visual cortex. It greatly reduces the number of connections in the network and lets the network preserve the spatial structure of the data.
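To make the local connectivity concrete, here is a minimal sketch of a 2D convolution in plain Python. Each output value depends only on a small patch of the input, not on the whole image. The function name `conv2d`, the tiny 4x4 image, and the hand-picked vertical-edge kernel are assumptions made for this illustration; real CNN libraries learn the kernel values during training and use far more optimized code.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).
    Each output value is the weighted sum of one local patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 4x4 image whose right half is bright (1) and left half is dark (0).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map is non-zero only in the middle column, which is where the edge sits in the image, so the spatial position of the feature is preserved.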

In the ReLU layer, every negative value in the filtered images is replaced by 0, which introduces non-linearity into the network. In other words, the ReLU function passes positive inputs through unchanged and outputs 0 whenever the input is below 0. Next is the Pooling layer, in which the image stack is shrunk to a smaller size. The final layer is the fully connected layer, where the actual classification happens. Here, the filtered and shrunken images are put together into a single list and the predictions are made.
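The three steps described above can be sketched in plain Python as follows. The text does not say which kind of pooling is used, so this example assumes max pooling over 2x2 windows, which is a common choice. The function names and the sample `filtered` values are made up for the illustration.

```python
def relu(feature_map):
    """Replace every negative value with 0; keep positive values as-is."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Shrink the map by keeping only the largest value in each
    non-overlapping size x size window (assumed pooling strategy)."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled

def flatten(feature_map):
    """Put all values into a single list for the fully connected layer."""
    return [v for row in feature_map for v in row]

# Hypothetical output of a convolution step.
filtered = [
    [ 1, -2,  3,  0],
    [-1,  5, -3,  2],
    [ 4, -6,  0, -1],
    [-2,  1, -4, -5],
]

activated = relu(filtered)    # negatives become 0
pooled = max_pool(activated)  # 4x4 shrinks to 2x2
features = flatten(pooled)    # single list fed to the classifier

print(pooled)    # [[5, 3], [4, 0]]
print(features)  # [5, 3, 4, 0]
```

The flattened list is what a fully connected layer would take as its input to produce the final class scores.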

Recurrent Neural Networks (RNNs):

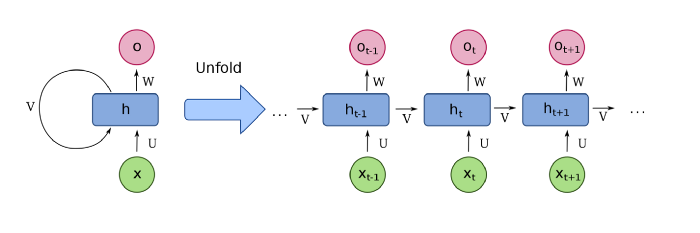

RNNs are a type of neural network designed to recognize patterns in sequences of data, such as text, handwriting, and spoken words. Apart from language modeling and translation, RNNs are also used in speech recognition and image captioning.

RNNs are unique in that every neuron in a hidden layer receives an input with a specific delay in time. This is particularly useful when information from earlier steps is needed to make a decision at the current step. For example, to predict the next word in a sentence, you first need to know the words that came before it. To access previous information, RNNs contain loops that allow that information to persist. You can think of an RNN as multiple copies of the same network, where each copy passes a message to the next copy.
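The loop can be sketched with a single-neuron recurrent cell in plain Python. This is a simplified illustration, not a full RNN: the scalar weights `w_x`, `w_h`, and `b` are hypothetical hand-picked values, the tanh activation is an assumed choice, and the function names are made up for this example.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: combine the current input with the previous
    hidden state to produce the new hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Apply the same cell, with the same weights, at every time step."""
    h = 0.0  # initial hidden state: nothing has been seen yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Two sequences that differ only in their first element.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)
print(states_b)
```

The last two inputs of both sequences are identical, yet the final hidden state of `states_a` is still non-zero because the first input persists through the loop. The same three parameters are reused at every step, so the sequence can be any length without the model growing.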

In RNNs, the model size does not increase with the input size: the network can process inputs of any length and shares its weights across time. RNNs have some drawbacks as well. Firstly, computation is slow, because each step depends on the result of the previous step. Also, basic RNNs have difficulty remembering information from far back in a sequence, and they cannot consider any future input when computing the current state.

Training Datasets for Deep Learning

Many training datasets are available online for your Deep Learning tasks and projects, including image datasets, natural language processing datasets, and audio/speech datasets. Some examples include:

- MNIST Dataset can be used for handwritten digit recognition. It consists of 60,000 training samples and 10,000 test samples of handwritten digits.

- MS COCO is a large-scale object detection, segmentation, and captioning dataset.

- IMDB Reviews is a dataset of movie reviews used for binary sentiment classification.

- WordNet is a large lexical database of English synsets, which are groups of synonyms that each describe a distinct concept.

- Million Song Dataset is a huge collection of audio features and metadata for a million popular music tracks.

Apart from finding training datasets online, you can also use the ready-to-use datasets offered by Machine Learning libraries such as TensorFlow and Scikit-learn. These libraries let you download preprocessed datasets and load them directly into your programs.
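As a small illustration, the sketch below loads a dataset that ships with Scikit-learn. It assumes Scikit-learn is installed and uses `load_digits`, a small set of 8x8 handwritten digit images bundled with the library. Note that this is not the full MNIST dataset described above, just a lightweight stand-in that needs no download.

```python
from sklearn.datasets import load_digits

# Load the small handwritten digits dataset bundled with Scikit-learn.
digits = load_digits()

X = digits.data    # each row is one 8x8 image flattened to 64 pixel values
y = digits.target  # the digit (0-9) that each image represents

print(X.shape)  # (1797, 64)
print(y[:10])
```

TensorFlow offers a similar shortcut for the full MNIST dataset through `tf.keras.datasets.mnist.load_data()`, which downloads the data on first use and returns the training and test splits.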

Finding a dataset that suits your needs is often difficult, and sometimes not even possible. The data is hard to gather, and it is just as hard to preprocess for your specific purpose. Often, it is more efficient to find a service that does this laborious work for you. For this, we could be your perfect solution!