The release of text-to-image models in 2022 marked the mainstream adoption of generative AI. Models like Midjourney and DALL-E generated excitement with their capabilities and have since paved the way for models that generate not only images but also videos and 3D content. Today, we introduce Marigold, a model built on Stable Diffusion that has achieved state-of-the-art (SOTA) results in depth estimation.

Depth Estimation Models

For those new to computer vision, the term “depth estimation” may sound unfamiliar. In this context, depth refers to the distance from the camera (or viewer) to each point in a scene. Although photos are two-dimensional, we can still judge how far away objects are and which ones are closer than others. For example, objects of the same size appear larger when they are closer and smaller when they are farther away. Depth estimation is the computer vision task of recovering this distance information about a three-dimensional scene from two-dimensional images, typically as a depth map that assigns a depth value to every pixel.

This technology finds applications in various industries. For example, some self-driving systems use depth estimation to perceive surrounding objects with cameras alone, without additional depth sensors such as LiDAR. A camera image lets the car identify objects, but it does not directly tell the car how far away they are. Depth estimation fills this gap by computing those distances from the 2D image.

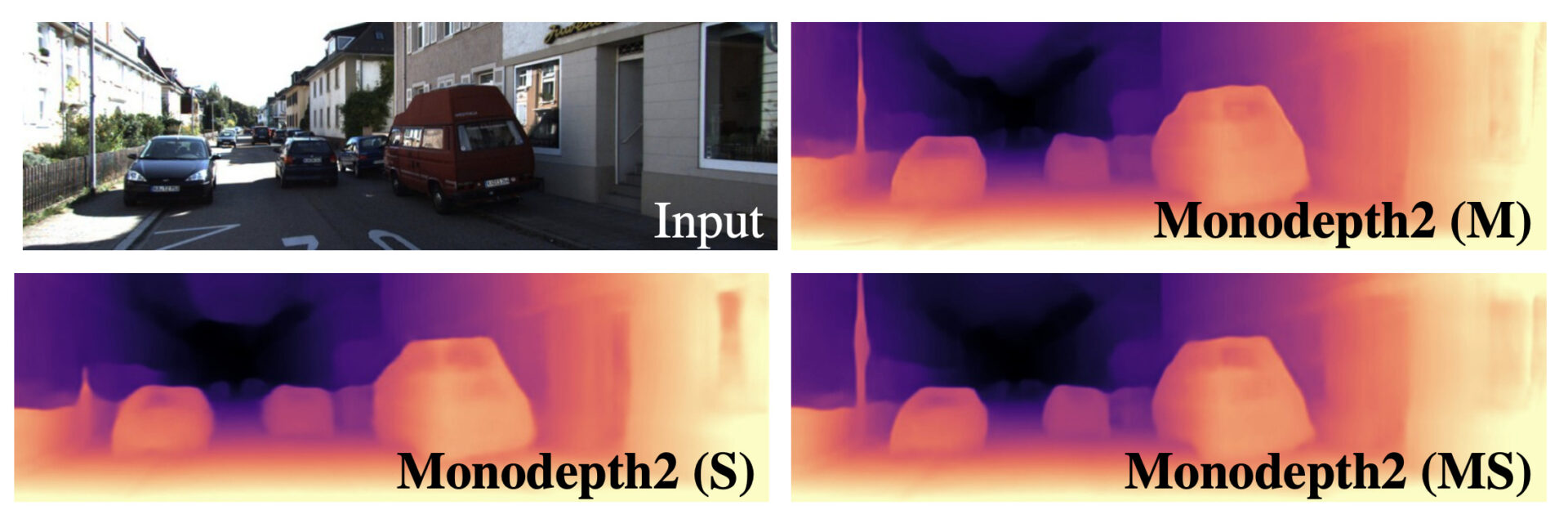

Source: Digging Into Self-Supervised Monocular Depth Estimation (Godard et al., 2018)

Binocular depth estimation

Let’s dive deeper into depth estimation technology. How do humans perceive the distance of objects? We see the world through two eyes, each capturing slightly different visual information, and our brains process these differences to perceive depth. Computer vision works similarly: two or more cameras capture the same scene from slightly different positions. By measuring how far each point shifts between the images (its disparity), computers can estimate its depth, a method known as binocular (or stereo) depth estimation.
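For a rectified pair of cameras, this comparison reduces to simple triangulation: a point that shifts by d pixels between the two images lies at depth Z = f·B/d, where f is the focal length in pixels and B is the distance between the cameras (the baseline). The sketch below assumes an idealized, rectified pinhole setup; the focal length and baseline are made-up illustration values, not taken from any real system.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Return the depth in metres of a point seen by a rectified stereo pair.

    Uses Z = f * B / d, where f is the focal length in pixels, B is the
    baseline (distance between the two cameras) in metres, and d is the
    disparity: how many pixels the point shifts between the left and right images.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera rig: 700 px focal length, cameras 0.12 m apart.
for d in (84.0, 42.0, 8.4):
    depth = depth_from_disparity(d, focal_length_px=700.0, baseline_m=0.12)
    print(f"disparity {d:4.1f} px -> depth {depth:.1f} m")
```

Depth is inversely proportional to disparity: halving the disparity doubles the depth, so distant objects, which shift only a few pixels, are measured less precisely than nearby ones.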

Monocular depth estimation

Interestingly, humans can also gauge distance with just one eye. We rely on the apparent size of objects and our accumulated experience to make these judgments. Computers mimic this process as well. When they estimate depth using a single 2D image, the technique is referred to as monocular depth estimation.
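The apparent-size cue can be made concrete with the pinhole camera model: an object of known real height H that spans h pixels in an image taken with focal length f (in pixels) lies at roughly Z = f·H/h. This is only an illustration of the single-image cue described above, assuming the object's true size is known; learned monocular models such as Marigold are given neither object sizes nor focal lengths and must pick up cues like this implicitly from data. The function name and the example values below are hypothetical.

```python
def distance_from_apparent_size(real_height_m: float, image_height_px: float, focal_length_px: float) -> float:
    """Estimate distance with the pinhole camera model: Z = f * H / h.

    Assumes the object's real height H is known and that the object is
    roughly upright and facing the camera.
    """
    if image_height_px <= 0:
        raise ValueError("image height must be positive")
    return focal_length_px * real_height_m / image_height_px


# Hypothetical example: a person assumed to be 1.7 m tall, camera focal length 700 px.
for h in (340, 170, 85):
    z = distance_from_apparent_size(1.7, h, 700.0)
    print(f"{h:3d} px tall -> about {z:.1f} m away")
```

The same person appearing half as tall in the image is estimated to be twice as far away, which is exactly the “smaller when farther” intuition.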

The Principle and Structure of Marigold Using Stable Diffusion

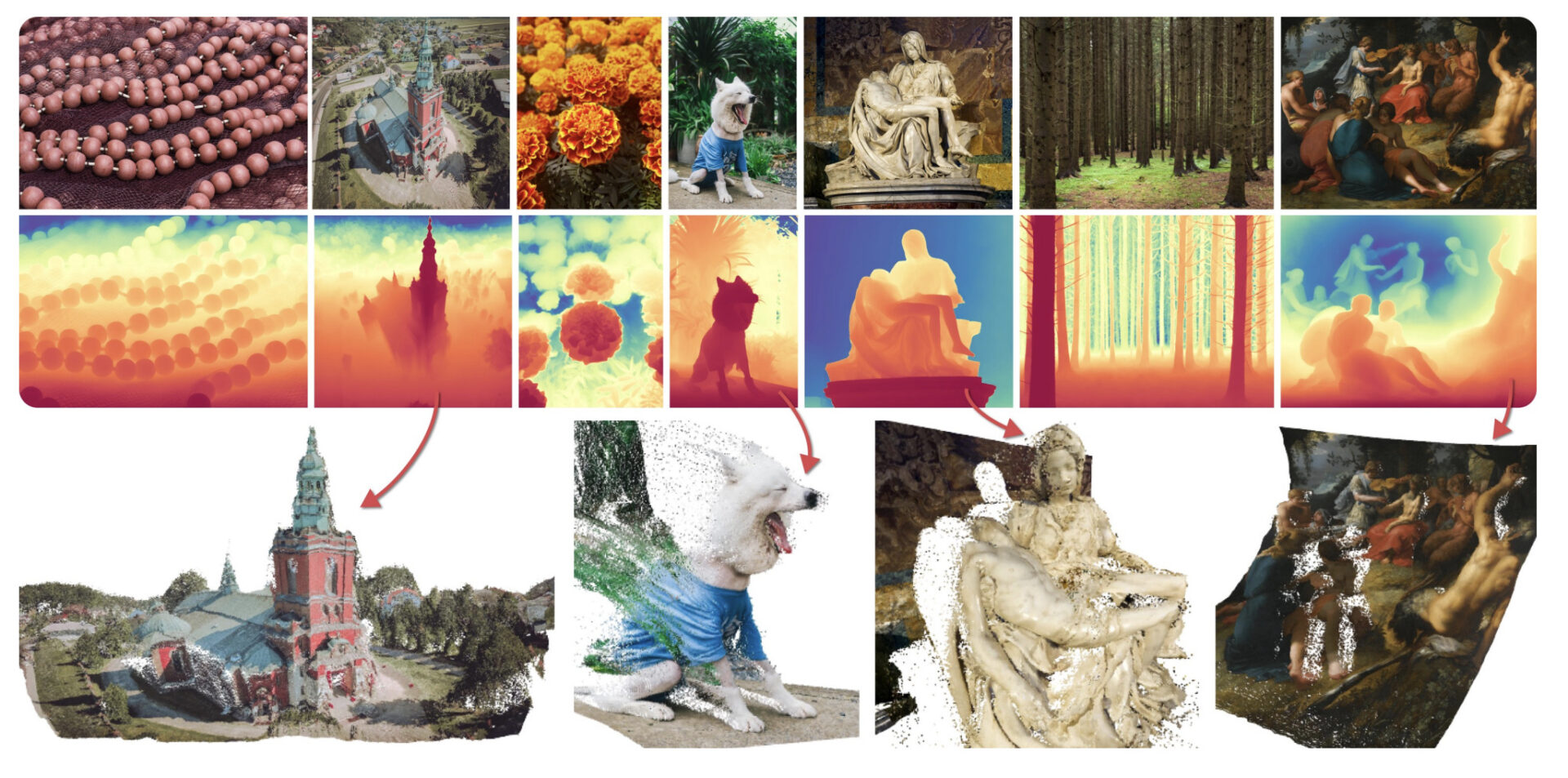

Source: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation (Ke et al., 2023)

Marigold focuses on monocular depth estimation: it generates a depth map from a single 2D image. Because a single image offers no second viewpoint to compare against, the model needs extensive prior knowledge about the visual world, such as what familiar objects look like, where one object ends and another begins, how objects separate from the background, and how apparent size relates to relative depth.

The Marigold model was introduced in the paper Marigold: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation, published in December 2023. Unlike traditional methods, Marigold brings diffusion-based models, which are typically used for image generation, into the field of depth estimation. The researchers’ idea was as follows:

If image generation models have already learned from vast numbers of high-quality images spanning many domains on the internet, could that knowledge be applied to depth estimation?

Marigold therefore builds on the pretrained Stable Diffusion model. Because Stable Diffusion was trained to generate images rather than depth maps, it must be fine-tuned before it can perform depth estimation.

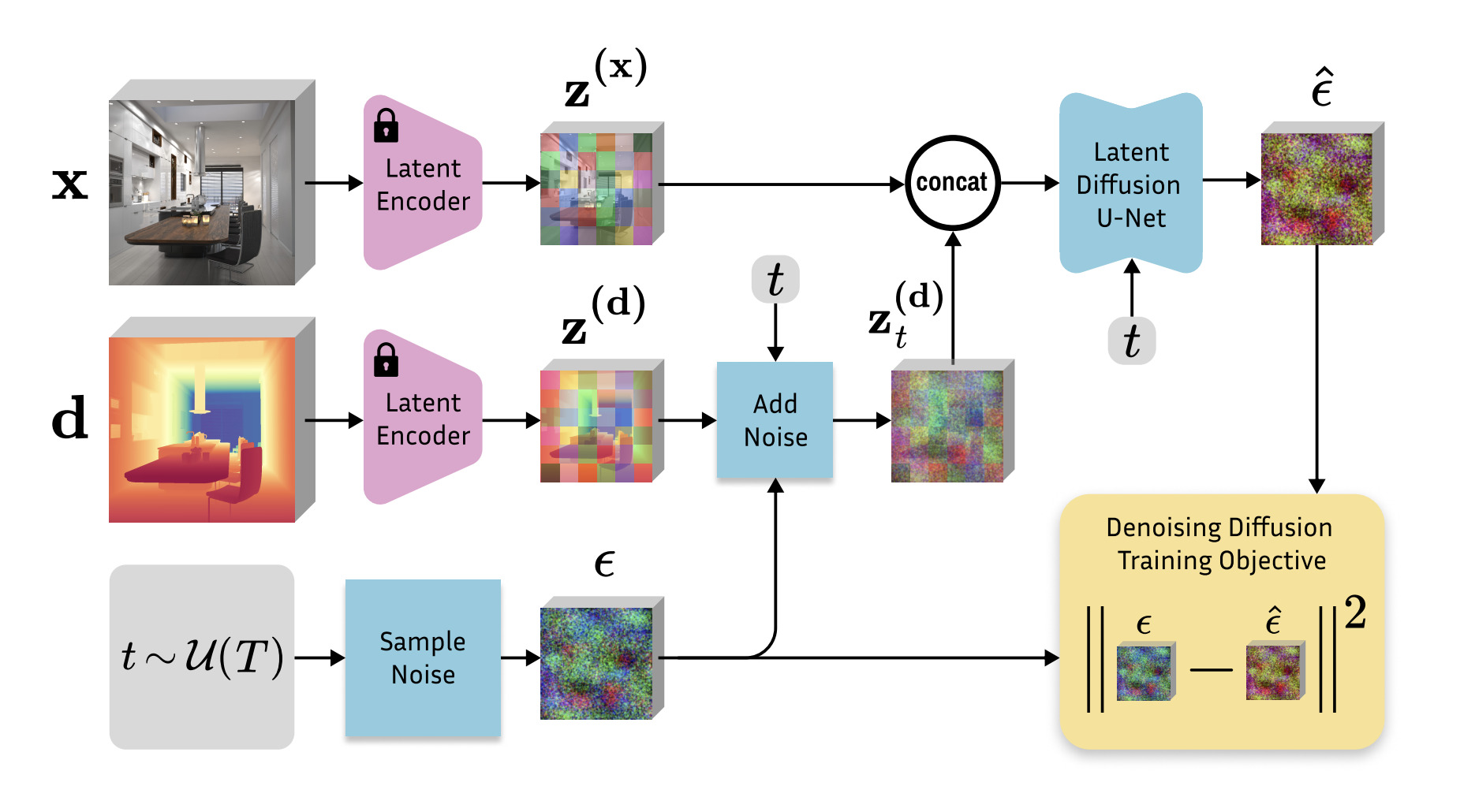

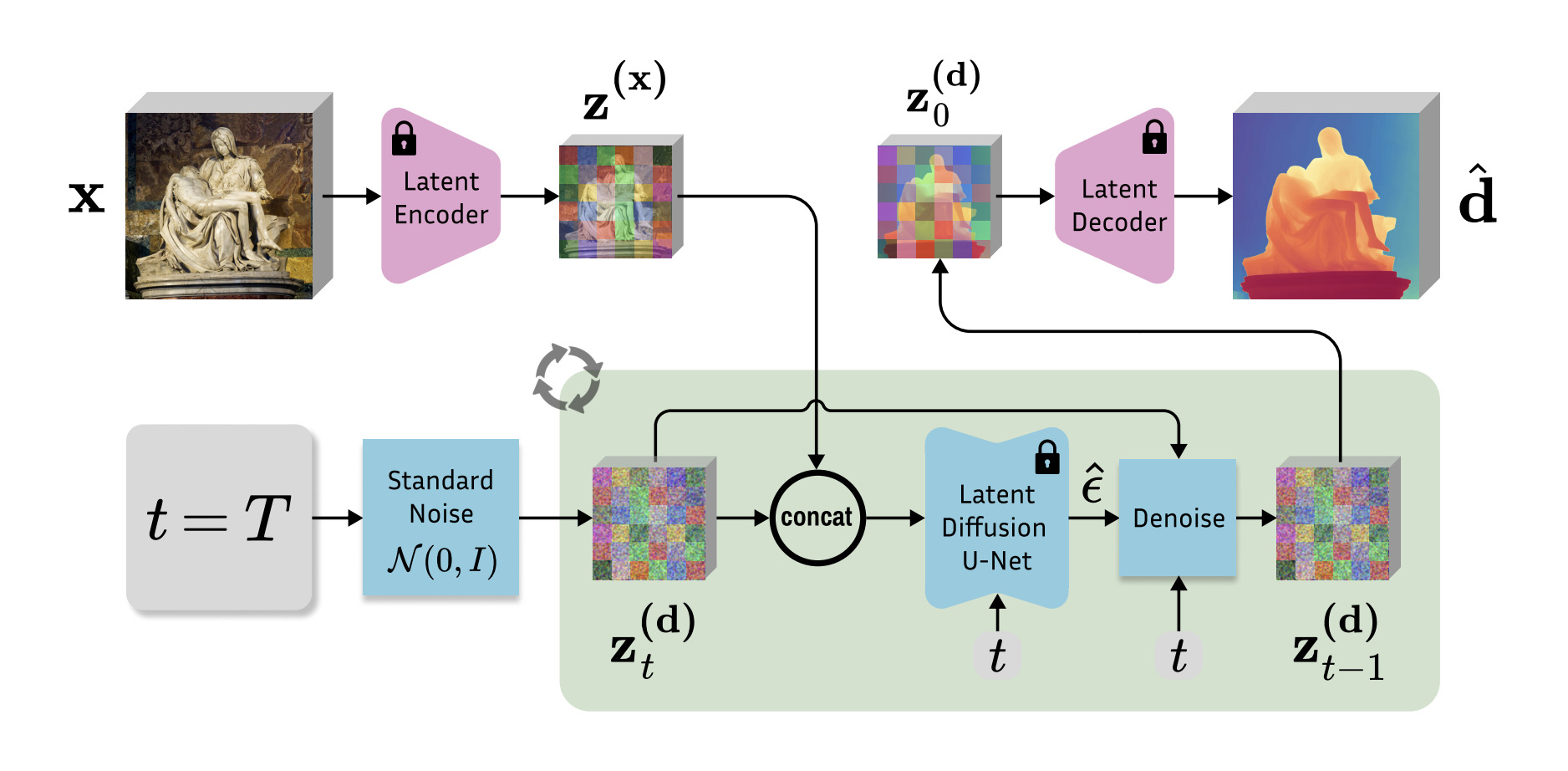

Source: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation (Ke et al., 2023)

Fine-tuning structure

The fine-tuning structure, illustrated in the image above, operates in Stable Diffusion’s latent space. A frozen VAE encodes both the real image and its depth map into latent representations. Noise is added to the depth latent, and the noisy depth latent is concatenated with the image latent. The diffusion model’s U-Net is then trained to remove this noise, which amounts to learning to reconstruct the depth map conditioned on the image. In this way, the latent diffusion model that forms the basis of Stable Diffusion is adapted specifically for generating depth maps.
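The data flow of one fine-tuning step can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not Marigold’s implementation: it assumes an epsilon-prediction objective and a linear DDPM noise schedule (Marigold’s actual schedule and parameterization may differ), random arrays stand in for the VAE latents, and `toy_denoiser` is a hypothetical placeholder for the U-Net. What it shows is that noise is applied to the depth latent only, the image latent is concatenated as conditioning, and the loss compares predicted with true noise.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed DDPM-style linear noise schedule with 1,000 steps (illustrative values).
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alpha_bars = np.cumprod(1.0 - betas)  # fraction of the clean signal kept at each step


def add_noise(z0: np.ndarray, noise: np.ndarray, t: int) -> np.ndarray:
    """Forward diffusion: z_t = sqrt(abar_t) * z0 + sqrt(1 - abar_t) * noise."""
    return np.sqrt(alpha_bars[t]) * z0 + np.sqrt(1.0 - alpha_bars[t]) * noise


def toy_denoiser(unet_input: np.ndarray, t: int) -> np.ndarray:
    """Hypothetical stand-in for the U-Net being fine-tuned.

    Takes 8 channels (4 image-latent + 4 noisy depth-latent) and returns a
    4-channel noise estimate. This placeholder always predicts zero noise.
    """
    assert unet_input.shape[0] == 8
    return np.zeros_like(unet_input[4:])


def training_loss(image_latent: np.ndarray, depth_latent: np.ndarray, denoiser) -> float:
    t = int(rng.integers(0, T))                      # random diffusion timestep
    noise = rng.standard_normal(depth_latent.shape)  # noise is added to the depth latent only
    noisy_depth = add_noise(depth_latent, noise, t)
    unet_input = np.concatenate([image_latent, noisy_depth], axis=0)  # channel-wise concatenation
    predicted_noise = denoiser(unet_input, t)
    return float(np.mean((predicted_noise - noise) ** 2))  # MSE between predicted and true noise


# Random arrays standing in for VAE latents of an RGB image and its depth map.
image_latent = rng.standard_normal((4, 32, 32))
depth_latent = rng.standard_normal((4, 32, 32))
print(training_loss(image_latent, depth_latent, toy_denoiser))
```

Because two 4-channel latents are concatenated, the denoiser in this sketch receives 8 input channels instead of Stable Diffusion’s usual 4. The placeholder that always predicts zero noise scores a loss of about 1.0 (the variance of the noise); training would push this value down.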

To improve training, the researchers use only synthetic data instead of real-world datasets with measured depth. Synthetic scenes provide complete, dense depth values for every pixel, avoiding the physical limitations of real depth sensors, such as missing or noisy measurements, that often reduce the accuracy of real-world ground truth.

Source: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation (Ke et al., 2023)

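Once fine-tuning is complete, inference follows a similar process. The input image is encoded into latent space, while the depth latent starts out as pure noise. Conditioned on the image latent, the model removes this noise step by step, and the VAE decoder turns the final latent into a high-resolution depth map.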
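The iterative denoising described above can be sketched as a sampling loop. This sketch assumes a deterministic DDIM-style update and a linear noise schedule; the step count, the schedule values, and `oracle_denoiser` (a hypothetical denoiser that already knows the true depth latent, used only to check that the loop is correct) are illustrative assumptions, and the final VAE decode step is omitted.

```python
import numpy as np

# Assumed linear DDPM-style noise schedule (illustrative values).
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alpha_bars = np.cumprod(1.0 - betas)


def sample_depth_latent(image_latent, denoiser, num_steps=10, seed=0):
    """Deterministic DDIM-style sampling of a depth latent conditioned on an image latent."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(image_latent.shape)               # the depth latent starts as pure noise
    timesteps = np.linspace(T - 1, 0, num_steps).astype(int)  # 999, 888, ..., 0
    for i, t in enumerate(timesteps):
        eps = denoiser(np.concatenate([image_latent, z], axis=0), t)  # predicted noise
        # Estimate of the clean depth latent implied by the predicted noise.
        z0_hat = (z - np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alpha_bars[t])
        if i + 1 < len(timesteps):
            t_prev = timesteps[i + 1]
            # Move to the next, less noisy timestep, reusing the predicted noise.
            z = np.sqrt(alpha_bars[t_prev]) * z0_hat + np.sqrt(1.0 - alpha_bars[t_prev]) * eps
        else:
            z = z0_hat
    return z  # a VAE decoder would turn this latent into the depth map (not shown)


# Hypothetical oracle denoiser that knows the true depth latent, used only to check the loop.
rng = np.random.default_rng(1)
true_depth_latent = rng.standard_normal((4, 32, 32))
image_latent = rng.standard_normal((4, 32, 32))


def oracle_denoiser(unet_input, t):
    z_t = unet_input[4:]  # the noisy depth-latent channels
    return (z_t - np.sqrt(alpha_bars[t]) * true_depth_latent) / np.sqrt(1.0 - alpha_bars[t])


result = sample_depth_latent(image_latent, oracle_denoiser)
print(np.abs(result - true_depth_latent).max())
```

With a perfect denoiser the loop recovers the target latent exactly; with a trained U-Net, each step refines an imperfect estimate. Using fewer steps makes inference faster.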

Marigold set a new benchmark in depth estimation, achieving state-of-the-art results at the time of its publication with strong zero-shot performance: it remains accurate on datasets it never saw during training. The depth maps it generates outline object boundaries sharply and align closely with human perception.
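Zero-shot accuracy of this kind is commonly reported with absolute relative error (AbsRel, lower is better) and δ1 accuracy (the share of pixels within a factor of 1.25 of the ground truth, higher is better), both of which appear in the Marigold paper’s comparisons. Because Marigold predicts affine-invariant depth, meaning depth that is correct only up to an unknown scale and shift, each prediction is first aligned to the ground truth with least squares. The sketch below is a simplified illustration: it assumes dense, fully valid ground truth and aligns in depth space, whereas real benchmarks also mask invalid pixels and may follow different alignment conventions. The example data are made up.

```python
import numpy as np


def align_scale_shift(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Fit scale s and shift b minimizing ||s * pred + b - gt||^2; return the aligned prediction."""
    A = np.stack([pred.ravel(), np.ones(pred.size)], axis=1)
    (scale, shift), *_ = np.linalg.lstsq(A, gt.ravel(), rcond=None)
    return scale * pred + shift


def abs_rel(pred: np.ndarray, gt: np.ndarray, eps: float = 1e-6) -> float:
    """Absolute relative error: mean of |pred - gt| / gt."""
    pred = np.maximum(pred, eps)  # guard against non-positive depths after alignment
    return float(np.mean(np.abs(pred - gt) / gt))


def delta1(pred: np.ndarray, gt: np.ndarray, threshold: float = 1.25, eps: float = 1e-6) -> float:
    """Fraction of pixels where max(pred / gt, gt / pred) < threshold."""
    pred = np.maximum(pred, eps)
    ratio = np.maximum(pred / gt, gt / pred)
    return float(np.mean(ratio < threshold))


# Hypothetical example: ground truth from 1 m to 10 m, and a prediction that is
# correct only up to an unknown scale and shift (here, normalized to [0, 1]).
gt = np.linspace(1.0, 10.0, 100).reshape(10, 10)
pred = (gt - 1.0) / 9.0
aligned = align_scale_shift(pred, gt)
print(abs_rel(aligned, gt), delta1(aligned, gt))
```

Without the alignment step, a prediction like this one would score poorly even though it captures the scene’s depth ordering and proportions perfectly; alignment lets affine-invariant models be compared fairly against metric ground truth.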

The paper’s use of the term “repurposing” highlights a broader implication: the underlying principles of diffusion models can be applied across different tasks. This demonstrates how advancements in one model can pave the way for breakthroughs in other fields. While diffusion models are renowned for their performance in image generation, researchers are now exploring their potential in text generation. Even if these initial applications don’t yield immediate breakthroughs, the foundational research can drive significant progress in other domains over time.