Let’s begin today’s letter by stepping once again into a colossal library. Surrounded by endless shelves of books, we pose the same question as before: “What are the key trends in the tech industry?” In our previous Tech Letter, we likened Graph RAG to a ‘smart librarian’ that quickly finds and summarizes relevant books and resources.

Today, we’ll take a closer look at ToG (Think-on-Graph), which plays the role of the library’s ‘scholar.’ ToG doesn’t stop at retrieving resources—it analyzes the relationships between them, clearly presenting evidence and sources for its findings. Moreover, it offers tools to trace and correct misinformation when detected. By doing so, ToG goes beyond providing information, delivering deep reasoning and responsible, well-founded answers.

Overcoming LLM Limitations

Hallucination: Incorrect information or logically inconsistent answers

Lack of Depth in Reasoning: LLMs struggle with deep reasoning tasks that require following complex logic or identifying implicit relationships, often leading to confusion in multi-step knowledge processes

To overcome these challenges, researchers propose leveraging external Knowledge Graphs (KGs), whose structured data provides clarity and transparency. Traditional approaches such as LLM ⊕ KG (used in Graph RAG and similar systems) follow a “loosely coupled” paradigm in which the KG serves as a simple lookup tool. The limitation of this approach is that answer quality becomes overly dependent on the completeness of the KG.

To address this, researchers introduce a “tightly coupled” paradigm, LLM ⊗ KG. What’s the difference?

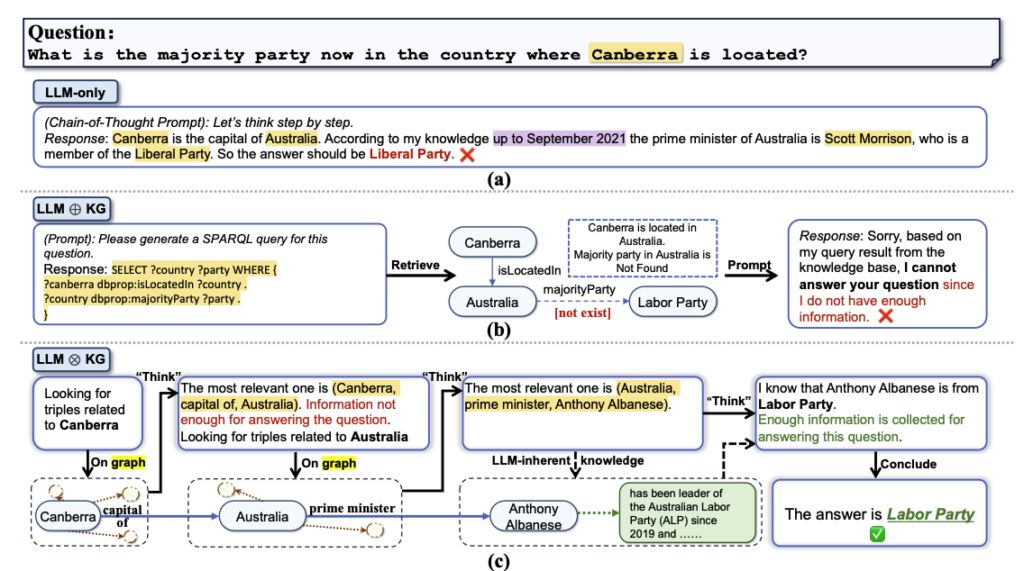

Representative workflow of three LLM reasoning paradigms

- LLM-only answers from the model’s internal knowledge alone, without consulting external data.

- LLM ⊕ KG allows the LLM to use KG as a supplementary lookup tool, but its performance is limited by the quality of the KG.

- LLM ⊗ KG (the ToG approach) enables the LLM and KG to collaborate, allowing for deep reasoning and error correction.

Let’s take a quick look at how ToG, based on the LLM ⊗ KG paradigm, works through the diagram below.

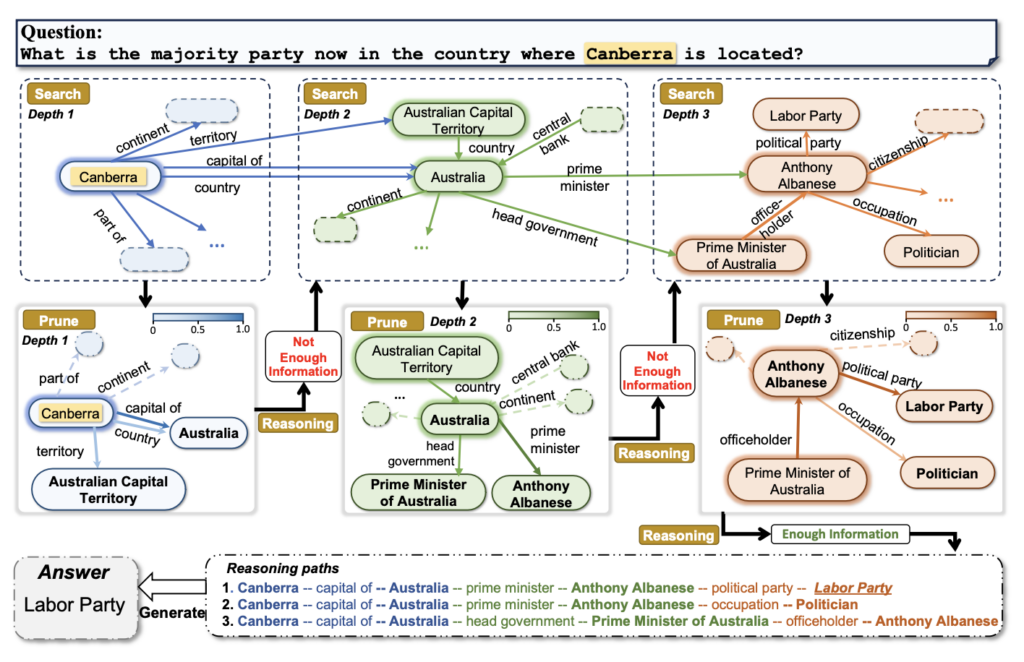

Example workflow of ToG

Does it seem complex? It’s actually quite simple.

- When ToG receives a question, it first identifies the core keywords. For example, in the question “What is the ruling party of the country where Canberra is located?”, the keywords identified would be “Canberra,” “country,” and “ruling party.”

- Next, ToG incrementally explores the knowledge graph for data related to these keywords. First, it finds that “Canberra is the capital of Australia,” then uses this information to locate the next step: “The Prime Minister of Australia is Anthony Albanese.”

- Finally, it retrieves the information that “Anthony Albanese belongs to the Labor Party” and deduces the final answer: “Labor Party.”

By connecting and reasoning through data step by step, ToG can transparently demonstrate its reasoning process.
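The Canberra example can be sketched in a few lines of Python. This is only a toy illustration, not ToG's actual code: the graph is a hand-written dictionary, the relation names (`capital_of`, `prime_minister`, `member_of_party`) are invented for this sketch, and the relation sequence is hard-coded, whereas in ToG the LLM decides which relation to follow at each step.

```python
# Toy knowledge graph: (head entity, relation) -> tail entity.
# The facts mirror the example in the text; the relation names are made up.
TOY_KG = {
    ("Canberra", "capital_of"): "Australia",
    ("Australia", "prime_minister"): "Anthony Albanese",
    ("Anthony Albanese", "member_of_party"): "Labor Party",
}


def follow_path(kg, start, relations):
    """Walk the graph one relation at a time and record every triple used."""
    entity = start
    trace = []
    for relation in relations:
        key = (entity, relation)
        if key not in kg:
            raise KeyError(f"no triple for {key}")
        next_entity = kg[key]
        trace.append((entity, relation, next_entity))
        entity = next_entity
    return entity, trace


# Hard-coded hops; in ToG the LLM would choose them step by step.
answer, trace = follow_path(
    TOY_KG, "Canberra", ["capital_of", "prime_minister", "member_of_party"]
)
for head, relation, tail in trace:
    print(f"{head} --{relation}--> {tail}")
print("Answer:", answer)
```

Because every hop is stored in `trace`, the answer arrives together with the chain of facts that produced it, which is the transparency the example is meant to show.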

So, What's New?

ToG is a representative implementation of the new LLM ⊗ KG paradigm, achieving a “tight integration” between LLMs and knowledge graphs. It operates through three main stages:

1. Initialization

- Identifies the topic entity related to the question and sets the initial exploration path. For example, for the question “What is the ruling party of the country where Canberra is located?”, the topic entity would be “Canberra.”

2. Exploration

- Dynamically extracts relevant information from the knowledge graph using beam search, which considers multiple paths simultaneously and retains only the top N most likely paths.

- During this process, pruning is applied to eliminate irrelevant paths and ensure the exploration remains efficient and focused on the most promising routes.

3. Reasoning

- Evaluates whether the reasoning paths gathered so far are sufficient to answer the question. If they are, the final answer is generated from those paths.

- If not, the process alternates between exploration and reasoning until a complete inference path is constructed.

- If the maximum depth is reached without resolution, ToG falls back on the LLM’s internal knowledge to generate an answer.
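The three stages can be sketched as a small beam search. This is a minimal sketch under stated assumptions, not the researchers' implementation: in ToG the LLM scores candidate paths and judges sufficiency, while here two hypothetical stand-ins (`toy_score` and `toy_is_sufficient`) play those roles, and `GRAPH`, `RELEVANCE`, and all relation names are invented for illustration.

```python
# Hypothetical toy graph: entity -> list of (relation, neighbor).
GRAPH = {
    "Canberra": [("capital_of", "Australia"),
                 ("located_in", "Australian Capital Territory")],
    "Australia": [("prime_minister", "Anthony Albanese"),
                  ("continent", "Oceania")],
    "Anthony Albanese": [("member_of_party", "Labor Party"),
                         ("born_in", "Sydney")],
}

# Stand-in for the LLM's relevance judgment (an assumption of this sketch).
RELEVANCE = {"capital_of": 1.0, "prime_minister": 1.0, "member_of_party": 1.0}


def toy_score(path):
    """Score a path by summing the hand-written relevance of its relations."""
    return sum(RELEVANCE.get(relation, 0.0) for _, relation, _ in path)


def toy_is_sufficient(path):
    """Pretend the question is answered once a party-membership triple is found."""
    return bool(path) and path[-1][1] == "member_of_party"


def think_on_graph(topic_entity, score, is_sufficient, beam_width=2, max_depth=3):
    # 1. Initialization: a single empty path anchored at the topic entity.
    beams = [(topic_entity, [])]
    for _ in range(max_depth):
        # 2. Exploration: extend every kept path by one hop.
        candidates = []
        for entity, path in beams:
            for relation, neighbor in GRAPH.get(entity, []):
                candidates.append((neighbor, path + [(entity, relation, neighbor)]))
        if not candidates:
            break
        # Pruning: keep only the top-N paths by score.
        candidates.sort(key=lambda c: score(c[1]), reverse=True)
        beams = candidates[:beam_width]
        # 3. Reasoning: stop as soon as one kept path is judged sufficient.
        for entity, path in beams:
            if is_sufficient(path):
                return entity, path
    # Nothing sufficient within max_depth: the caller would fall back
    # to the LLM's internal knowledge.
    return None, []


answer, path = think_on_graph("Canberra", toy_score, toy_is_sufficient)
print(answer, path)
```

The `beam_width` parameter corresponds to the "top N" paths kept after each round of pruning, and `max_depth` is the cutoff after which the fallback to the LLM's own knowledge would apply.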

What Makes ToG So Great?

Deep Reasoning

ToG enables LLMs to perform deeper reasoning even in complex, knowledge-based tasks by leveraging knowledge graphs to connect and analyze information across multiple stages.

Responsible Reasoning

ToG provides transparency in the answer-generation process and allows for corrections when errors occur. By tracing reasoning paths and exploring additional information as needed, it delivers more reliable results, enhancing both the trustworthiness and usability of AI.

Flexibility and Efficiency

ToG offers a flexible and efficient framework that adapts to diverse environments. Its plug-and-play approach allows seamless integration with various LLMs and knowledge graphs, with no additional training required. Continuous data updates via knowledge graphs reduce the cost and time of updating LLM knowledge. Additionally, the researchers report that smaller LLMs such as Llama-2-70B, when paired with ToG, can rival larger models like GPT-4 in certain scenarios, which improves cost efficiency.

Knowledge Traceability & Correctability

The researchers emphasize that ToG’s knowledge traceability and knowledge correctability make it far more reliable than traditional approaches. These features are crucial for enhancing the practicality and responsibility of AI. Let’s take a closer look at how they work in practice!

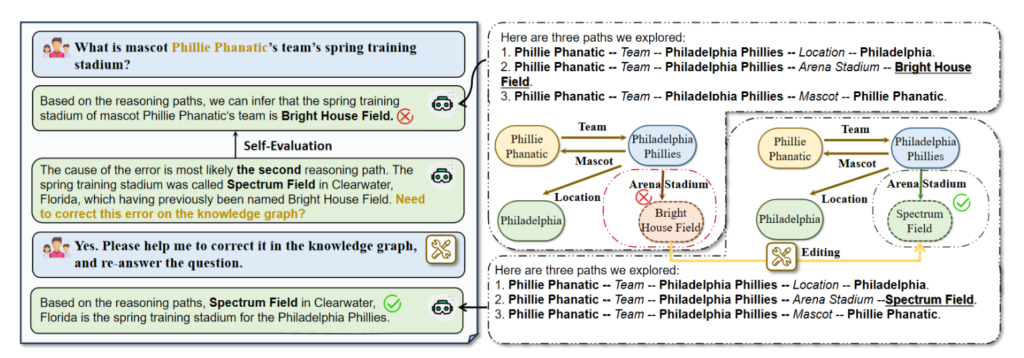

The illustration of knowledge traceability and correctability of ToG

When presented with the input question, “What is mascot Phillie Phanatic’s team’s spring training stadium?”, ToG initially outputs the incorrect answer, “Bright House Field.”

In the subsequent steps, ToG traces all reasoning paths to analyze the potential source of the error. It identifies that the mistake likely originated from the second reasoning path (Phillie Phanatic → Philadelphia Phillies → Bright House Field). This path contains an outdated triple (Philadelphia Phillies, Arena Stadium, Bright House Field): “Bright House Field” is the former name of the stadium, which has since been renamed “Spectrum Field.”

Using this clue, the user can instruct the LLM to correct the error. The updated knowledge allows ToG to provide the correct answer for the same question. This process highlights ToG’s ability to enhance the quality of knowledge graphs through knowledge infusion, improving both data accuracy and reliability.
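The trace-then-correct loop can be illustrated with a toy example. This is a sketch under assumptions, not ToG's actual interface: the graph is a plain list of triples, the `Team` relation name and the helper functions `trace_answer` and `correct_triple` are hypothetical, and the correction is applied by hand where ToG would have the user instruct the LLM. The stadium was renamed from Bright House Field to Spectrum Field, so the stored triple is the outdated one.

```python
def trace_answer(triples, start, relations):
    """Follow the relations from `start`, returning the answer and the triples used."""
    entity, evidence = start, []
    for relation in relations:
        match = next(
            (t for t in triples if t[0] == entity and t[1] == relation), None
        )
        if match is None:
            return None, evidence
        evidence.append(match)
        entity = match[2]
    return entity, evidence


def correct_triple(triples, outdated, new_tail):
    """Return a new triple list in which `outdated` has its tail replaced."""
    head, relation, _ = outdated
    return [(head, relation, new_tail) if t == outdated else t for t in triples]


triples = [
    ("Phillie Phanatic", "Team", "Philadelphia Phillies"),
    # Outdated triple: the stadium has since been renamed.
    ("Philadelphia Phillies", "Arena Stadium", "Bright House Field"),
]
question_path = ["Team", "Arena Stadium"]

# Traceability: the answer comes with the exact triples that produced it.
before, evidence = trace_answer(triples, "Phillie Phanatic", question_path)

# Correctability: a reviewer flags the last triple and supplies the current name.
fixed = correct_triple(triples, evidence[-1], "Spectrum Field")
after, _ = trace_answer(fixed, "Phillie Phanatic", question_path)

print(before, "->", after)
```

Because `evidence` pins the wrong answer to a single triple, the fix is a one-line update to the graph, and the same question then resolves correctly without touching the model.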