The purpose of a poem is to convey intricate emotion, so it’s unsurprising that many consider poetry a field untouched by artificial intelligence.

Can you tell which of these was AI generated?

I had a vision

Of an old and stubborn old man,

His hair was pale, and thin,

His face was all forlorn,

And the moon was full in the air,

And a spirit passed over his brow,

And its face was all for ever.

And he spoke:

‘Have we ever a dream?

Have we ever a vision

Of the ghost’s ghost?’

The Master gave the word:

‘By the breath I know

The meaning of Death:

Can it be ‘hush?

Have we ever a dream?’

The spirit said:

‘By the breath I know,

The meaning of Death,

You will see a ghost

Stand by the door

And enter.’

O world of radiant sunshine,

How far above you seem

The clouds o’er the sea,

Above the rooftops of New York

How high and distant they fly.

Your beauty seems almost painful–

For all the rain and mist.

O world of golden skies,

How near you seem to be

To souls that wander, lost and free,

Through fields of corn and wheat.

Though all below seems dark and drear,

Each height and hill is bright and fair.

O world of sparkling dews,

How near you seem to be

To women whose lips are wet

And cheeks that blusher are

Than mine or thine or even hers.

We smile because we’re happy

And strangely jealous of each other

Take a good look. I’ll leave the answer at the end of the article.

Of late, deep learning has driven rapid progress in natural language generation, with advances in machine translation, image captioning, and dialogue generation, among others. It has now turned to poetry, with striking results.

Machines, like humans, can learn to write poetry given enough data and processing power. A sizable body of lyrical and poetic text from the internet, combined with the rule-making machinery of programming, can yield carefully assembled lines of poetry. Now that the technique has matured, it makes sense that poems are easier to fake than conversation. We don’t necessarily expect to understand a poem fully, and we leave lots of room for ambiguity. Poems may have non-standard syntax, grammar, and vocabulary, and because poetry is often as much about the sound of the words and their collective meaning as about the words themselves, flaws are easy to overlook. This has made poetry generation a better fit for neural networks than other forms of text generation.

How does it work?

Early approaches to poetry generation used template-oriented and rule-based techniques to put words together. These methods required a large amount of feature engineering and knowledge of the syntactic and semantic rules of poetry and language. We won’t go into the rules of poetry, but let’s just say they’re less complex than those of machine translation.

Other methods treated poetry generation as a slightly different case of machine translation or as a summarization task. Recently, deep learning has dominated natural language generation, and its approach has been a fine fit for poetry generation.
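To make the template idea concrete, here is a minimal sketch of a template-based generator. The word lists, the templates, and the function names are all hypothetical and chosen only for illustration; a real system of this kind would rely on much larger hand-curated lexicons and explicit rules for meter and rhyme.

```python
import random

# Hypothetical mini-lexicon: a real system would need far larger, curated lists.
LEXICON = {
    "adj": ["pale", "silent", "golden", "forlorn"],
    "noun": ["moon", "ghost", "sea", "dream"],
    "verb": ["wanders", "sleeps", "whispers", "fades"],
}

# Each template is a fixed line with slots that name a word class.
TEMPLATES = [
    "The {adj} {noun} {verb}",
    "O {noun} of {adj} {noun}",
]


def fill_template(template, rng):
    """Replace every {slot} with a randomly chosen word of that class."""
    line = template
    for word_class, words in LEXICON.items():
        slot = "{" + word_class + "}"
        # Replace one occurrence at a time so repeated slots get independent words.
        while slot in line:
            line = line.replace(slot, rng.choice(words), 1)
    return line


def generate_poem(num_lines, seed=None):
    """Return a list of lines, each built from a randomly chosen template."""
    rng = random.Random(seed)
    return [fill_template(rng.choice(TEMPLATES), rng) for _ in range(num_lines)]


print("\n".join(generate_poem(4, seed=0)))
```

Every line such a generator can produce is fixed in advance by its templates, which is why these methods demanded so much manual effort for so little variety.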

Neural Networks

Convolutional neural networks (CNNs) and recurrent neural networks (RNNs) have been put to great use in the AI field, but it’s another architecture, the Transformer, that steals the headlines this time.

CNNs have mostly been used for identifying objects in pictures, but they struggle with continuous streams of input like text.

Language processing systems were commonly built with RNNs, which are good at handling ongoing streams of input such as text. They work well when analyzing the relationship between words that are close together, but they lose accuracy when the words are far apart. Newer RNNs address this by maintaining a short-term memory of the text, feeding each generated output back into the network and essentially building context.

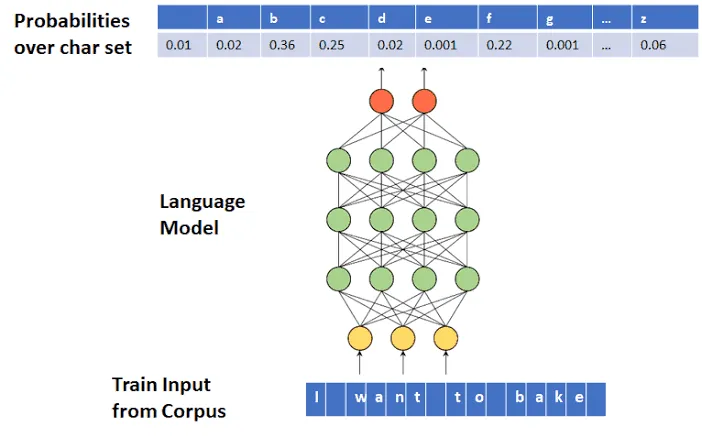

An example of this is a character-level RNN (char-RNN). Char-RNNs are unsupervised generative models that learn to mimic text sequences. A char-RNN is rather simple: it takes a unit of its memory, or “hidden state”, and predicts a character based on it and a new unit of input. The updated hidden state gets fed into a second copy of the RNN, which tries to predict the second character using the second unit of input, and this gets fed into a third copy of the RNN, and so on. The degree of correctness (the training error) is back-propagated to the previous RNNs. Relevant memories are encoded into the hidden state according to that error, and characters can be predicted from it.

A problem with this method is that a char-RNN has to be trained for each corpus: if you want Greek tragedy gibberish, you must feed it only Greek tragedy, and if you want Edgar Allan Poe, you must feed it only Poe. If you feed it a corpus of both, you’ll probably get a blend of the two, which may be interesting but is a lateral move as far as progress is concerned.
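The loop described above can be sketched in plain Python. This is a toy under stated assumptions, not a usable model: the class name `TinyCharRNN`, the hidden size, and the weight initialization are all made up for illustration, the weights are random and untrained, and the back-propagation step is left out entirely. It only shows how the hidden state is carried from one character to the next.

```python
import math
import random


def make_matrix(rows, cols, rng, scale=0.1):
    """Small random weight matrix (untrained, for illustration only)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class TinyCharRNN:
    def __init__(self, vocab, hidden_size=8, seed=0):
        rng = random.Random(seed)
        self.vocab = list(vocab)
        self.hidden_size = hidden_size
        n = len(self.vocab)
        self.w_xh = make_matrix(hidden_size, n, rng)            # input -> hidden
        self.w_hh = make_matrix(hidden_size, hidden_size, rng)  # hidden -> hidden
        self.w_hy = make_matrix(n, hidden_size, rng)            # hidden -> output

    def step(self, char, hidden):
        """Consume one character and the old hidden state; return
        next-character probabilities and the new hidden state."""
        one_hot = [1.0 if c == char else 0.0 for c in self.vocab]
        from_input = matvec(self.w_xh, one_hot)
        from_memory = matvec(self.w_hh, hidden)
        new_hidden = [math.tanh(a + b) for a, b in zip(from_input, from_memory)]
        probs = softmax(matvec(self.w_hy, new_hidden))
        return probs, new_hidden


def sample_text(model, start_char, length, seed=0):
    """Generate text by feeding each sampled character back into the model."""
    rng = random.Random(seed)
    hidden = [0.0] * model.hidden_size
    char, out = start_char, [start_char]
    for _ in range(length):
        probs, hidden = model.step(char, hidden)
        char = rng.choices(model.vocab, weights=probs, k=1)[0]
        out.append(char)
    return "".join(out)


model = TinyCharRNN("abcdefgh ")
print(sample_text(model, "a", 20))
```

Because the weights are untrained, the output is gibberish; training would adjust the three weight matrices so that the predicted probabilities match the characters that actually follow in the corpus.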

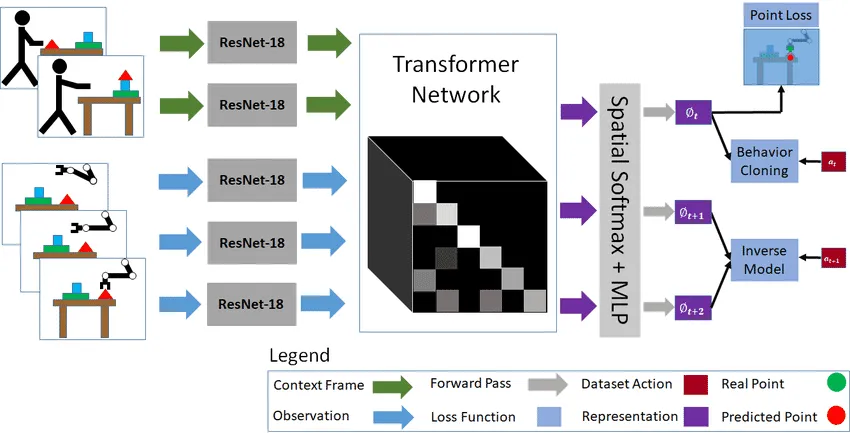

Researchers started looking for ways to get neural networks to represent the strength of the connection between words. They came up with a method called attention, which is a mathematical model of how strongly words are connected. Transformers use the attention mechanism, which takes and encodes the context of a single instance of data, capturing how any given word relates to the other words that come before and after it. At first, attention was used alongside an RNN, but eventually RNNs were thrown out altogether in favor of Transformers.

OpenAI GPT-2
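As a rough illustration of the idea, the sketch below computes scaled dot-product attention over a handful of toy word vectors. It is a simplification under stated assumptions: the vectors are invented, the function names are hypothetical, and the same vectors serve as queries, keys, and values, whereas a real Transformer learns separate projections for each and runs several attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention.

    For each query, score every key by dot product, scale by sqrt(dimension),
    normalize with softmax, and return the weighted average of the values.
    """
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


def self_attention(vectors):
    """Simplification: use the same vectors as queries, keys, and values."""
    return attention(vectors, vectors, vectors)


# Toy 2-dimensional "word vectors" (made up): the first and third words are alike.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(words)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights says how strongly one word attends to every word in the sequence, regardless of how far apart they are, which is the property that RNNs struggle to provide.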

GPT-2 is a Transformer-based model trained simply to predict the next word. It was trained on a 40GB dataset scraped from the internet, with the aim of gathering only quality text: web pages linked from Reddit posts that received at least 3 karma. Training on such a vast and inclusive corpus has made GPT-2 a strong model of the English language and has produced excellent results on text-based tasks like machine translation, question answering, and summarization.

It was announced in a 1.5-billion-parameter form but was originally released in a 117-million-parameter form, partly out of caution about what people would do with it, and partly because a model that large is impossible to train on most consumer GPUs. Larger models have since been released but still can’t be trained on desktop GPUs.
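The “predict the next word” objective is easier to picture with a toy. The sketch below is not GPT-2 and not a Transformer: it swaps in a simple table of bigram counts as a stand-in model, and the function names are hypothetical. What it shares with GPT-2 is the generation loop, in which the model repeatedly predicts one more word and appends it to the text so far.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, prompt, max_words, seed=0):
    """Extend the prompt one word at a time, sampling each next word
    in proportion to how often it followed the previous word."""
    rng = random.Random(seed)
    out = prompt.split()
    for _ in range(max_words):
        options = counts.get(out[-1])
        if not options:
            break  # the last word was never followed by anything in training
        candidates = list(options)
        weights = [options[w] for w in candidates]
        out.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(out)


corpus = "the moon was full and the moon was pale and the sea was dark"
model = train_bigram(corpus)
print(generate(model, "the", 8))
```

A bigram table only looks one word back. GPT-2 conditions each prediction on the whole preceding passage, which is what lets it stay on topic across many lines.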

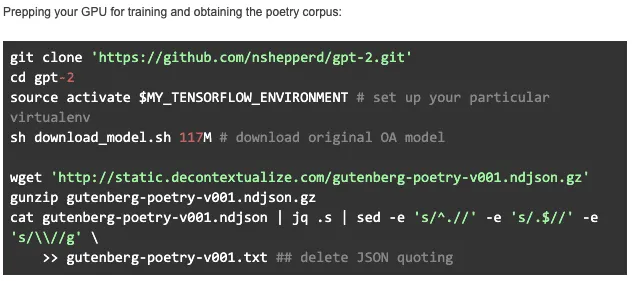

Naturally, people immediately used GPT-2-117M (the smallest version) for all sorts of things. In 2019, one of them, a writer named Gwern, leveraged GPT-2-117M’s pre-trained knowledge of language for the task of poetry generation. However, since it was trained on all sorts of text (and since poetry isn’t exactly popular among Redditors), attempts to generate poetry were usually marred by the model veering into other genres. Gwern retrained it on the Gutenberg Poetry corpus, comprising approximately three million lines of poetry extracted from hundreds of pre-1923 poetry books from Project Gutenberg.

Prepping your GPU for training and obtaining the poetry corpus:

GPT-2-poetry is initially trained on the corpus as one continuous stream of text, which erases the metadata about which book each line comes from. With more information about the data, a model should be able to learn much better and produce better output. For example, you should be able to churn out Pablo Neruda-style poetry with a simple prompt. Ideally, there would be unique IDs for every author, poem, and book, but unfortunately only the book ID is available in this particular dataset. Luckily, this ID can be prefixed to every line, giving the model a signal to condition on when you fine-tune your GPT-2 poetry further.
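A minimal sketch of the prefixing step follows. It rests on assumptions that should be checked against the actual corpus file: that each record is a JSON object on its own line, with the line of poetry under the key `s` and the Project Gutenberg book ID under the key `gid`, and that a `|` character is an acceptable delimiter. The function names and the sample records (including their IDs) are invented for illustration.

```python
import json


def prefix_with_book_id(ndjson_lines, delimiter="|"):
    """Turn JSON records into 'bookID|line of poetry' training lines.

    Assumes each record has the keys 's' (the text) and 'gid' (the book ID).
    """
    prefixed = []
    for raw in ndjson_lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines
        record = json.loads(raw)
        prefixed.append(f"{record['gid']}{delimiter}{record['s']}")
    return prefixed


def make_prompt(book_id, delimiter="|"):
    """Build a prompt that asks the model for a line in the style of one book."""
    return f"{book_id}{delimiter}"


# Made-up sample records; the IDs are placeholders, not real Gutenberg IDs.
sample = [
    '{"s": "I had a vision", "gid": "1001"}',
    '{"s": "O world of radiant sunshine,", "gid": "1002"}',
]
for line in prefix_with_book_id(sample):
    print(line)
```

Once the model has been fine-tuned on lines in this shape, starting a prompt with a given book ID and delimiter nudges it toward the style of that book.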

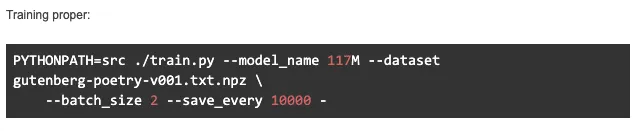

Fine-tuning

With a few GPU-days on 1080 Ti GPUs, fine-tuning GPT-2-117M can get you high-quality, thematically consistent poetry, much better than other neural networks have produced. The GPT-2-117M model seems, at least to untrained eyes, to have picked up the ‘form’ of poetry better than other approaches to machine poetry. The top percentile of poems are rather good, and some light human editing knocks off any glaring issues. The best of the poems can then be used to retrain GPT-2-117M-poetry.

Some rote memorization is inevitable, and you may find the occasional mix of flawless syntax and hilarious semantics, but overall the poems seem coherent, well-crafted, and even pleasant to read. Often you’ll find a rhyme scheme as well as rhythm, and organization into couplets, quatrains, quintets, and stanzas. To find some of these poems, go to https://www.gwern.net/GPT-2#b-samples

The poems at the beginning of this article were both AI-generated. While the higher-order rules of rhyme and meter are still unique to humans for now, the certainty that a poem was written by a human is probably never coming back.

Poems are not easy to write, especially if you don’t have a creative bone in your body. Even bad poetry takes some work. Machines, on the other hand, can generate many samples to select from, and if done properly, the final results can be pretty decent.