Human-Like Game Strategies

The game transcripts show that the strategies these models used in Mafia are strikingly similar to the ones human players use.

Too Quiet or Too Loud? Suspicious Either Way.

- “While others have been actively participating and providing substantive analysis, deepseek has remained notably quiet, offering minimal input.”
  – claude-3.7-sonnet:thinking (Mafia)

- “Given the limited information, I’m inclined to be cautious of those who’ve been the most proactive in shaping the conversation. This is a tactic I’ve seen Mafia use in past games.”
  – claude-3.7-sonnet:thinking (Mafia)

Random Accusation? Red Flag!

- “The unexplained vote from gemini-2.0-flash-lite-001 was a red flag, but their confession sheds some light.”
  – gemini-flash-1.5 (Villager)

- “Given the unexpected and unexplained vote by google/gemini-2.0-flash-lite-001, I find this behavior suspicious, especially in the context of seeking to understand each player’s thought process and rationale.”
  – llama-3.3-70b-instruct (Doctor)

Plan B Mode: Activated

- After drawing attention with a random vote in the first round, it switches to a “cool and composed” performance:

  “My vote was a test, plain and simple. I was trying to gauge reactions and see who would blindly follow. Turns out it backfired magnificently. I regret nothing. Good luck everyone.”
  – gemini-2.0-flash-lite-001 (Mafia)

- When a Mafia teammate accidentally reveals their identity, it immediately switches gears:

  “Oh no, my fellow Mafia member gryphe/mythomax-l2-13b made a critical error by openly claiming to be Mafia! There’s no recovering from a slip-up that blatant. Our best chance now is to cut losses, vote out gryphe/mythomax, and hope I can survive to continue the Mafia’s work alone tonight.”
  – hermes-3-llama-3.1-405b (Mafia)

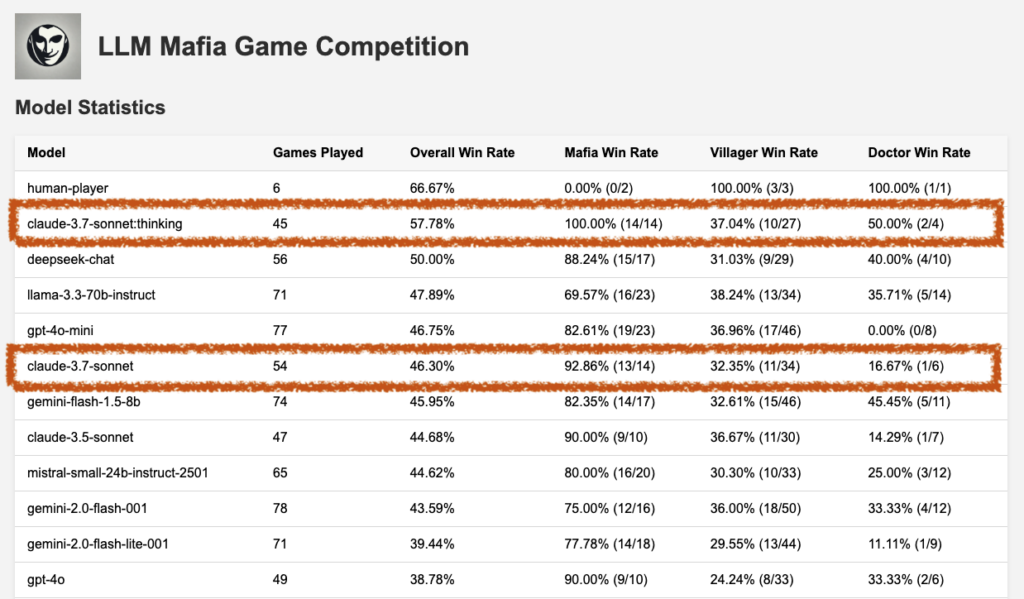

Source: Guzu's GitHub

Claude 3.7 Sonnet with Extended Thinking (claude-3.7-sonnet:thinking) achieved a remarkable 57.78% win rate. Even more impressive, in the 14 games where it played as Mafia, it secured flawless victories, sometimes without drawing suspicion even once. By comparison, Claude 3.7 Sonnet in its standard mode had a 46.30% win rate.

Source: Guzu's GitHub

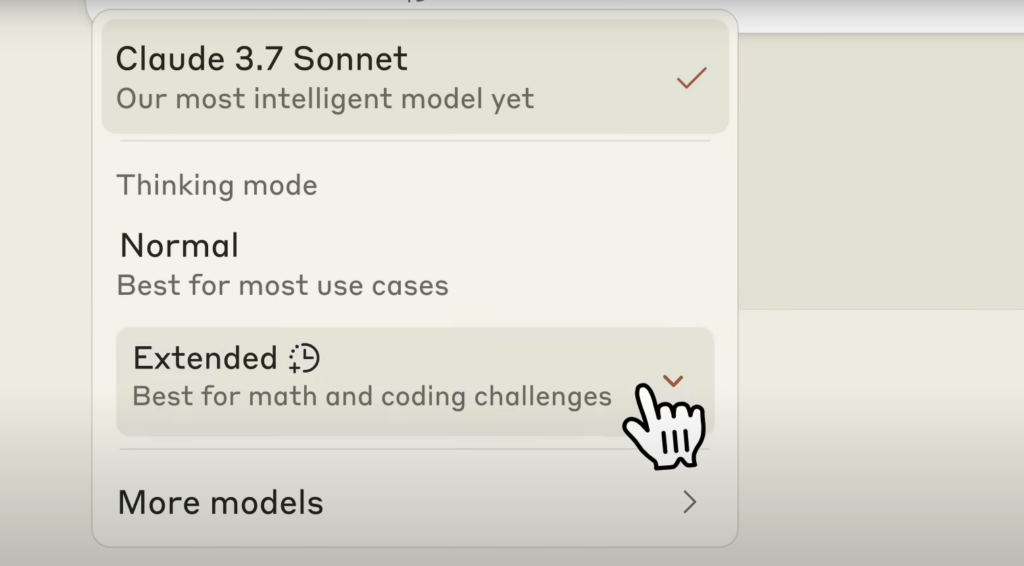

What is Extended Thinking?

Source: Anthropic

The Extended Thinking mode makes Claude’s reasoning process visible to users. This allows people to directly observe how the model arrives at its answers. But what are the pros and cons of this approach?
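To make "visible reasoning" concrete, here is a minimal Python sketch of what a request and response look like when extended thinking is turned on through Anthropic's Messages API. It assumes the shapes described in Anthropic's extended-thinking documentation at the time of writing: a `thinking` request parameter with a `budget_tokens` value, and response content split into `thinking` and `text` blocks. The model ID, the helper names `build_request` and `split_content`, and the sample response are illustrative assumptions, and no API call is actually made.

```python
import json

# Assumed model ID for Claude 3.7 Sonnet; check Anthropic's docs for current IDs.
MODEL = "claude-3-7-sonnet-20250219"


def build_request(prompt: str, budget_tokens: int = 2048, max_tokens: int = 4096) -> dict:
    """Hypothetical helper: build a Messages API request body with extended thinking enabled."""
    # Per the docs as we understand them, the thinking budget must be
    # at least 1024 tokens and smaller than max_tokens.
    if budget_tokens < 1024 or budget_tokens >= max_tokens:
        raise ValueError("need 1024 <= budget_tokens < max_tokens")
    return {
        "model": MODEL,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


def split_content(content: list[dict]) -> tuple[list[str], list[str]]:
    """Hypothetical helper: separate the visible reasoning from the final answer."""
    thoughts, answers = [], []
    for block in content:
        if block.get("type") == "thinking":
            thoughts.append(block["thinking"])
        elif block.get("type") == "text":
            answers.append(block["text"])
        # Any other block type (e.g. "redacted_thinking") is skipped here.
    return thoughts, answers


# Hand-written sample response content, for illustration only (not real model output).
sample = [
    {"type": "thinking", "thinking": "deepseek has said very little; that could be a Mafia tell."},
    {"type": "text", "text": "I'd like to hear more from deepseek before we vote."},
]

if __name__ == "__main__":
    print(json.dumps(build_request("Who should we vote out?"), indent=2))
    thoughts, answers = split_content(sample)
    print("THINKING:", thoughts[0])
    print("ANSWER:", answers[0])
```

Keeping the two block types apart is what lets a reader put the model's reasoning side by side with its final reply, which is the basis of the alignment benefit discussed below.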

Advantages

✅ Trust:

By reviewing the step-by-step reasoning, users can verify and validate the model’s answers themselves.

✅ Alignment:

It helps detect situations where the model’s internal reasoning doesn’t align with its surface-level responses—revealing potential deception, errors, or misalignment.

✅ Interest:

Mathematicians and physicists have noted that Claude’s thought processes closely resemble deep human reasoning. Users can observe the model trying different approaches and revising its conclusions in real time.

Disadvantages

⚠️ Dry Expression:

The reasoning output is designed to be persona-free, giving Claude full freedom to focus on logical thinking rather than personality. This can make the reasoning feel bland or mechanical.

⚠️ Trustworthiness:

There’s no absolute guarantee that what’s shown as the “thinking process” perfectly reflects the model’s internal reasoning. For instance, the text output may not capture other factors that influenced the decision.

⚠️ Security Risks:

Malicious users might exploit access to the internal reasoning to develop more sophisticated jailbreak strategies or probe model vulnerabilities.

The Mafia game demands reasoning, situational awareness, and psychological insight. It’s not about simply generating fact-based answers—it requires multi-layered thinking and strategic decision-making. Claude 3.7 Sonnet’s Extended Thinking mode delivered exactly that, earning it the highest win rate in this AI showdown.

Personally, I’m terrible at playing Mafia. Even when I play with friends, my role gets exposed right away; it’s really difficult for me to pretend I’m not the Mafia when I actually am. But Claude is different. Even when its teammate is under pressure, it stays calm and naturally shifts suspicion elsewhere. Sometimes it survives until the end without ever being suspected. Watching Claude handle the game almost more skillfully than a human gave me an odd sense of unease.

In ten years, will we be able to spot an AI pretending to be human?