Keeping up with the rapid pace of AI advancements can be challenging, especially with generative AI at the forefront. Generative AI, familiar to many through tools like ChatGPT, is breaking new ground by processing and generating not just text, but also images, audio, and video. Let’s explore just how far generative AI has come and where it’s headed!

Remarkable Advancements

Generative AI, which started with text generation, has evolved to handle and create diverse data types such as images, audio, and video. AI that can process several data types at once and generate outputs that connect them is known as multimodal AI.

For instance, if a user inputs a simple sketch and asks, “Create a promotional poster based on this image,” the AI can generate a polished result tailored to the request. Generative AI is no longer limited to processing static information—it is now actively used in creative tasks.

Just a few years ago, AI-generated images often had awkward hand shapes or unnatural colors. Today, generative models can produce highly realistic images, and they can also automatically create high-quality videos, voice narrations, and even film scores.

OpenAI's text-to-video model, Sora. Source: OpenAI

However, this remarkable evolution did not happen overnight. For AI to achieve human-like understanding and expressive capabilities, it requires vast amounts of data and a sophisticated training process to learn efficiently.

The Core: Pre-training and Post-training

Generative AI owes its current capabilities to vast datasets and sophisticated training. Within that training, pre-training and post-training are the two key stages that determine a model’s performance.

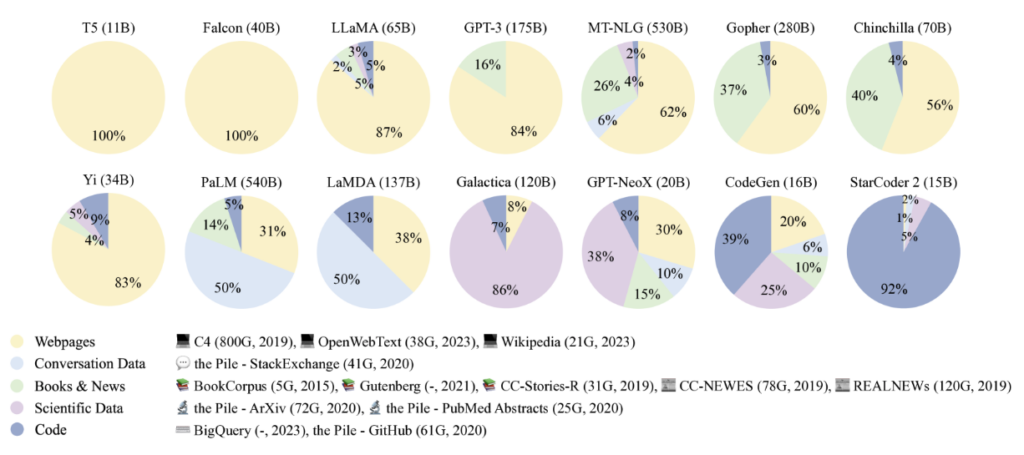

Ratios of various data sources in the pre-training data for existing LLMs. Source: A Survey of Large Language Models

Pre-training

Pre-training is the initial learning phase, in which a model is trained on massive amounts of data to learn the basic structure of language and data. During this stage, large corpora, such as news articles, books, and web-crawled content, are used to teach the model to predict the next word and to understand context.

One common pre-training method involves predicting the next word in a sequence based on context. For example, in the sentence, “AI is transforming ( ),” the model uses the context to predict likely words such as “the future” or “society.”
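The next-word objective can be illustrated with a deliberately tiny sketch. It assumes a hypothetical three-sentence corpus and a count-based bigram model (each word is predicted only from the word before it) instead of a neural network, so it shows the objective of pre-training rather than how real LLMs are built. All function names are illustrative.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy corpus; real pre-training corpora hold billions of words.
CORPUS = [
    "ai is transforming the future",
    "ai is transforming society",
    "ai is transforming the future of work",
]


def train_bigram(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Convert the counts for `prev` into a probability distribution over next words."""
    following = counts.get(prev, Counter())
    total = sum(following.values())
    if total == 0:
        return {}  # `prev` was never seen as a context word
    return {word: c / total for word, c in following.items()}


def avg_next_word_loss(counts, sentences):
    """Average negative log-likelihood of each actual next word (lower is better).

    Only valid for word pairs seen in training. Neural language models are
    pre-trained to minimize this same kind of next-token loss.
    """
    losses = []
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            losses.append(-math.log(next_word_probs(counts, prev)[nxt]))
    return sum(losses) / len(losses)


model = train_bigram(CORPUS)
print(next_word_probs(model, "transforming"))  # 'the' ≈ 0.67, 'society' ≈ 0.33
print(round(avg_next_word_loss(model, CORPUS), 3))
```

Because the model predicts one word at a time, “the future” comes out in two steps: “the” after “transforming”, then “future” after “the”.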

- From-Scratch Pre-training:

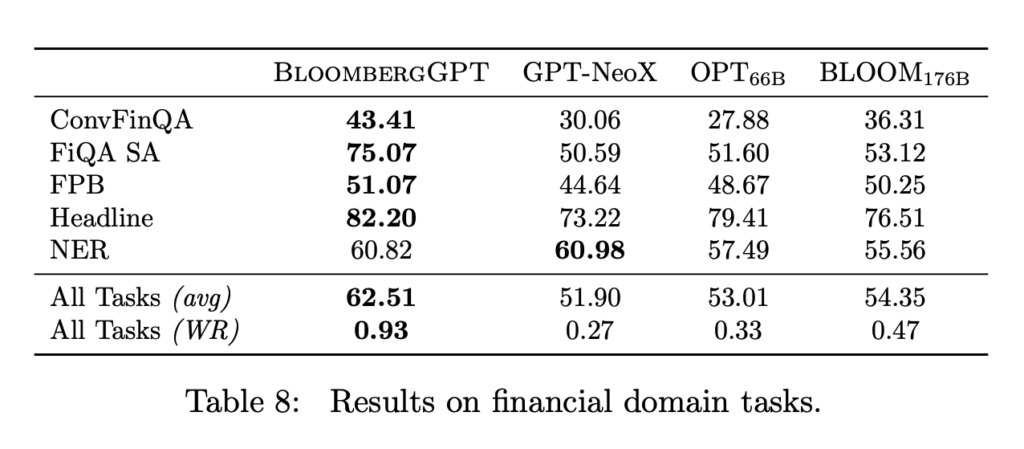

This approach trains a model starting from randomly initialized weights, using extensive datasets. For instance, BloombergGPT, a finance-specialized model, was pre-trained from scratch on a large corpus of financial data combined with general-purpose data, and it outperformed comparable general models on financial NLP tasks. However, this method requires massive amounts of data (often at the terabyte level), significant GPU resources, and substantial time and cost.

BloombergGPT's from-scratch pre-training results on financial domain tasks. Source: BloombergGPT: A Large Language Model for Finance
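To see why training from scratch is so expensive, a widely used rule of thumb for dense transformer models estimates training compute at roughly 6 floating-point operations per parameter per training token. The sketch below applies it; the 7B-parameter model size, the 1-trillion-token dataset, and the 150 TFLOP/s sustained per-GPU throughput are hypothetical values chosen for illustration, not BloombergGPT's actual configuration.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb estimate: about 6 FLOPs per parameter per training token (forward + backward)."""
    return 6.0 * n_params * n_tokens


def gpu_hours(total_flops: float, flops_per_gpu_per_s: float) -> float:
    """Convert a FLOP budget into GPU-hours at an assumed sustained throughput."""
    return total_flops / flops_per_gpu_per_s / 3600.0


# Hypothetical example: a 7B-parameter model trained on 1T tokens,
# assuming each GPU sustains 150 TFLOP/s in practice.
flops = training_flops(7e9, 1e12)
hours = gpu_hours(flops, 150e12)
print(f"{flops:.1e} FLOPs, about {hours:,.0f} GPU-hours")
```

Under these assumptions the estimate is about 4.2 × 10^22 FLOPs, or roughly 78,000 GPU-hours; real throughput and overheads vary widely.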

- Domain-Adaptive Pre-training (DAPT):

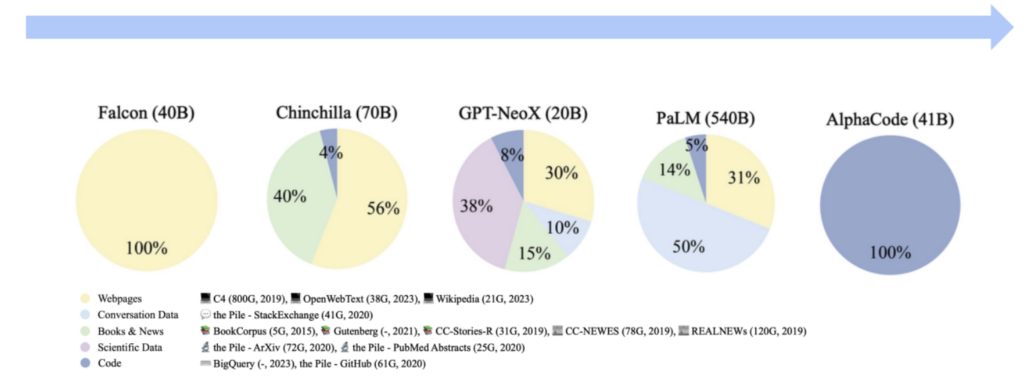

DAPT is an approach for building AI models specialized in a particular domain (e.g., finance, healthcare, law). It continues training an already pre-trained model on domain-specific data.

For example, in the medical domain, the model is further trained on research papers, medical term dictionaries, and clinical reports, enabling it to specialize in healthcare. Because DAPT starts from an already pre-trained model, it needs only a relatively small amount of domain-specific data compared with the massive corpora used in general pre-training, which makes it more efficient.

In fact, Datumo has conducted projects where DAPT was applied using specialized datasets in the telecommunications and finance sectors, building AI models tailored to meet client requirements.

An increasing number of models use task-adaptive and domain-adaptive data. Source: A Survey of Large Language Models
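The difference between pre-training from scratch and DAPT can be sketched with a toy count-based bigram model, used here only for illustration. Training from scratch starts from empty counts; DAPT-style continued pre-training copies the general model and keeps updating it with domain text. The corpora and function names are hypothetical, and real DAPT continues gradient-based training of a neural network rather than updating counts.

```python
import copy
from collections import Counter, defaultdict

# Hypothetical corpora for illustration only.
GENERAL_CORPUS = [
    "she lost interest in music",
    "a topic of interest to many readers",
]
FINANCE_CORPUS = [
    "interest rates rose again",
    "interest rates fell sharply",
    "the central bank raised interest rates",
]


def train_bigram(sentences, base=None):
    """Train a bigram count model.

    base=None  -> start from empty counts (training from scratch).
    base=model -> copy an existing model and keep training it (domain-adaptive).
    """
    counts = copy.deepcopy(base) if base is not None else defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, prev):
    """Return the most frequent word after `prev`, or None if `prev` was never seen."""
    following = counts.get(prev)
    if not following:
        return None
    return following.most_common(1)[0][0]


general_model = train_bigram(GENERAL_CORPUS)                      # from scratch
finance_model = train_bigram(FINANCE_CORPUS, base=general_model)  # DAPT-style update

print(most_likely_next(general_model, "interest"))  # in
print(most_likely_next(finance_model, "interest"))  # rates
```

The adapted model still keeps what it learned from general text (the “in” and “to” counts remain), which loosely mirrors why DAPT needs far less data than starting over.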

Post-training

Post-training is the process of fine-tuning a model, which has already been pre-trained, to optimize it for specific tasks. This phase is divided into two main steps:

- Instruction Tuning:

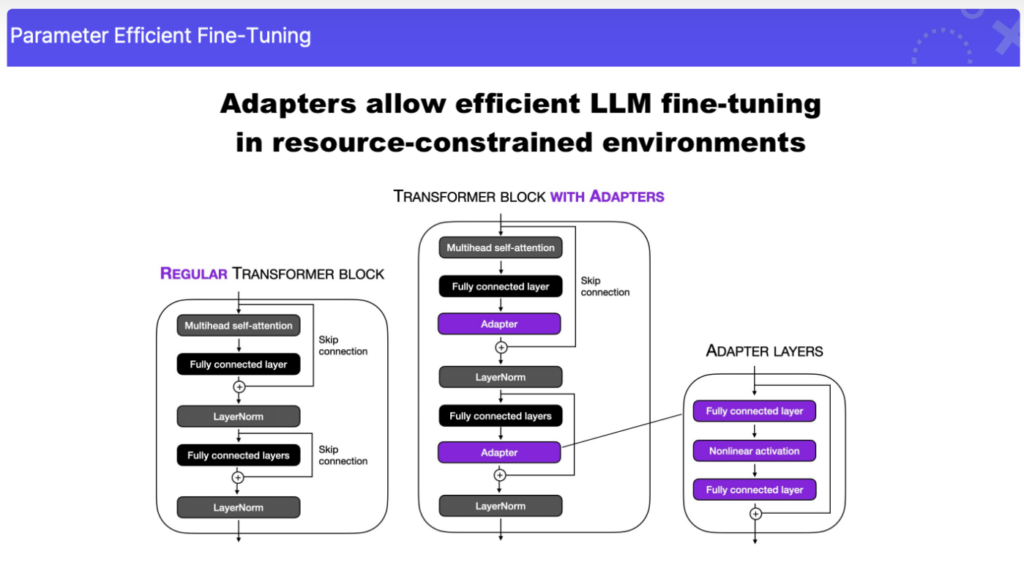

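Instruction tuning trains the model on examples of instructions paired with desired responses, so that it better understands user intent and performs specific tasks effectively. This process often uses PEFT (Parameter-Efficient Fine-Tuning), a family of techniques that reduce the cost of fine-tuning.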

PEFT improves efficiency by updating only a small number of parameters, often in small modules added to the model, while keeping the original pre-trained weights frozen, instead of modifying every parameter in the model. Using labeled data (i.e., data with correct answers), this approach improves the model’s performance on tasks such as question answering (QA), text summarization, and classification.
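The core idea of PEFT, freezing the pre-trained weights and training only a small number of added parameters, can be shown with a minimal pure-Python sketch. The “pre-trained” weights, the labeled examples, and the added per-output offset used as the trainable module are all hypothetical; it is a deliberately simple stand-in for the adapter modules that real PEFT methods attach inside a large neural network.

```python
import copy

# Frozen "pre-trained" weights of a tiny linear model (hypothetical values): 2 outputs x 3 inputs.
W_FROZEN = [[0.5, -1.0, 2.0],
            [1.5, 0.0, -0.5]]

# Hypothetical labeled task data: the new task needs each output shifted by a fixed amount.
TASK_SHIFT = [1.0, -0.5]
INPUTS = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0], [2.0, 1.0, 0.0], [1.0, 1.0, 1.0]]


def base_forward(w, x):
    """Output of the frozen base model: y = W x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


LABELS = [[y + s for y, s in zip(base_forward(W_FROZEN, x), TASK_SHIFT)] for x in INPUTS]


def train_added_params(w, inputs, labels, lr=0.1, steps=200):
    """Train only an added offset vector b (one value per output); w is never updated."""
    b = [0.0] * len(w)  # added parameters, initialized so the model starts unchanged
    for _ in range(steps):
        grad = [0.0] * len(b)
        for x, target in zip(inputs, labels):
            pred = [y + bj for y, bj in zip(base_forward(w, x), b)]
            for j in range(len(b)):
                # derivative of the mean squared error with respect to b_j
                grad[j] += 2.0 * (pred[j] - target[j]) / len(inputs)
        b = [bj - lr * gj for bj, gj in zip(b, grad)]
    return b


w_before = copy.deepcopy(W_FROZEN)
offset = train_added_params(W_FROZEN, INPUTS, LABELS)
n_frozen = sum(len(row) for row in W_FROZEN)
print(f"trainable: {len(offset)}, frozen: {n_frozen}, learned offset: {offset}")
```

Only 2 parameters are trained while the 6 base weights stay untouched; in a real model the same split is typically millions of trainable parameters against billions of frozen ones.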

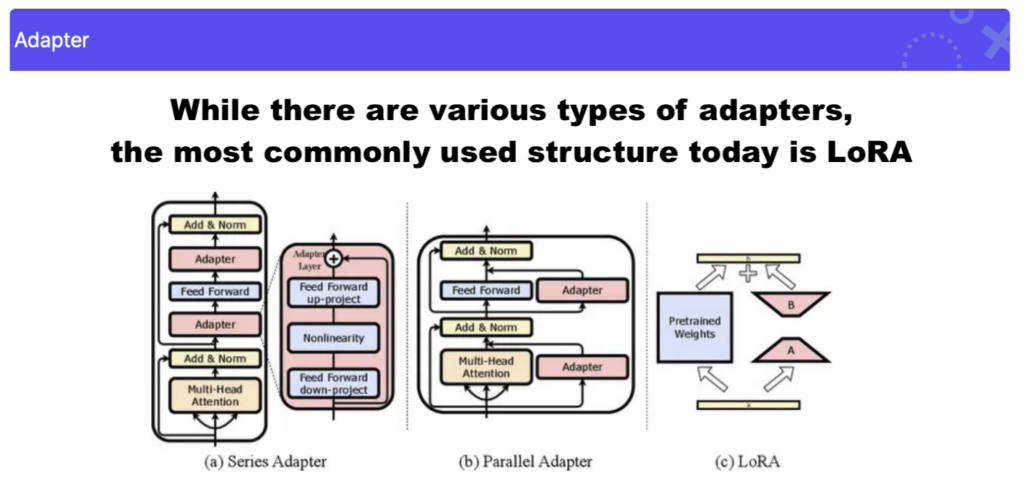

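A notable PEFT method is LoRA (Low-Rank Adaptation). LoRA freezes the pre-trained weights and learns a pair of small low-rank matrices whose product is added to selected weight matrices, which greatly reduces the number of trainable parameters and the memory needed for fine-tuning while often matching the quality of full fine-tuning.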
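The LoRA update can be written as h = W x + (alpha / r) · B A x, where W is the frozen pre-trained weight, A and B are small trainable matrices of rank r, and alpha is a scaling hyperparameter. The NumPy sketch below follows the LoRA paper's initialization (Gaussian A, zero B, so training starts from the unmodified model) and compares parameter counts; the matrix sizes, rank, and alpha are hypothetical values, and the training loop itself is omitted.

```python
import numpy as np


def init_lora(d_out, d_in, r, rng):
    """Create LoRA factors: A (r x d_in) small Gaussian, B (d_out x r) zeros."""
    A = rng.normal(scale=0.01, size=(r, d_in))
    B = np.zeros((d_out, r))
    return A, B


def lora_forward(x, W, A, B, alpha, r):
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B would be trained."""
    return W @ x + (alpha / r) * (B @ (A @ x))


def merged_weight(W, A, B, alpha, r):
    """After fine-tuning, the low-rank update can be folded into W for inference."""
    return W + (alpha / r) * (B @ A)


def lora_param_count(d_out, d_in, r):
    """Trainable parameters in A and B for one d_out x d_in weight matrix."""
    return r * (d_in + d_out)


rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 64, 64, 4, 8   # hypothetical sizes
W = rng.normal(size=(d_out, d_in))     # stand-in for a frozen pre-trained weight
A, B = init_lora(d_out, d_in, r, rng)
x = rng.normal(size=d_in)

# With B = 0 the adapted layer initially behaves exactly like the original one.
print(np.allclose(lora_forward(x, W, A, B, alpha, r), W @ x))  # True

# Trainable parameters for one hypothetical 4096 x 4096 layer with rank 8:
full, lora = 4096 * 4096, lora_param_count(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  ({lora / full:.2%})")
```

For that hypothetical 4096 × 4096 layer, rank-8 LoRA trains 65,536 parameters instead of 16,777,216, about 0.39% of the full matrix.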

While pre-training equips the AI model with a fundamental understanding of text patterns and structures, post-training refines this foundation to ensure the model performs specific tasks with precision and efficiency.

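- RLHF (Reinforcement Learning from Human Feedback):

This stage trains the model to generate more natural, helpful responses using human feedback. Human evaluators judge the model’s outputs, for example by marking a response as “like” or “dislike” or by choosing the better of two responses. These judgments are used to train a reward model, and the language model is then optimized with reinforcement learning to produce responses that the reward model scores highly. Through this process, AI better understands user intent and produces more human-like answers.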
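A key step inside RLHF is turning human judgments into a reward signal. A common formulation trains a reward model on pairs of responses where humans preferred one over the other, minimizing -log sigmoid(r(chosen) - r(rejected)). The pure-Python sketch below shows only that reward-modeling step; the hand-crafted response features, the preference pairs, and the linear reward are hypothetical simplifications, and the subsequent reinforcement-learning step (for example, PPO) that optimizes the language model against the reward is not shown.

```python
import math

# Each response is described by hypothetical features: [politeness, answers_question, verbosity].
# Each pair is (features of the preferred response, features of the rejected response).
PREFERENCES = [
    ([1.0, 1.0, 0.2], [0.0, 1.0, 0.2]),  # raters preferred the more polite answer
    ([0.5, 1.0, 0.1], [0.5, 0.0, 0.1]),  # ...the answer that addressed the question
    ([1.0, 1.0, 0.1], [1.0, 1.0, 0.9]),  # ...the more concise answer
]


def reward(w, features):
    """Linear reward model: r(x) = w . features(x)."""
    return sum(wi * fi for wi, fi in zip(w, features))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def preference_loss(w, pairs):
    """Average of -log sigmoid(r(chosen) - r(rejected)) over all pairs (Bradley-Terry style)."""
    total = 0.0
    for chosen, rejected in pairs:
        total += -math.log(sigmoid(reward(w, chosen) - reward(w, rejected)))
    return total / len(pairs)


def train_reward_model(pairs, lr=0.5, steps=500):
    """Plain gradient descent on the preference loss, starting from all-zero weights."""
    w = [0.0] * len(pairs[0][0])
    for _ in range(steps):
        grad = [0.0] * len(w)
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d/dw of -log sigmoid(margin) = -(1 - sigmoid(margin)) * (chosen - rejected)
            scale = -(1.0 - sigmoid(margin)) / len(pairs)
            for i, (c_i, r_i) in enumerate(zip(chosen, rejected)):
                grad[i] += scale * (c_i - r_i)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


w = train_reward_model(PREFERENCES)
print("learned weights:", [round(wi, 2) for wi in w])
print("loss before:", round(preference_loss([0.0, 0.0, 0.0], PREFERENCES), 3))
print("loss after: ", round(preference_loss(w, PREFERENCES), 3))
```

The learned weights reward politeness and answering the question and penalize verbosity, matching the hypothetical raters; in full RLHF, a neural reward model plays this role and scores the language model's own outputs during reinforcement learning.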

Through pre-training and post-training, generative AI learns to process not only text but also multimodal data, delivering results tailored to user needs.

What's next?

For generative AI to become a truly useful tool for people, it must understand human intent and meet expectations. To do so, it needs to deeply understand humans. We’ve already discussed how RLHF helps AI better grasp user intent and generate more human-like responses. In the next article, we’ll dive deeper into the concept of Human Alignment and explore RLHF as one of its core methods. Stay tuned!