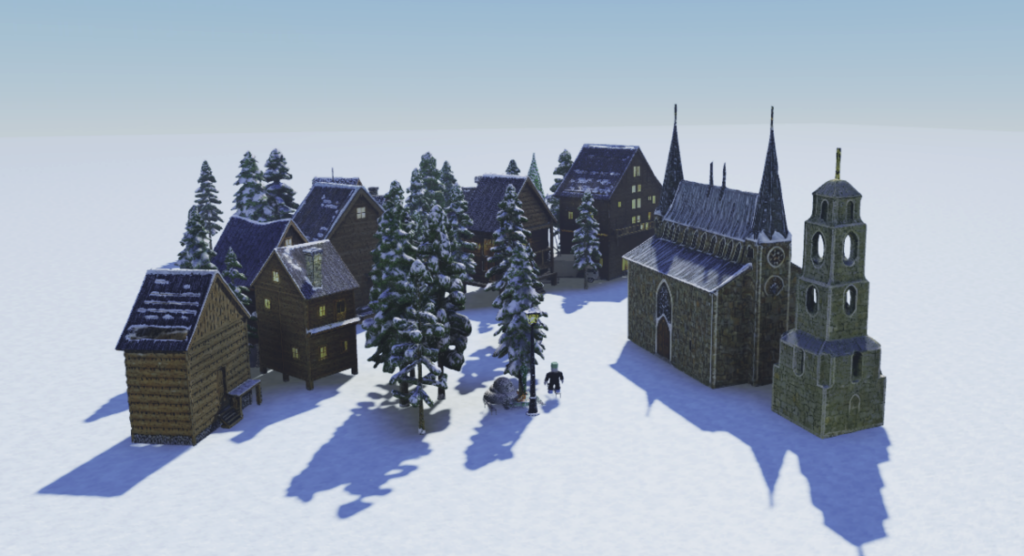

A sample 3D winter scene created through multi-turn dialogue. Source: Roblox.

Core Technology: Treating 3D Like Text

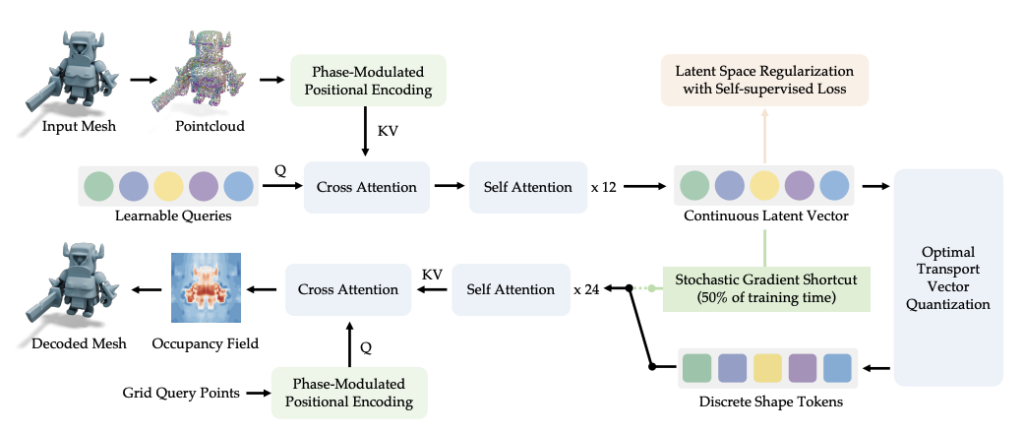

Shape tokenization pipeline. Source: Roblox.

What makes Cube special is its ability to treat 3D data like text. To do this, it first needs to break down a 3D shape (mesh) into tokens.

Just as words form sentences in text, Cube assumes that small 3D fragments can be combined to form a complete object. This is made possible through a Shape Tokenizer—a technique that breaks 3D objects into units an AI can understand. Here’s a quick look at how the tokenization process works:

- Sample points from the 3D mesh to create a point cloud

- Encode the position of each point

► Using PMPE (Phase-Modulated Positional Encoding, more on this below), spatial relationships like distance and structure are preserved

- Pass the encoded points through a Transformer to generate a latent vector

- Apply vector quantization to convert these continuous vectors into discrete tokens

After this process, the 3D object can be represented as a sequence of tokens—just like text. From there, Cube can generate or predict these tokens using GPT-style models.
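As a rough illustration of the first and last steps above, here is a minimal sketch in plain Python. It is not Roblox's implementation: the function names, the tiny tetrahedron mesh, and the four-entry codebook are all made up for the example, and the learned Transformer encoder is skipped entirely (the sampled points stand in for the latent vectors).

```python
import math
import random

def sample_points(vertices, triangles, n, rng):
    """Area-weighted sampling of n points on the surface of a triangle mesh."""
    def area(tri):
        a, b, c = (vertices[i] for i in tri)
        ab = [b[k] - a[k] for k in range(3)]
        ac = [c[k] - a[k] for k in range(3)]
        cross = [ab[1] * ac[2] - ab[2] * ac[1],
                 ab[2] * ac[0] - ab[0] * ac[2],
                 ab[0] * ac[1] - ab[1] * ac[0]]
        return 0.5 * math.sqrt(sum(x * x for x in cross))

    weights = [area(t) for t in triangles]
    points = []
    for tri in rng.choices(triangles, weights=weights, k=n):
        a, b, c = (vertices[i] for i in tri)
        u, v = rng.random(), rng.random()
        if u + v > 1.0:  # reflect so the sample stays inside the triangle
            u, v = 1.0 - u, 1.0 - v
        points.append(tuple(a[k] + u * (b[k] - a[k]) + v * (c[k] - a[k])
                            for k in range(3)))
    return points

def quantize(latents, codebook):
    """Replace each continuous vector by the index of its nearest codebook entry."""
    def sqdist(x, y):
        return sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return [min(range(len(codebook)), key=lambda j: sqdist(z, codebook[j]))
            for z in latents]

# Toy mesh: a tetrahedron (hypothetical data).
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
triangles = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]

rng = random.Random(0)
points = sample_points(vertices, triangles, 8, rng)

# Assumption: the raw points stand in for the Transformer's latent vectors,
# and the codebook is hand-written rather than learned.
codebook = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
tokens = quantize(points, codebook)
print(tokens)  # a list of 8 integer token ids
```

The key idea is the last step: once every vector is snapped to a codebook index, the shape becomes a sequence of integers that a language-model-style Transformer can predict.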

Use Cases

- Can a single line of text generate a 3D object?

- Can it describe a 3D object in natural language?

- Can it create full 3D scenes—like a campsite, office, or sushi restaurant—from just one line of text?

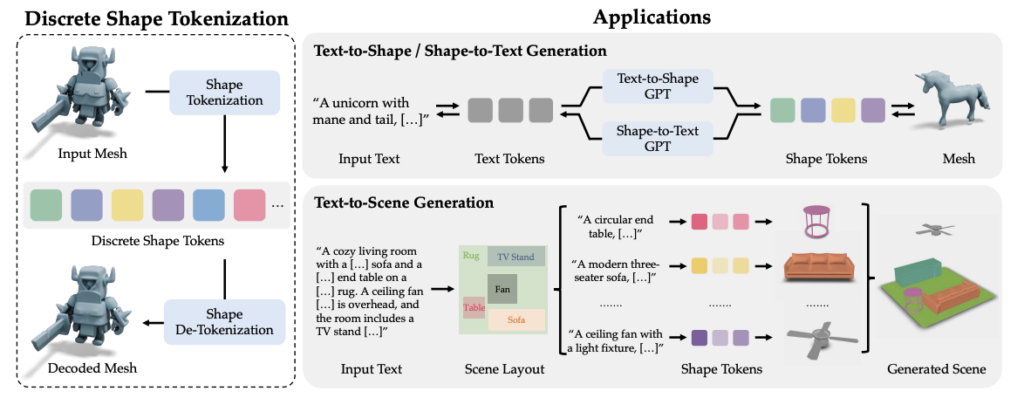

Overview. Source: Roblox.

1️⃣ Text-to-Shape Generation

Example: A crystal blade fantasy sword

The input text is first encoded using a CLIP model. A Transformer then generates Shape Tokens one by one, conditioned on that text encoding. To reconstruct the mesh, the tokens are decoded and the Marching Cubes algorithm is applied. Marching Cubes works on a grid of sampled values (such as occupancy) rather than directly on a point cloud: it finds the cells where the object's boundary passes through and stitches the surface together using triangles, resulting in a complete 3D model.
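The "one by one" generation described above is ordinary autoregressive sampling. The sketch below shows only the loop structure. `toy_logits` is a hypothetical stand-in for the trained Transformer, and the vocabulary size and text embedding values are invented for the example.

```python
import math
import random

def sample_shape_tokens(next_token_logits, text_embedding, length, rng,
                        temperature=1.0):
    """Generate tokens one at a time, each conditioned on the text and the prefix."""
    tokens = []
    for _ in range(length):
        logits = next_token_logits(text_embedding, tokens)
        scaled = [l / temperature for l in logits]
        top = max(scaled)
        # Unnormalised softmax weights; subtracting the max avoids overflow.
        weights = [math.exp(s - top) for s in scaled]
        tokens.append(rng.choices(range(len(weights)), weights=weights, k=1)[0])
    return tokens

def toy_logits(text_embedding, prefix, vocab_size=16):
    """Hypothetical model: favours one token that depends on the text and the last token."""
    last = prefix[-1] if prefix else 0
    bias = int(sum(text_embedding))
    favoured = (last + bias + 1) % vocab_size
    return [4.0 if t == favoured else 0.0 for t in range(vocab_size)]

text_embedding = [1.0, 2.0]  # made-up stand-in for a CLIP text encoding
tokens = sample_shape_tokens(toy_logits, text_embedding, 12, random.Random(0))
print(tokens)
```

Lowering `temperature` makes the loop behave almost greedily, always picking the most likely next token. Higher values produce more varied token sequences, and therefore more varied shapes.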

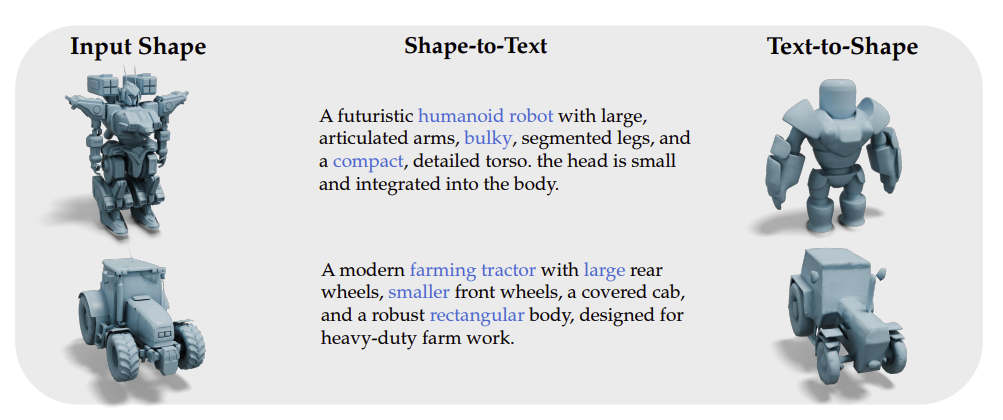

2️⃣ Shape-to-Text Generation

Example: 3D object of a sword → A fantasy sword with a crystal blade and gold handle

This task is crucial for describing 3D objects or enabling LLMs to understand them. A Transformer + LLM + MLP projection pipeline makes this possible. The length of the generated description can be controlled via prompts.

Shape cycle consistency tests were also successful: when a 3D object is turned into text and then regenerated from that text, the resulting object remains largely faithful to the original. While some high-frequency details may be lost, the core geometric structure is well preserved.

Shape cycle consistency. Source: Roblox.

3️⃣ Text-to-Scene Generation

Example: For a prompt like “Make a Japanese garden with a pagoda and a pond”, Cube generates relevant 3D objects (e.g., pagoda, pond) and arranges them in the appropriate positions and orientations to build a full scene. Here’s how it works:

- Text Input: The user provides a prompt to an LLM.

- Scene Graph Generation: The LLM converts the prompt into a scene graph (usually in JSON), specifying the layout, size, and shape of each object.

- Text-to-Shape: Each object is reconstructed as a 3D mesh via the Text-to-Shape model.

- Texture Application: The model applies textures to each object, completing the final scene.

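The conversation can then continue over multiple turns to refine the scene, with follow-up questions such as:

- “Where would you like to place condiments?”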

- “What kind of seating would fit well here?”

- “What background music would match the vibe?”

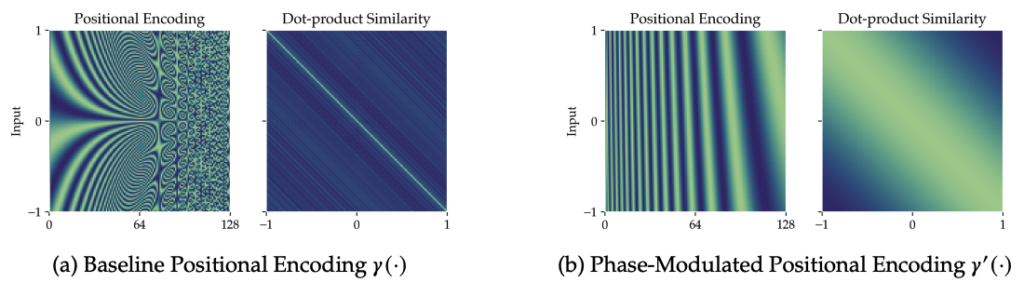
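To make the scene graph step of the Text-to-Scene pipeline more concrete, here is a small sketch of what such a JSON document might look like and how it could be consumed. The schema (`objects`, `prompt`, `position`, `rotation_y_deg`, `scale`) is an assumption made up for this example, not Cube's actual format, and `text_to_shape` is a placeholder that returns a label instead of a mesh.

```python
import json

# Hypothetical scene graph, as an LLM might emit it for the garden prompt.
scene_graph_json = """
{
  "scene": "Japanese garden",
  "objects": [
    {"name": "pagoda", "prompt": "a red wooden pagoda",
     "position": [0.0, 0.0, 0.0], "rotation_y_deg": 0.0, "scale": 3.0},
    {"name": "pond", "prompt": "a small koi pond",
     "position": [4.0, 0.0, 2.0], "rotation_y_deg": 30.0, "scale": 2.0}
  ]
}
"""

def text_to_shape(prompt):
    """Placeholder for the Text-to-Shape model; returns a label, not a real mesh."""
    return f"<mesh for '{prompt}'>"

def build_scene(graph_text):
    """Parse the scene graph and pair each object's shape with its placement."""
    graph = json.loads(graph_text)
    placed = []
    for obj in graph["objects"]:
        placed.append({
            "name": obj["name"],
            "mesh": text_to_shape(obj["prompt"]),
            "position": tuple(obj["position"]),
            "rotation_y_deg": obj["rotation_y_deg"],
            "scale": obj["scale"],
        })
    return placed

scene = build_scene(scene_graph_json)
for item in scene:
    print(item["name"], item["position"], item["mesh"])
```

Separating layout (the graph) from geometry (the per-object shape call) is what lets each object be generated independently and then placed into the scene.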

All About PMPE

Let’s take a quick look at PMPE (Phase-Modulated Positional Encoding), the technique mentioned earlier that helps Cube preserve distance and structure between points in a point cloud.

PMPE plays a key role in Cube’s spatial understanding. It was introduced to overcome limitations in traditional positional encoding methods. Those earlier methods often relied on periodic patterns (like sine and cosine waves), which could cause distant points to be encoded similarly, making it difficult to distinguish their spatial relationships. This posed a significant challenge in 3D modeling, where precise understanding of distance and geometry between points is critical.

Phase-Modulated Positional Encoding. Source: Roblox.

As shown in the figure above, (a) traditional positional encoding captures high-frequency details well, but it struggles to accurately reflect distance between points, often treating distant points as similar due to repetitive patterns.
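The repetition problem described above is easy to reproduce in one dimension. The sketch below uses a standard sine/cosine encoding, not PMPE itself (PMPE's exact formula is not given here), and keeps only a few high-frequency bands to isolate the effect. The specific frequencies and coordinates are arbitrary choices for the example.

```python
import math

def sinusoidal_encoding(x, frequencies):
    """Standard sine/cosine positional features for a scalar coordinate x."""
    feats = []
    for f in frequencies:
        feats.append(math.sin(f * x))
        feats.append(math.cos(f * x))
    return feats

def cosine_similarity(a, b):
    dot = sum(p * q for p, q in zip(a, b))
    norm_a = math.sqrt(sum(p * p for p in a))
    norm_b = math.sqrt(sum(q * q for q in b))
    return dot / (norm_a * norm_b)

# High-frequency bands only; the longest period among them is 0.25.
high_bands = [8 * math.pi, 16 * math.pi, 32 * math.pi]

x = 0.10
near = x + 0.01   # a close neighbour
far = x + 0.25    # exactly one period of the lowest band away

enc_x = sinusoidal_encoding(x, high_bands)
sim_near = cosine_similarity(enc_x, sinusoidal_encoding(near, high_bands))
sim_far = cosine_similarity(enc_x, sinusoidal_encoding(far, high_bands))

print(f"similarity to near point: {sim_near:.3f}")
print(f"similarity to far point:  {sim_far:.3f}")
```

In these bands the far point ends up with essentially the same encoding as `x`, so it looks more similar than the close neighbour does. A full encoding would also include lower-frequency bands, which softens the effect, but the high-frequency features still repeat in this way.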

PMPE addresses this issue by modulating the phase of the encoding so that it better reflects spatial relationships. Because the encoded values vary with the actual distance between points, far-apart points become easier to distinguish. The result is a more precise spatial representation and better shape reconstruction quality in 3D space.

Just as AI is making many tasks easier in the real world, it’s also simplifying the creation of virtual worlds. In recent months, concerns have grown over the unintended consequences of rapid AI development, often sparking fear and caution. But with Roblox’s Cube 3D, we’re reminded of the more hopeful side of technology—a future where building immersive digital experiences becomes more accessible, creative, and fun.