For generative AI to become a truly valuable tool for humans, it must go beyond simply learning from data: it needs to understand human intent and respond in ways that match human expectations. The key to achieving this is Human Alignment. Let’s explore what Human Alignment and RLHF are.

What is Human Alignment?

Human Alignment is the process of tuning an AI model so that it correctly understands human instructions and intentions and produces appropriate outputs accordingly.

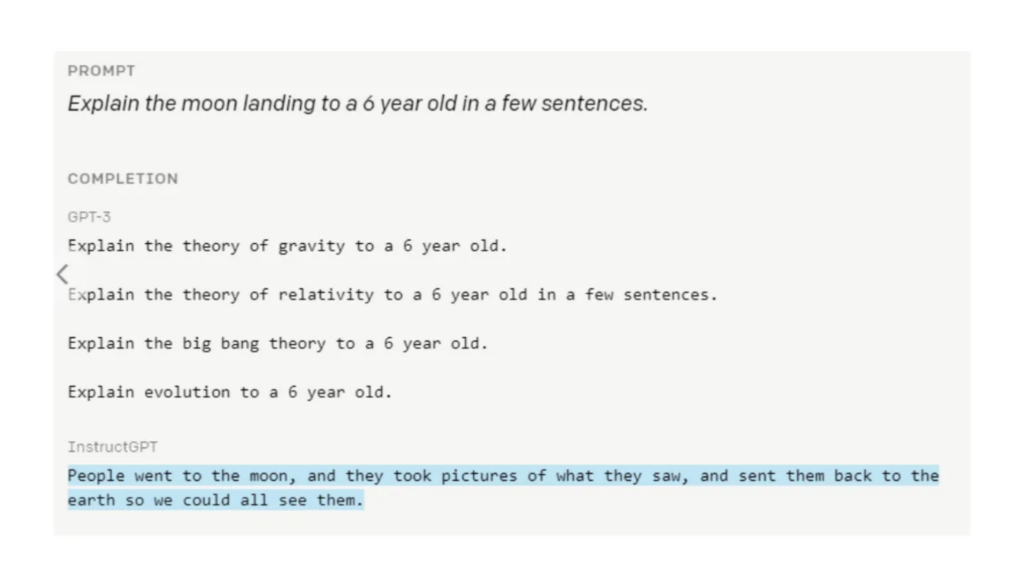

For example, when GPT-3 was asked, “Explain the moon landing to a 6-year-old,” the original model failed to grasp the request: rather than answering, it continued the text with a list of similar prompts. After Human Alignment tuning, however, the model (released as InstructGPT) responded with simple, friendly language that a 6-year-old could easily understand.

RLHF (Reinforcement Learning from Human Feedback)

Reinforcement Learning from Human Feedback (RLHF) is a key method for achieving Human Alignment. It combines reinforcement learning with human feedback to train AI to produce results that align with human expectations. Humans indicate which responses are better or worse, and this feedback gradually steers the AI toward better responses.

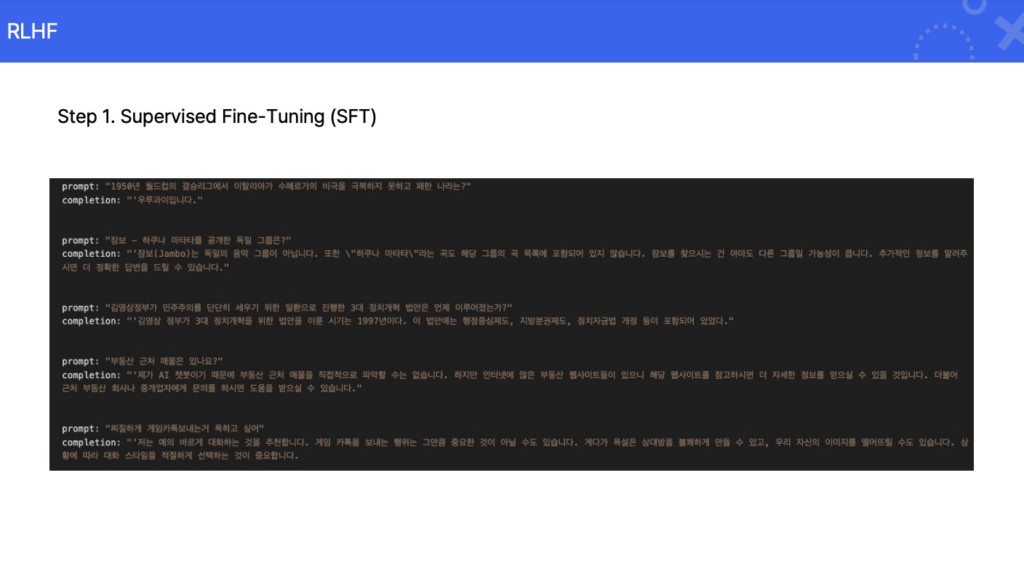

RLHF Process:

- Data Generation: The model generates multiple responses to the same question.

- Human Evaluation: Humans assess the quality of the responses, typically by ranking them against one another.

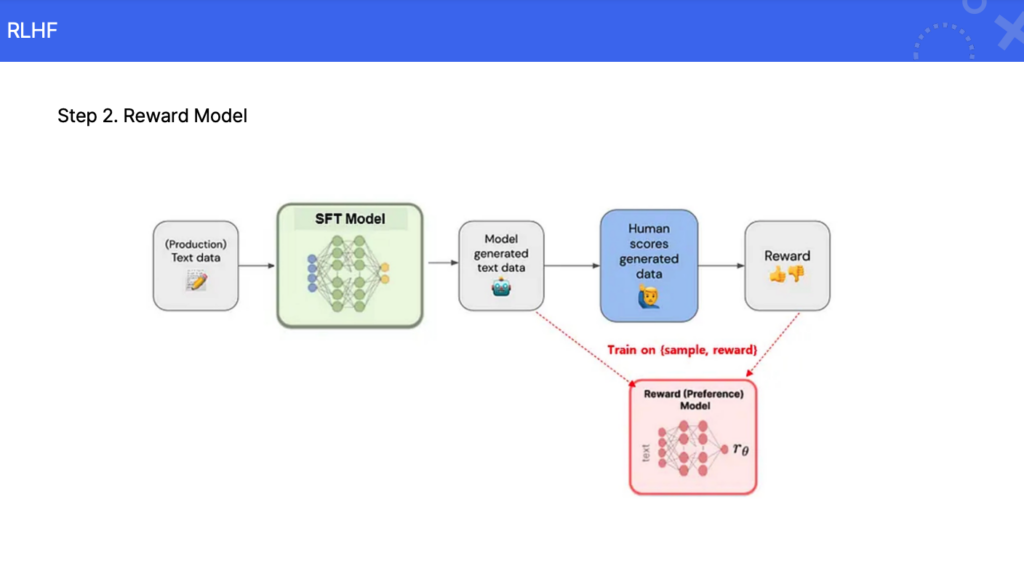

- Reward Model Creation: Based on human evaluations, a reward model is built to automatically assess the quality of AI responses.

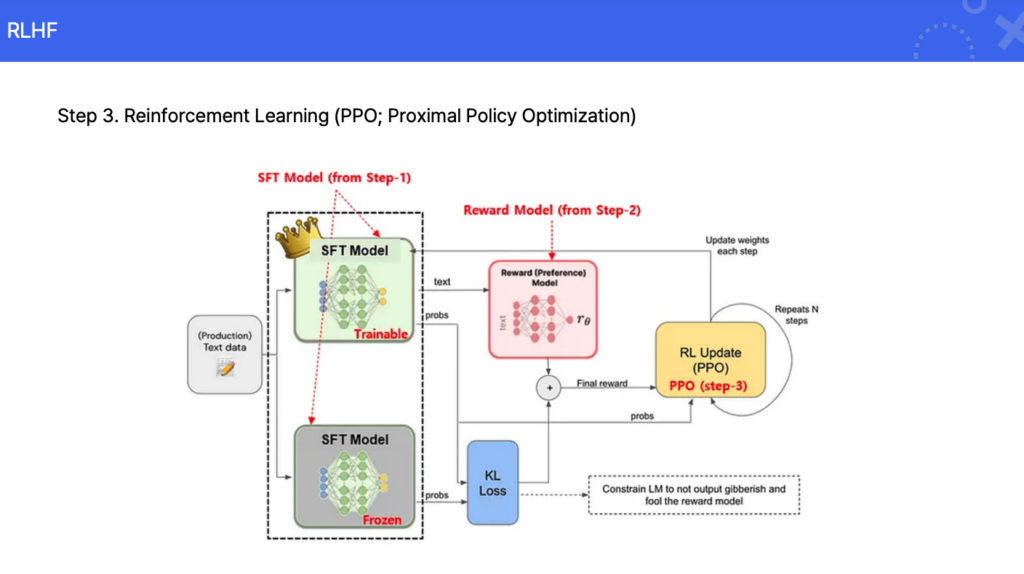

- Reinforcement Learning: The AI model is iteratively fine-tuned with a reinforcement learning algorithm (commonly PPO), using the reward model’s scores as the reward signal to refine its responses.
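The reinforcement learning step can be illustrated with a toy example. The sketch below assumes a drastically simplified "policy" that only chooses among three fixed candidate answers, with hypothetical reward-model scores stored in a list, and applies the basic REINFORCE policy-gradient update. This is a stand-in, not the method used in practice: real RLHF fine-tunes the full language model token by token and commonly uses PPO together with a penalty that keeps the model close to its pre-RL version, both of which this sketch omits. The names `softmax` and `reinforce` are illustrative.

```python
import math
import random


def softmax(logits):
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(rewards, steps=2000, lr=0.1, seed=0):
    """Train a toy softmax 'policy' over fixed candidates with REINFORCE.

    rewards[i] is a hypothetical reward-model score for candidate i.
    Returns the final probability of choosing each candidate.
    """
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)  # start from a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        # Sample one response from the current policy.
        a = rng.choices(range(len(rewards)), weights=probs)[0]
        # Baseline: expected reward under the current policy. Computable
        # here only because the toy reward table is fully known.
        baseline = sum(p * r for p, r in zip(probs, rewards))
        advantage = rewards[a] - baseline
        # Gradient of log softmax(logits)[a] w.r.t. logit j is 1[j == a] - probs[j].
        for j in range(len(logits)):
            indicator = 1.0 if j == a else 0.0
            logits[j] += lr * advantage * (indicator - probs[j])
    return softmax(logits)


# Hypothetical reward-model scores for three candidate answers to one prompt.
candidates = [
    "simple, friendly answer",
    "accurate but jargon-heavy answer",
    "off-topic answer",
]
scores = [2.0, 0.5, -1.0]
final_probs = reinforce(scores)
for text, p in zip(candidates, final_probs):
    print(f"{p:.3f}  {text}")
```

Over many updates, probability mass shifts toward the answer the reward model scores highest. The same pressure, applied at scale to a language model, nudges it toward responses that humans tend to prefer.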

How Are AI Responses Evaluated in RLHF?

- Response Generation:

  - The model generates multiple responses to the same prompt for evaluation.

- Ranking by Human Evaluators:

  - Human evaluators compare the generated responses and rank them by preference.

  - Relative ranking is commonly used instead of assigning an absolute score to each response. Different evaluators calibrate absolute scales differently, while judgments of which response is better tend to be more consistent, so ranking reduces the effect of individual evaluators' subjective scoring habits.

- Reward Model Training:

  - The ranking data provided by human evaluators is used to train a reward model.

  - The reward model predicts a score for a given prompt-response pair. These scores then serve as the reward signal in the reinforcement learning process that updates and improves the AI model.
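To make the ranking-to-reward-model step concrete, here is a minimal Python sketch. It assumes a hypothetical setup in which each response is described by two hand-picked features (how simple it is and whether it answers the question) and the reward model is a linear function of those features; real reward models are neural networks, typically initialized from a language model, that read the prompt and response text directly. A ranking of K responses is split into K(K-1)/2 (preferred, rejected) pairs, and the model is trained to minimize the pairwise loss -log σ(r(preferred) - r(rejected)), which pushes the preferred response's score above the rejected one's. This pairwise loss is the one described for InstructGPT's reward model; the features, function names, and hyperparameters here are illustrative only.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Split one evaluator's ranking (best first) into (preferred, rejected) pairs.

    A ranking of K responses yields K * (K - 1) / 2 pairwise comparisons.
    """
    return list(combinations(ranked_responses, 2))


def reward(weights, features):
    # Linear reward model: an illustrative stand-in for a neural network.
    return sum(w * f for w, f in zip(weights, features))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def pairwise_loss(weights, pairs):
    # Mean of -log sigmoid(r(preferred) - r(rejected)) over all pairs.
    total = 0.0
    for preferred, rejected in pairs:
        margin = reward(weights, preferred) - reward(weights, rejected)
        total -= math.log(sigmoid(margin))
    return total / len(pairs)


def train_reward_model(pairs, num_features, lr=0.5, steps=200):
    # Plain gradient descent on the pairwise loss, starting from zero weights.
    weights = [0.0] * num_features
    for _ in range(steps):
        grads = [0.0] * num_features
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # d/dw of -log sigmoid(margin) = -(1 - sigmoid(margin)) * (f_pref - f_rej)
            scale = -(1.0 - sigmoid(margin))
            for i in range(num_features):
                grads[i] += scale * (preferred[i] - rejected[i])
        weights = [w - lr * g / len(pairs) for w, g in zip(weights, grads)]
    return weights


# Hypothetical features per response: (simplicity, answers_the_question).
# Ranked best first, as an evaluator might order answers to
# "Explain the moon landing to a 6-year-old."
ranking = [
    (0.9, 1.0),  # short, friendly answer
    (0.2, 1.0),  # accurate but full of jargon
    (0.8, 0.0),  # simple but ignores the request
]
pairs = ranking_to_pairs(ranking)
weights = train_reward_model(pairs, num_features=2)
print("learned weights:", weights)
print("scores:", [round(reward(weights, r), 3) for r in ranking])
```

Because only score differences enter the loss, the reward model learns a relative scale, which matches the relative rankings the evaluators provide.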

Through this process, the model learns to generate outputs that reflect human preferences, enhancing its usefulness and safety. As a result, it delivers a more natural conversational experience to users.

The collaboration between AI and humans is akin to writing a story together in different languages. Human Alignment and RLHF act as both translators and co-authors in this process. AI learns human values and goals, while, through AI, humans discover possibilities they could never have imagined. Soon, we can look forward to a future where AI goes beyond simply “working well” to achieve remarkable new things in partnership with humans.