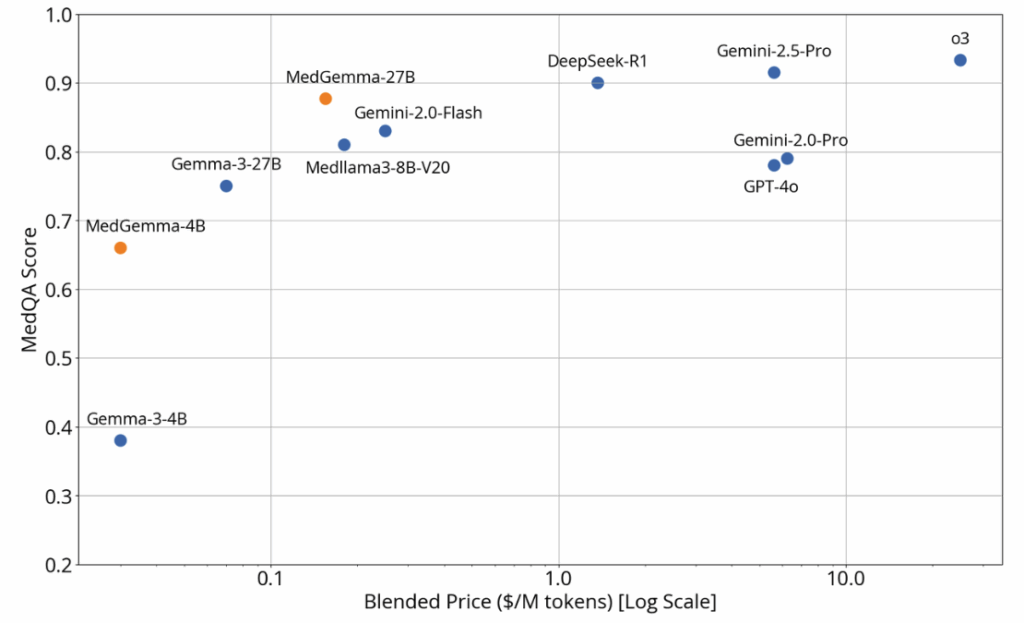

MedGemma: AI for Healthcare

MedGemma’s baseline performance on clinical knowledge and reasoning tasks is similar to that of much larger models. Source: Google

Here are some of MedGemma’s key use cases:

Medical Image Classification

The 4B model can be used for classifying radiology, pathology, fundus, and dermatology images. Thanks to its pretraining on medical data, it outperforms other models of similar size.

Image Interpretation and Report Generation

MedGemma can generate image-based medical reports or respond to visual queries in natural language, for example answering a question like “Are there any abnormalities in this chest X-ray?”

While the base model performs well, additional fine-tuning may be required for clinical-grade applications.

Medical Text Understanding and Clinical Reasoning

The 27B model is optimized for text-based clinical tasks.

Possible applications include:

- Generating responses to patient interviews

- Triage classification

- Clinical decision support

- Summarization and report generation

* For all use cases, rigorous evaluation and domain-specific fine-tuning are necessary before real-world deployment.


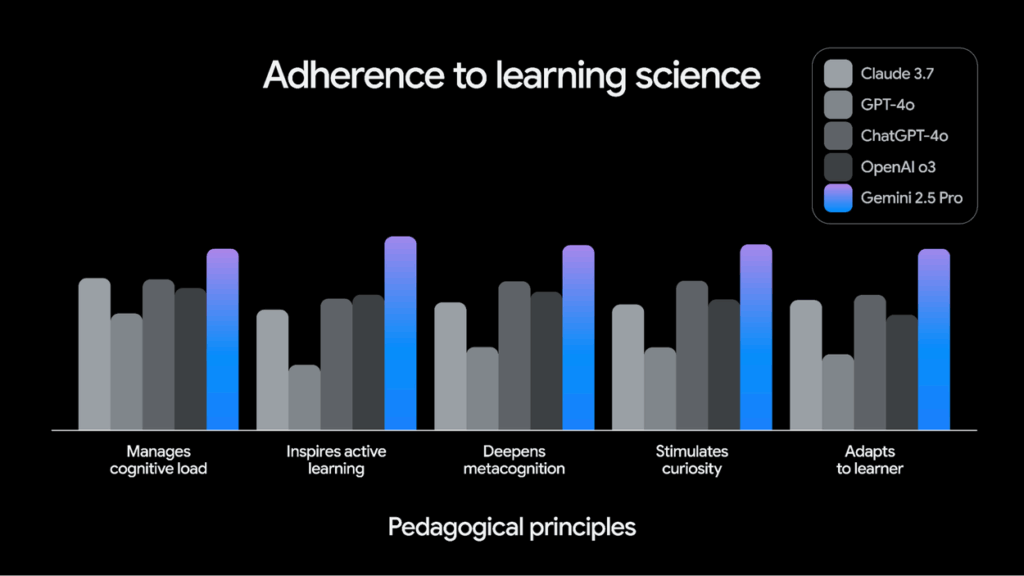

LearnLM: AI for Education

Source: Google

Building on this, LearnLM offers advanced STEM reasoning, personalized quiz and feedback generation, text complexity adjustment, and educator role simulation.

For example, if a student uploads class notes to Gemini, it can generate customized quizzes based on that content and even provide detailed explanations for each answer, which maximizes the learning impact.

FireSat: AI for Wildfires

Google FireSat. Source: Google

Let’s take a quick look at FireSat’s key technical features:

High-resolution multispectral satellite imagery

- Captures wildfire indicators with far greater clarity than conventional systems.

AI-powered detection algorithms

- Compares current satellite images with thousands of historical frames

- Factors in local weather and environmental variables to assess actual fire risk
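To make the idea of comparing a new image against historical frames more concrete, here is a minimal sketch in Python. It is not FireSat's actual algorithm, which Google has not detailed here. It assumes each frame is just a list of per-pixel brightness values and flags any pixel whose latest reading sits far above its own historical average. The function name `flag_hot_pixels`, the threshold `k`, and the sample numbers are all hypothetical, and weather or environmental variables are left out entirely.

```python
from statistics import mean, stdev


def flag_hot_pixels(history, current, k=3.0, min_std=1e-6):
    """Return indices of pixels whose current value is unusually high.

    history: list of past frames, each a list of per-pixel brightness values
    current: the latest frame, same length as each history frame
    k: how many standard deviations above the historical mean counts as anomalous
    min_std: floor on the standard deviation so a perfectly flat history
             does not produce a zero-width threshold
    """
    flagged = []
    for i, value in enumerate(current):
        # Collect this pixel's readings across all historical frames.
        past = [frame[i] for frame in history]
        mu = mean(past)
        sigma = max(stdev(past), min_std)
        # Flag only readings well above the pixel's usual range.
        if value > mu + k * sigma:
            flagged.append(i)
    return flagged


# Hypothetical data: four past frames of three pixels each.
history = [
    [300.0, 301.0, 299.0],
    [301.0, 300.0, 300.0],
    [299.0, 302.0, 301.0],
    [300.0, 301.0, 300.0],
]
current = [300.5, 340.0, 300.2]  # the middle pixel is suddenly much brighter

print(flag_hot_pixels(history, current))  # [1]
```

A real system would work with far more history and combine several spectral bands, but the sketch shows why a long archive of past frames helps: the more a pixel's normal behavior is known, the easier it is to tell a genuine hotspot from ordinary variation.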

Global updates every 20 minutes

- Once fully deployed, FireSat will scan the entire planet every 20 minutes

- Capable of detecting even small-scale fires the size of a classroom

At this year’s conference, Google unveiled a wave of announcements as vibrant and diverse as its iconic logo. CEO Sundar Pichai remarked, “Years of research are now becoming part of our everyday lives.” In a time when AI is often associated with concerns like deepfakes and copyright issues, it was refreshing to see such a bright and energetic vision of its potential. Google’s future has never looked more exciting. 💫