The development of advanced chatbots is increasingly powered by Large Language Models (LLMs). These models have revolutionized how we approach natural language understanding and generation, allowing chatbots to offer more contextually relevant and accurate responses. However, for an LLM-based chatbot to perform well, it needs a high-quality dataset to train on and robust LLM Evaluation to confirm that it is performing as intended.

The Importance of Datasets for Chatbots

A well-curated question-answer dataset is essential for training a responsive chatbot. This dataset provides the foundation upon which the LLM learns to interpret user inputs and generate relevant answers. Whether you use general-purpose Q&A datasets or domain-specific ones, the quality and variety of the data directly affect how accurately the chatbot can respond.

How Retrieval Augmented Generation (RAG) Enhances LLMs

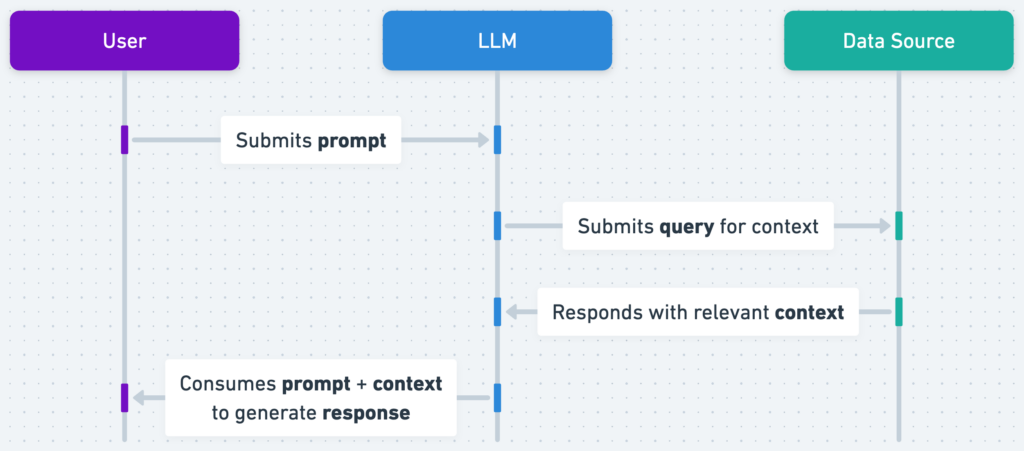

One of the most effective ways to improve chatbot performance is to incorporate Retrieval Augmented Generation (RAG). RAG retrieves relevant information from external datasets or knowledge bases and supplies it to the LLM as context, augmenting the responses the model generates. Grounding answers in this retrieved material makes them more up-to-date and factually accurate.

In customer service, for example, a chatbot using RAG can query a company’s database for recent product information, which lets it answer questions with accurate, up-to-date details. This approach helps keep responses relevant and reduces “hallucinations,” where an LLM generates incorrect or invented information.
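To make the retrieve-then-augment flow concrete, here is a minimal sketch under simplifying assumptions. The knowledge base, the bag-of-words cosine similarity, and the prompt template are all hypothetical stand-ins; a production RAG system would typically use embedding-based vector search and would then send the built prompt to an actual LLM, which is not shown here.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base standing in for a company's product database.
KNOWLEDGE_BASE = [
    "The X200 router ships with firmware version 3.2 and supports Wi-Fi 6.",
    "Returns are accepted within 30 days of purchase with a receipt.",
    "The X200 router's warranty covers hardware defects for two years.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens (bag of words)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the user query with retrieved context before it goes to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    print(build_prompt("How long is the X200 warranty?", KNOWLEDGE_BASE))
```

Instructing the model to answer only from the supplied context is one common way to discourage hallucinated details; it reduces, but does not eliminate, the risk.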

Evaluating LLM Performance: The Role of LLM Evaluation

An LLM generates responses based on its training data, but maintaining quality requires regular evaluation. LLM evaluation plays a critical role in measuring how accurately, relevantly, and efficiently the chatbot addresses user queries. It helps you identify areas that need improvement, such as the model’s understanding of specific types of questions or the naturalness and flow of its conversational responses. Continuous evaluation lets you confirm that the chatbot keeps pace with user needs and continues to provide reliable, contextually appropriate answers.
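One simple way to measure answer accuracy against a Q&A test set is to compute exact match and token-overlap F1, similar in spirit to the metrics used for extractive question answering. The sketch below assumes a hypothetical `stub_chatbot` function and a tiny hypothetical test set; in practice you would plug in your own chatbot and a held-out dataset, and complement these lexical metrics with human or model-based judgments of relevance and fluency.

```python
import re
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation, and split into tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized answers are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between two answers."""
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def evaluate(answer_fn, dataset):
    """Average exact match and F1 of answer_fn over (question, reference) pairs."""
    em = f1 = 0.0
    for question, reference in dataset:
        prediction = answer_fn(question)
        em += exact_match(prediction, reference)
        f1 += token_f1(prediction, reference)
    n = len(dataset)
    return {"exact_match": em / n, "f1": f1 / n}


# Hypothetical test set and chatbot stub, for illustration only.
TEST_SET = [
    ("What is the return window?", "30 days"),
    ("How long is the warranty?", "two years"),
]
_CANNED = {
    "What is the return window?": "30 days",
    "How long is the warranty?": "The warranty lasts two years.",
}


def stub_chatbot(question: str) -> str:
    return _CANNED.get(question, "")


if __name__ == "__main__":
    print(evaluate(stub_chatbot, TEST_SET))
```

Exact match penalizes the correct but verbose second answer, while F1 gives it partial credit; tracking both over time shows whether changes to the model or prompt actually help.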

RAG systems also benefit from LLM Evaluation, which helps assess how well the system retrieves relevant data and integrates it into its responses. By periodically updating retrieval indices and adjusting the LLM’s response-generation strategies, you can maintain high accuracy and relevance in your chatbot’s interactions.
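The retrieval half of a RAG system can be evaluated separately from the generated answers. A minimal sketch, assuming you have labeled which document IDs are relevant to each test question, is to compute standard information-retrieval metrics such as recall@k and mean reciprocal rank (MRR). The document IDs and runs below are hypothetical.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant document, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate_retrieval(runs, k: int = 3) -> dict[str, float]:
    """Mean recall@k and MRR over (retrieved_ids, relevant_ids) pairs."""
    n = len(runs)
    return {
        f"recall@{k}": sum(recall_at_k(r, rel, k) for r, rel in runs) / n,
        "mrr": sum(reciprocal_rank(r, rel) for r, rel in runs) / n,
    }


# Hypothetical runs: ranked IDs returned by the retriever, plus labeled relevant IDs.
RUNS = [
    (["d3", "d1", "d7"], {"d3"}),
    (["d2", "d5", "d4"], {"d4", "d9"}),
]

if __name__ == "__main__":
    print(evaluate_retrieval(RUNS, k=3))
```

A drop in these scores after re-indexing or changing the retriever suggests that answer-quality problems originate in retrieval rather than in generation.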

Building Optimal Chatbots: The Key Components

To build an effective chatbot using an LLM with RAG, it’s essential to focus on the following components:

- High-Quality Dataset: Curate a robust dataset, ensuring it covers a wide range of questions and answers, whether for general or domain-specific purposes.

- RAG Integration: Implement RAG to retrieve relevant, up-to-date information, enhancing the LLM’s ability to provide accurate answers.

- Continuous LLM Evaluation: Regularly evaluate the LLM’s performance to ensure reliable and contextually appropriate responses.

By following these steps, you can leverage LLMs, LLM Evaluation, and RAG to develop a chatbot that offers seamless, accurate, and context-rich conversations.

*Image credit: OpenAI