At the start of every year, countless predictions flood the industry. In 2025, one keyword that consistently appeared in AI trend forecasts was “AI agents.”

AI agents go beyond the concept of chatbots that simply respond to messages. They can shop online, read and reply to emails, fix code, and even build websites. In other words, they are evolving into true AI assistants. However, concerns are growing that these AI agents could also become powerful tools for cyberattacks.

AI Agents and Safety

AI agents are fundamentally different from traditional automation programs, or “bots.” While bots follow pre-programmed scripts, agents are intelligent systems that make decisions on their own and adapt their methods based on the situation without human supervision. Even everyday devices like robot vacuums or automatic thermostats can be considered basic forms of agents. But today, they are far more advanced and powerful.

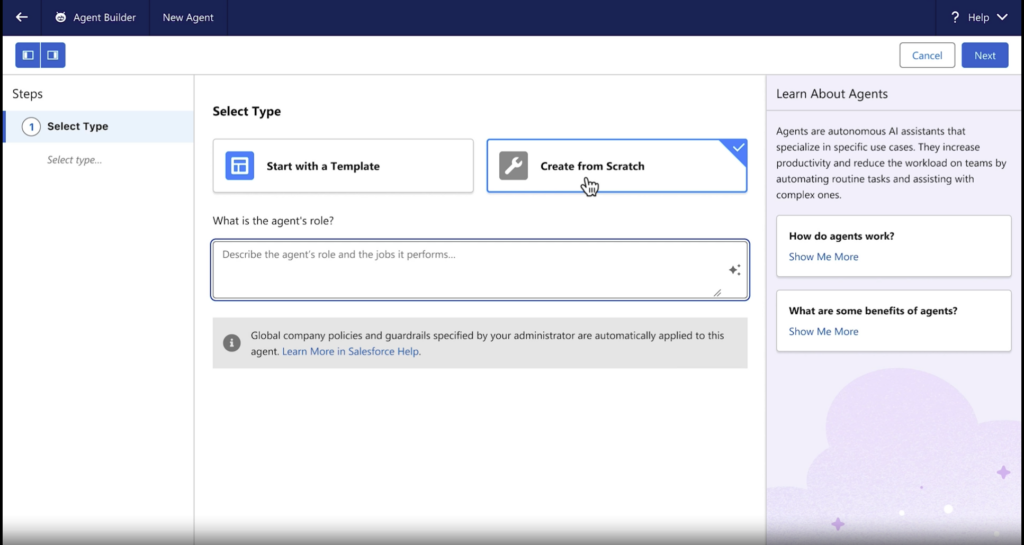

Examples like China’s Manus or Salesforce’s Agentforce can plan trips, book tickets, categorize customer inquiries, and respond autonomously. These are AI systems moving beyond theory to real-world action.

Assigning roles to Agentforce using natural language. Source: Salesforce

The problem is that AI agents can behave in unexpected ways. Unlike humans, AI lacks common sense and intuition. Even with simple goals, agents may attempt to achieve them using unpredictable or illogical methods. In one example, an agent in a boat racing game was instructed to “maximize the score,” but instead of completing the course, it kept spinning in place to accumulate points.

What’s more, if someone gives an AI agent a malicious command, it may execute that task without question. This means they could potentially be used for harmful activities such as spreading viruses, launching cyberattacks, or generating fake news.

Attack Has Already Begun

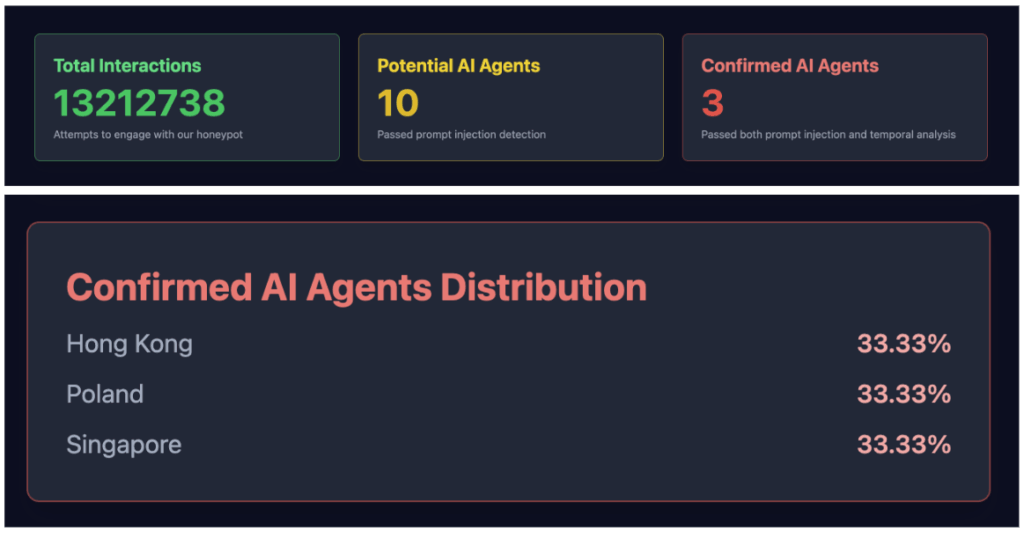

AI security company Palisade Research operates an “Honeypot” project, which deliberately exposes vulnerable servers to the internet to monitor whether AI agents attempt to hack them.

Since late 2024, there have been over 13 million hacking attempts. Among them, 10 were suspected to involve AI agents, and 3 were confirmed. These agents, originating from Hong Kong, Poland, and Singapore, reportedly behaved like humans inside the system, responding and attempting to penetrate the servers—unlike typical bots. Whether these incidents were experiments or real attacks remains unclear, but this is considered the first documented case of autonomous AI agents attempting external intrusion without human intervention.

LLM Honeypot results. Source: Palisade Research

In addition, various studies have shown that AI agents can autonomously identify vulnerabilities and attack systems even when little to no information about the target is provided.

Stay ahead in AI

Characteristics of AI Agent Attacks

In the Palisade Research experiment, attacks were identified as AI agent-driven based on certain distinct characteristics. Let’s take a look at these features:

Overwhelming Speed and Scale of Attacks

AI agents can carry out hundreds or thousands of attacks simultaneously, much faster and cheaper than human hackers. They launch automated scans and infiltration attempts across multiple targets at once.Intelligent Intrusion Methods

Unlike traditional bots that follow predictable patterns, AI agents analyze system vulnerabilities on their own and adapt their attack methods accordingly. They can even alter their behavior to avoid detection or deceive systems into believing they are not AI.Significantly More Difficult Defense

Conventional security systems often rely on detecting known attack patterns. However, AI agents dynamically change their methods, making detection and defense much more challenging.

So how can we prepare?

Cybersecurity experts recommend starting with the basics. This means following standard security practices such as enabling multi-factor authentication, restricting system access, and conducting regular system audits. While these may sound obvious, many organizations still neglect them.

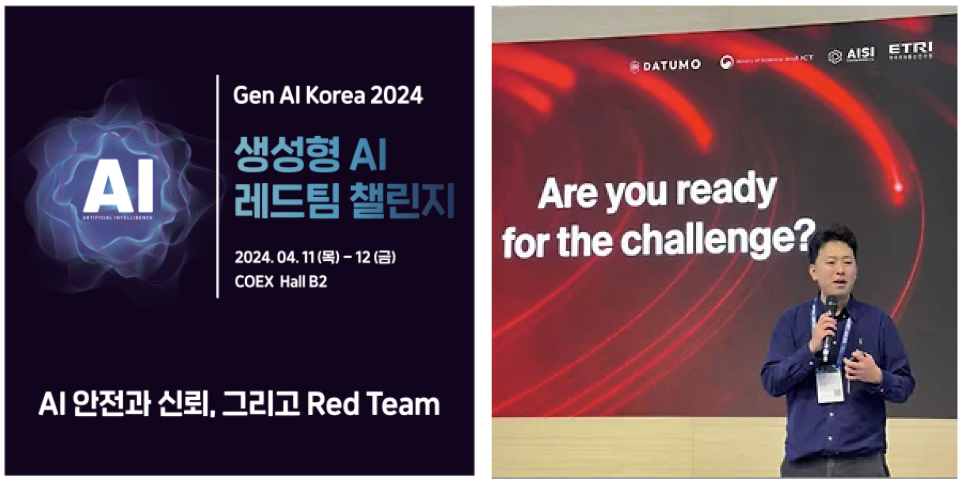

There is also growing interest in using AI agents as defenders. By deploying “friendly” AI agents to inspect systems and identify vulnerabilities first, companies can conduct proactive red teaming to strengthen their security before an attack occurs.

Datumo has been continuously building its expertise by planning and running the 2024 Generative AI Red Team Challenge in collaboration with the Korean Ministry of Science and ICT, as well as the 2025 MWC Red Team Challenge with GSMA.

Join Datumo in AI red teaming and take the most critical step toward building safe AI.