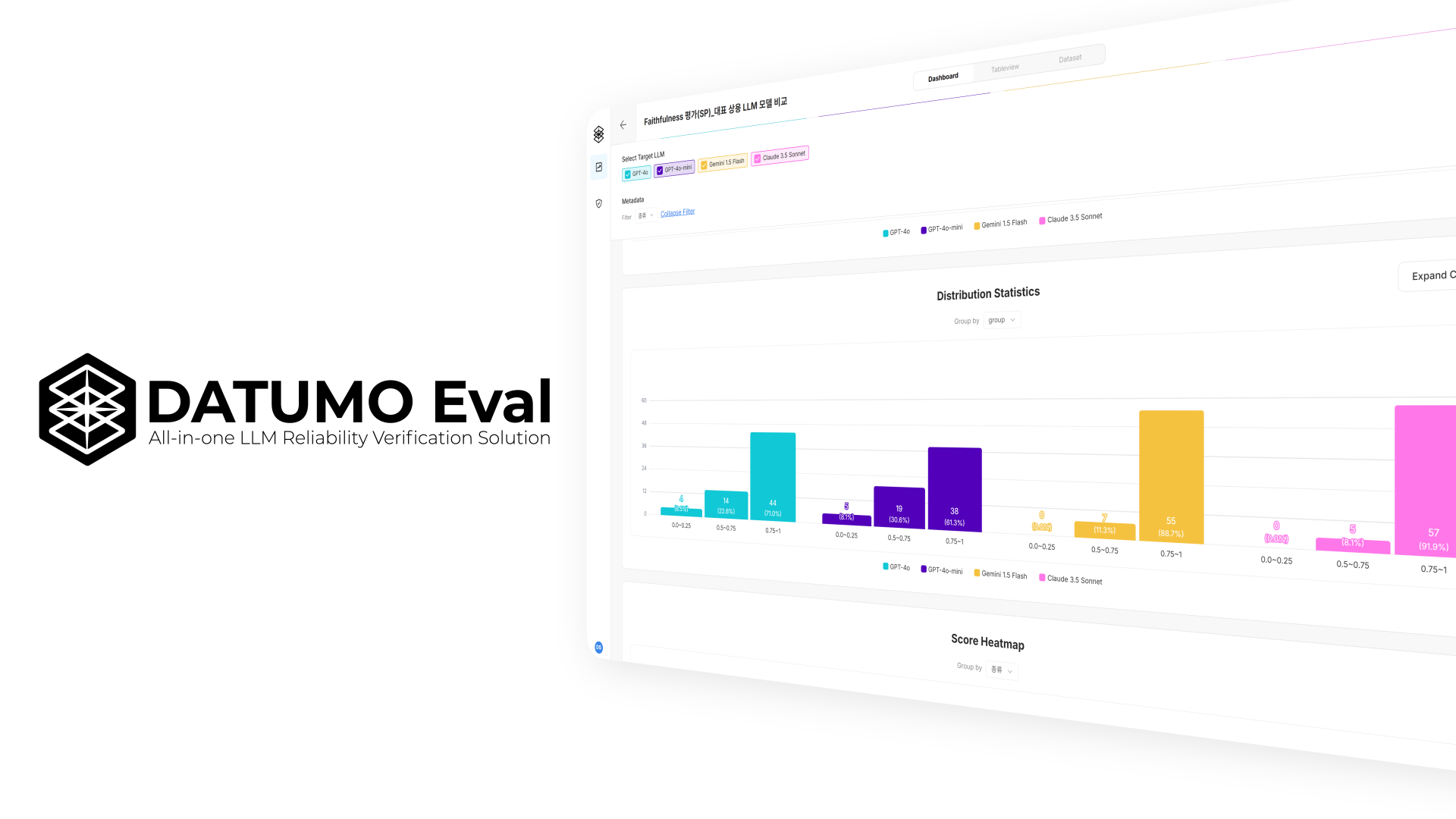

How to Measure LLMs: Key Metrics Explained

Datumo Eval is an AI reliability evaluation platform designed to quantify and monitor the quality of LLM responses. It offers a wide range of evaluation tools to help teams build more trustworthy AI. At the core of the platform is...