“AI developers were spending over 80% of their time on data collection and labeling. That’s when I realized: the real bottleneck wasn’t the algorithm — it was the data.”

– David Kim, CEO & Co-founder of Datumo

We’re excited to announce that Datumo was recently featured in an in-depth interview with Founders’ Interviews, which explores how we’re addressing one of the most critical challenges in AI today: trust.

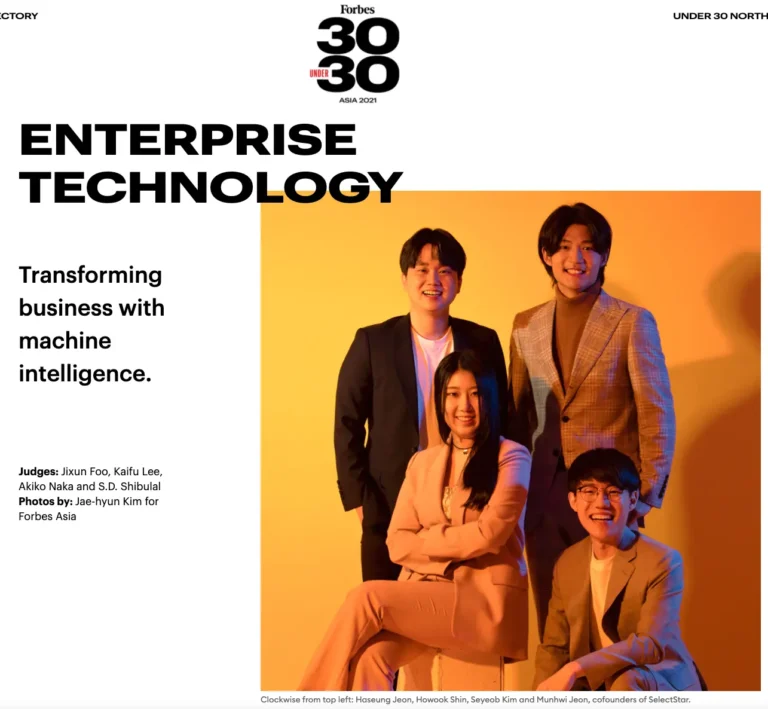

From Data Crowdsourcing to Global AI Evaluation

Datumo started with a clear observation: while models were evolving rapidly, data pipelines weren’t keeping up. In 2018, our CEO David Kim noticed that AI developers were spending the majority of their time gathering and labeling data, not actually building models. That insight sparked the launch of Cash Mission, our first crowdsourcing platform for AI training data.

Fast forward to today, and Datumo has grown into a leading provider of AI data services and trustworthiness evaluation tools. We have processed over 200 million data cases and served clients including Samsung, Naver, KT, LG, and SK Telecom.

Why Trustworthiness Matters More Than Ever

Generative AI is evolving quickly, but that progress raises tough questions: Can we trust what these models generate? Are they safe? Are they fair?

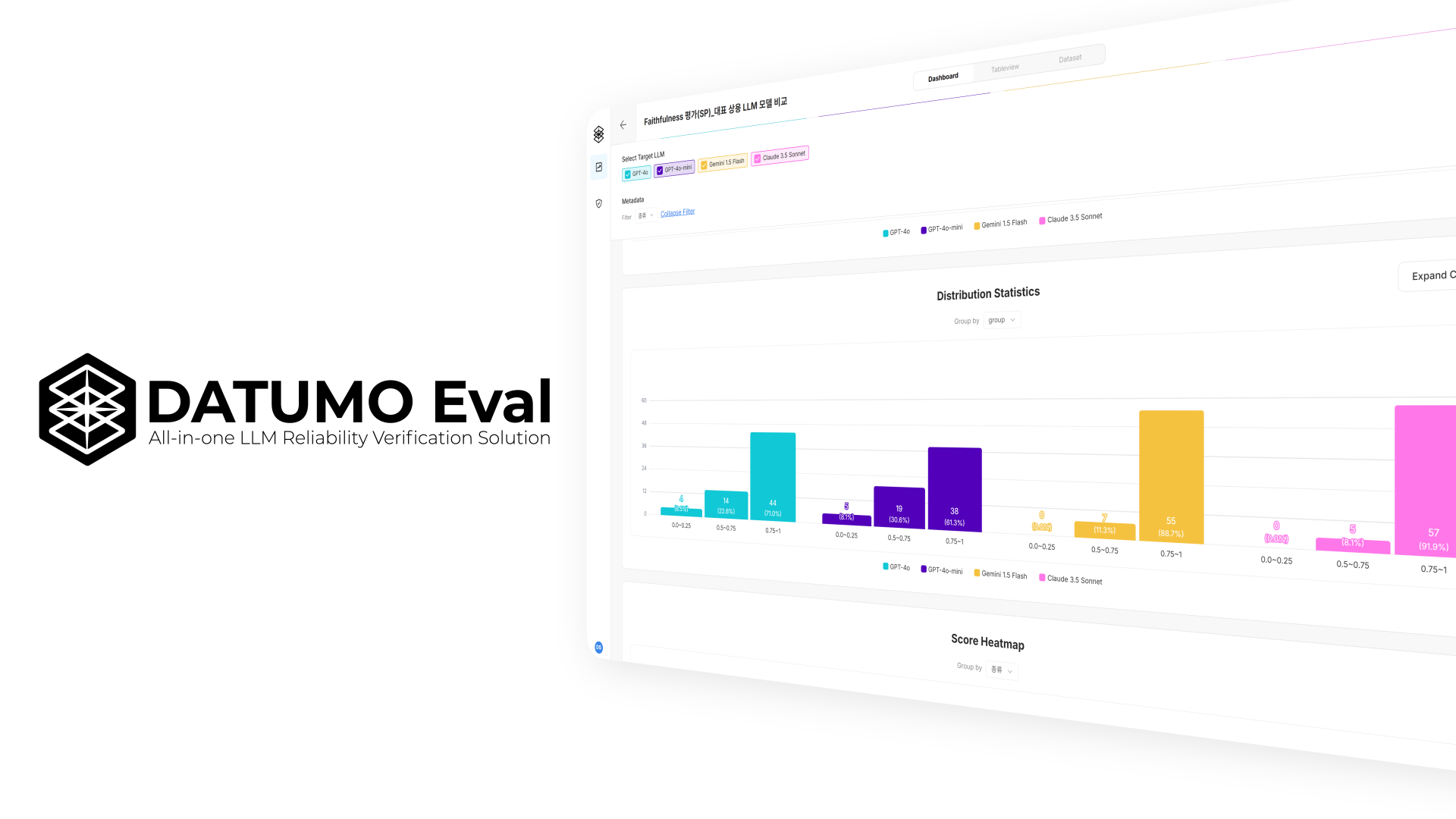

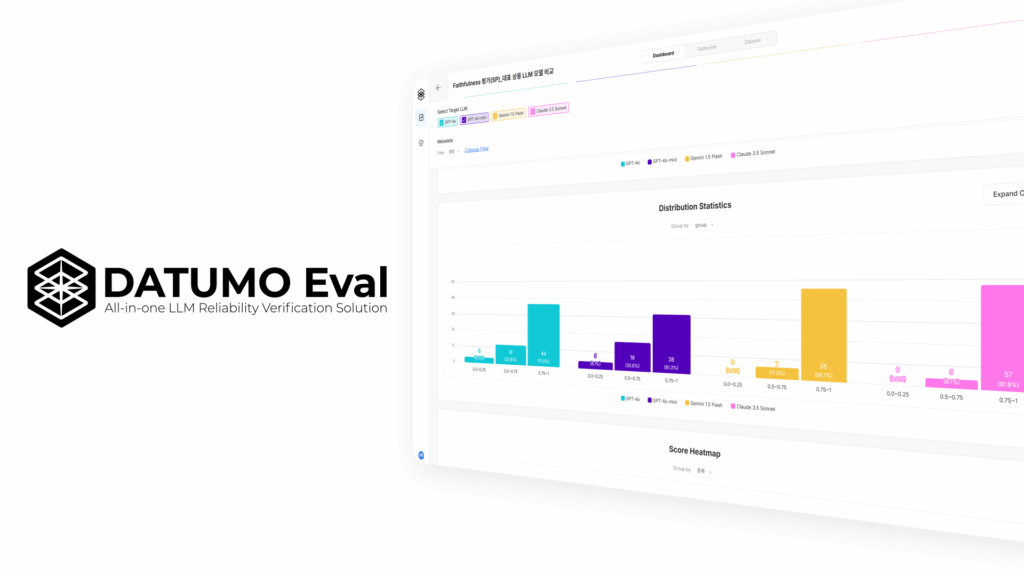

Datumo addresses these concerns head-on with Datumo Eval, our flagship platform that evaluates the performance, safety, and reliability of large language models (LLMs). Unlike traditional manual evaluations, Datumo Eval uses automated, domain-specific test prompts and agentic flow-based assessments to simulate real-world user behavior. The result? Faster, smarter, and more rigorous model validation.

“Our mission isn’t just to make AI powerful — it’s to make it responsible.”

What Sets Us Apart

While many companies are entering the AI reliability space, Datumo brings unique strengths:

- Proprietary agent-based evaluation technology
- Red-teaming features that simulate adversarial prompts
- Customizable frameworks aligned with regulatory standards
- A design optimized for secure, low-integration environments
- A proven track record with both Korean and global enterprise clients

We also automatically generate over 1 million test questions, a scale and speed that manual evaluation frameworks can’t match.

Want to learn more?

You can read the full interview here:

From Data Bottlenecks to AI Trust – How Datumo is Shaping the Future

We’re honored to be recognized for our work and excited for what’s ahead. Thank you to our team, partners, and clients for making this journey possible. Let’s continue building AI we can trust.