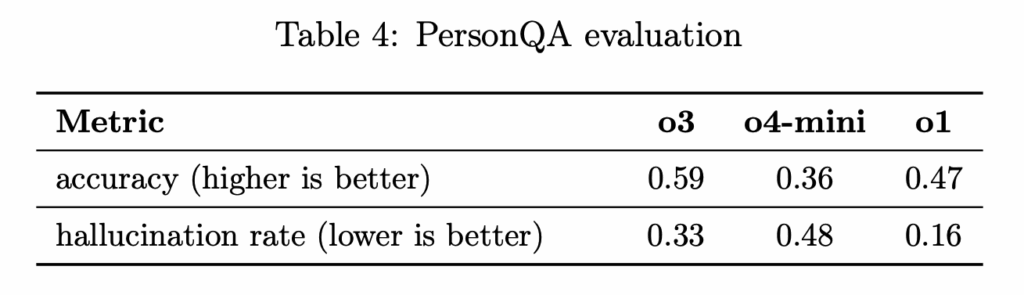

Accuracy and Hallucination rate of o3 & o4-mini

Source: OpenAI

*PersonQA is an internal benchmark developed by OpenAI to evaluate how accurately AI models can answer questions about real-world individuals. It’s primarily used to assess how reliably a model operates when handling factual knowledge.

– Examples: “In what year did Barack Obama win the Nobel Peace Prize?” “Which companies did Elon Musk found?”

Until now, newer models typically showed a decreasing trend in hallucination rates. What’s surprising this time is not just the reversal of that trend, but the fact that even OpenAI admits it doesn’t fully understand why. In the official system card, the company simply speculates as follows:

Specifically, o3 tends to make more claims overall, leading to more accurate claims as well as more inaccurate/hallucinated claims.

More research is needed to understand the cause of this result.

Why release it, then?

Why release o3 and o4-mini when their accuracy didn’t significantly improve—and hallucination rates got worse?

o3: A “more thoughtful” model

o3 is designed as a reasoning-first model. Instead of answering right away, it explores multiple reasoning strategies, identifies its own mistakes, and combines tools to reach a final answer.

OpenAI speculates that o3 tends to make more claims overall, which might lead to an increase in both accurate answers and hallucinations. But since hallucination rate is a relative metric, this explanation is debatable. Still, more correct claims suggest it’s attempting more, indicating deeper effort in reasoning.

o4-mini: A value model, not a flagship

o4-mini was never meant to be the most powerful model. It was built with cost-efficiency and speed in mind, particularly to work well in combination with tools like Python.

Is hallucination unsolvable?

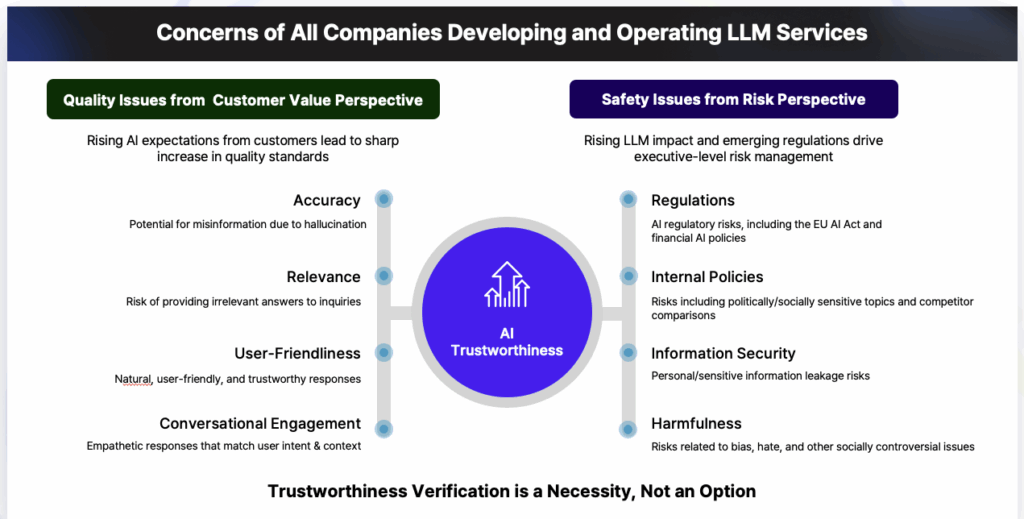

Despite the breakneck speed of AI development, hallucination remains a persistent headache for LLMs. Since an LLM’s accuracy directly impacts service safety and a company’s reputation, reliability testing is no longer optional, but essential.

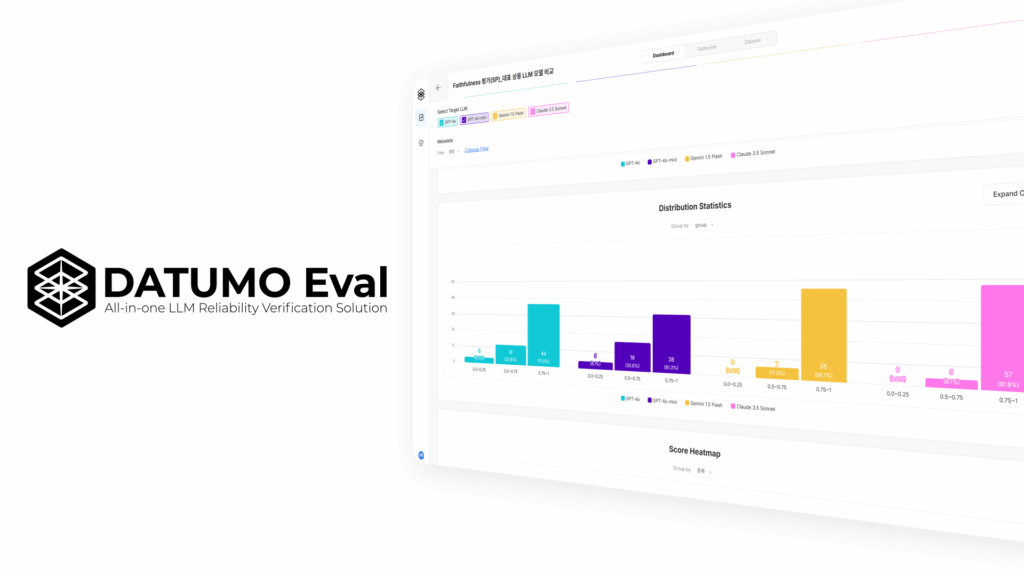

Datumo has developed Datumo Eval, an all-in-one platform to automate the reliability evaluation process for LLMs and LLM-based AI services. |

With Datumo Eval, you can launch AI services with confidence. Beyond hallucinations, the platform allows you to run tailored reliability evaluations, including bias, legality, privacy violations, information completeness, and any custom indicators you define.