Large language models (LLMs), the engines behind today's generative AI, perform impressively across a wide range of domains. However, applying them effectively in real-world scenarios requires a solid understanding of how they are trained and how they can be supplied with data. In this article, we examine LLM training and Retrieval-Augmented Generation (RAG), exploring why RAG is essential and how structuring data plays a critical role in its success.

The Emergence of RAG: A New Approach

LLM training adjusts the model's internal parameters, a vast set of numerical weights that together determine how the model processes text. The process can be compared to strengthening or weakening the synapses between neurons in the human brain. Training typically proceeds in stages such as pre-training and post-training, and improving a model's performance often requires several of these stages, each consuming massive datasets and significant computational resources.

Recently, companies have started exploring new ways to use generative AI more efficiently. In the past, fine-tuning was the go-to approach: preparing domain-specific datasets (e.g., for detection, summarization, or classification) and training the model to suit specific tasks. Increasingly, however, the trend is toward prompting models like GPT to solve problems without any additional training.

This raises questions such as:

- “How can we leverage GPT effectively without retraining it?”

- “How can we integrate domain knowledge to make GPT generate accurate answers?”

RAG (Retrieval-Augmented Generation) has emerged as a leading answer to these questions. It lets organizations use GPT efficiently by supplying the relevant domain knowledge at the moment a question is asked, delivering the desired results without additional training of the model itself.

Fine-Tuning vs. RAG: Contrasting Approaches

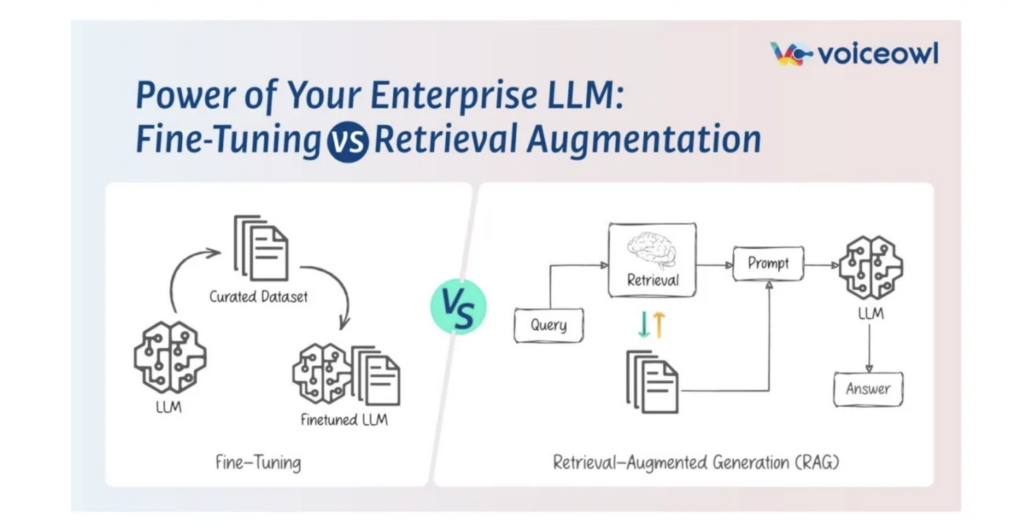

To leverage LLMs for domain-specific tasks, two main approaches are commonly used: fine-tuning and RAG (Retrieval-Augmented Generation). The two take opposite routes, and each has its own strengths and weaknesses, making them suitable for different scenarios and goals.

Fine-Tuning: Reshaping the Model’s Brain

Fine-tuning continues training the model on domain-specific data, updating its parameters to optimize its reasoning for that domain. It's akin to altering the structure of neurons and synapses in a human brain.

How it Works:

The model learns from new data, adapting its internal structure to align with domain-specific requirements. Because this knowledge is stored in the updated parameters, the fine-tuned model retains it and delivers improved performance on similar tasks in the future.

Advantages:

- Creates highly customized models tailored to specific domains.

- Retains learned knowledge for consistent results over time.

Challenges:

- Requires significant time and computational resources.

- Updating the model's knowledge means retraining it, so the process must be repeated whenever the domain data changes.
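To make "modifying the model's parameters" concrete, the following Python sketch shrinks the idea to a toy. The "model" is a single linear function `y = w*x + b` rather than an LLM, and the "pre-trained" weights and domain data are invented for illustration. Fine-tuning here simply means continuing gradient descent from existing weights on new data, so what the model learns ends up stored in the changed parameters themselves.

```python
# Toy fine-tuning: continue gradient descent from "pre-trained" weights
# on a small domain dataset. The linear model and all numbers are
# hypothetical stand-ins for an LLM and its training data.

def predict(w: float, b: float, x: float) -> float:
    return w * x + b


def fine_tune(w: float, b: float, data: list[tuple[float, float]],
              lr: float = 0.05, epochs: int = 500) -> tuple[float, float]:
    """Minimize mean squared error on `data` with plain gradient descent."""
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


pretrained = (1.0, 0.0)  # hypothetical "general-purpose" weights
domain_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # domain follows y = 2x + 1

w, b = fine_tune(*pretrained, domain_data)
print(f"before: w={pretrained[0]:.2f}, b={pretrained[1]:.2f}")
print(f"after:  w={w:.2f}, b={b:.2f}")
```

Real LLM fine-tuning applies the same principle to an enormous number of weights, which is where the time and compute costs come from.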

RAG: Generating Answers with an Open-Book Approach

RAG, on the other hand, doesn’t alter the model’s parameters. Instead, it uses external data sources to provide answers, similar to taking an open-book test.

How it Works:

Without additional training, the system retrieves relevant information from external knowledge sources, such as a corporate database or online resources, and passes it to the model as context for generating a response.

Advantages:

- Fast and efficient, as it bypasses the need for retraining.

- Easily incorporates up-to-date information from internal or external data sources.

Challenges:

- Relies heavily on the quality and availability of external data.

- Requires a robust retrieval mechanism to deliver relevant and accurate context.
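RAG's knowledge lives in a searchable store rather than in the model's weights, which is what makes updates cheap. The Python sketch below is a hypothetical, minimal keyword-overlap index (real systems usually use embedding-based vector search), and the hotel documents are invented examples. Adding a document makes it retrievable immediately with no training step, and a minimum-score threshold illustrates one simple guard against passing irrelevant context to the model.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real text embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KeywordIndex:
    """Hypothetical in-memory document store scored by word overlap."""

    def __init__(self) -> None:
        self.docs: list[str] = []

    def add(self, doc: str) -> None:
        # New knowledge is searchable as soon as it is stored: no retraining.
        self.docs.append(doc)

    def search(self, query: str, min_score: float = 0.2) -> list[str]:
        q = tokenize(query)
        scored = []
        for doc in self.docs:
            d = tokenize(doc)
            # Jaccard similarity: shared words / all distinct words
            score = len(q & d) / len(q | d) if q | d else 0.0
            if score >= min_score:  # drop weak matches instead of passing noise on
                scored.append((score, doc))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in scored]


index = KeywordIndex()
index.add("The spa is open from 9 am to 8 pm.")
before = index.search("When does the rooftop bar open?")
index.add("The rooftop bar is open from 5 pm to midnight.")
after = index.search("When does the rooftop bar open?")
print(before)  # → []
print(after)   # → ['The rooftop bar is open from 5 pm to midnight.']
```

The threshold value is arbitrary here; tuning it is part of building the "robust retrieval mechanism" the challenges above describe.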

Choosing the Right Approach

- Fine-Tuning is ideal for static, specialized tasks that require deep understanding and consistent performance.

- RAG excels in dynamic environments where real-time knowledge retrieval and adaptability are key.

By understanding the differences, organizations can choose the approach that best aligns with their goals and resource availability.

How RAG Works

- Question Input: The user inputs a question.

- Knowledge Retrieval: Relevant information is searched for and retrieved from internal databases (e.g., product data, legal documents, medical charts).

- Answer Generation: The retrieved information is added to the prompt alongside the question, and the model generates a response grounded in it.
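The three steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a production design: term-count cosine similarity stands in for the embedding model and vector database a real system would typically use, the sample documents are invented, and `llm` is a hypothetical placeholder for whatever model API (for example, a GPT endpoint) you actually call. The key point is that retrieved text is inserted into the prompt while the model's weights are never touched.

```python
import math
import re
from collections import Counter
from typing import Callable


def vectorize(text: str) -> Counter:
    """Term-count vector; a simple stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Knowledge retrieval: rank documents by similarity, keep the top k."""
    q = vectorize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Place the retrieved passages in the prompt as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer the question using only the context below.\n"
            f"Context:\n{context}\n"
            f"Question: {question}")


def answer(question: str, documents: list[str], llm: Callable[[str], str]) -> str:
    """Question in, retrieval, then generation by the supplied model."""
    passages = retrieve(question, documents)
    return llm(build_prompt(question, passages))


def echo_llm(prompt: str) -> str:
    # Hypothetical placeholder for a real LLM API call: returns the prompt as-is.
    return prompt


docs = [
    "Checkout time is 11 am; late checkout until 2 pm costs extra.",
    "The fitness center is on the third floor.",
    "Breakfast is served from 6:30 am to 10:30 am.",
]
reply = answer("What time is checkout?", docs, echo_llm)
print(reply)
```

Swapping `echo_llm` for a real model client changes only the generation step; the retrieval and prompt-building steps stay the same.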

RAG enables tailored solutions for specific domains like hotels or hospitals. By combining enterprise data with external AI models, it provides instant, domain-specific insights without requiring additional LLM training.

Case Study: Marriott Hotels’ RENAI Chatbot

Marriott Renaissance Hotels developed the GPT-based chatbot RENAI using RAG, even without a dedicated AI team. By integrating internal databases with GPT APIs, RENAI delivers personalized services such as:

- Local attraction recommendations.

- Discount information.

- Connections to local experts.

This personalized approach enhanced customer experiences, increased service utilization within hotels, and boosted revenue. Marriott’s example highlights how RAG empowers businesses to leverage AI effectively, even with limited resources.

Case Study: Salesforce’s Einstein GPT

Salesforce, renowned for its CRM (Customer Relationship Management) solutions, implemented Einstein GPT to enhance customer management and sales efficiency. Powered by GPT, Einstein GPT automates repetitive tasks such as:

- Providing sales insights.

- Writing emails.

- Creating marketing content.

This automation led to notable achievements, including:

- Over a 10% increase in Q4 2023 revenue compared to the same period the previous year.

- Significant improvements in productivity.

Salesforce’s success demonstrates how RAG-driven AI solutions can transform workflows and deliver measurable business outcomes.

Technology holds limited value on its own; its true significance comes from how it is applied. Implemented thoughtfully, with careful consideration of the available data, the problem to be solved, and resource constraints, RAG can become a powerful tool, especially in environments where resource efficiency and real-time data utilization are critical.

However, RAG is not a universal solution for all challenges. Understanding its complementary relationship with traditional methods like fine-tuning is essential. RAG delivers its true value only when applied in the right situations, alongside other approaches where appropriate.