With the rise of Large Language Models (LLMs), a new capability has emerged in the AI landscape: LLM agents. Distinct from traditional AI models that respond passively to isolated prompts, LLM agents are designed to function as dynamic, autonomous assistants. They show promise in performing multi-step tasks, interacting with other systems, and retrieving external information to address complex requests.

What Are LLM Agents?

LLM agents extend the capabilities of foundational language models, turning them from reactive responders into task-oriented tools. By integrating features like data retrieval, planning, and external tool use, they can manage workflows involving various dependencies and decision points. With access to APIs, databases, and structured knowledge bases, they can fetch precise information on demand.

For example, imagine an LLM agent embedded in a customer service platform. Rather than relying solely on its training data, it can retrieve up-to-date account information, review past interactions, and escalate issues needing human attention. This capability not only enhances accuracy but also improves operational efficiency and the customer service experience.

Core Capabilities of LLM Agents

LLM agents excel due to three core capabilities that distinguish them from traditional AI:

Task-Oriented Retrieval: Using retrieval-augmented generation (RAG) techniques, these models ground responses in data retrieved at query time rather than relying solely on static training data. This approach is particularly valuable when addressing questions beyond the model’s knowledge cutoff, as it allows access to current sources. As a result, the risk of hallucinations (a common issue where AI generates plausible but incorrect information) is reduced, though not eliminated, since answers are only as reliable as the sources retrieved.
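To make the retrieval step concrete, here is a minimal sketch of the idea in Python. It is not a production RAG pipeline: the `DOCUMENTS` list, the word-overlap scoring, and the `retrieve` and `build_prompt` helpers are all assumptions made for illustration, and a real system would more likely use a search index or vector store and then pass the prompt to a model.

```python
import re

# Hypothetical in-memory knowledge base (an assumption for this sketch);
# a real system would query a search index or vector store instead.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Premium accounts include 24/7 phone support.",
    "Password resets require access to the registered email address.",
]

def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list, top_k: int = 2) -> list:
    """Rank documents by shared words with the query and return the best matches."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap, then sort by score (highest first).
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_prompt(query: str, documents: list) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved context."""
    context = retrieve(query, documents)
    context_block = "\n".join(f"- {doc}" for doc in context) or "- (no relevant documents found)"
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )

print(build_prompt("How long do refunds take?", DOCUMENTS))
```

The key design point is that the model is handed the retrieved text alongside the question, so its answer can be checked against a source rather than against what it memorized during training.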

Tool Utilization: Many LLM agents are designed to interact with external tools and APIs. In sales, for instance, an agent might autonomously access a CRM system to generate customer insights or create summaries based on live data. By incorporating these tools, they can deliver accurate and relevant outputs, a critical asset in industries where data evolves rapidly.
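One common way to wire up tool use is to have the model emit a structured request that the application parses and dispatches. The sketch below assumes a JSON request shape with `tool` and `arguments` keys and a stand-in `FAKE_CRM` dictionary; both are hypothetical and not the format of any particular vendor or CRM product.

```python
import json

# Hypothetical CRM data (an assumption); a real agent would call the CRM's API here.
FAKE_CRM = {"acme": {"owner": "Dana", "open_deals": 3}}

def crm_lookup(account: str) -> dict:
    """Return the CRM record for an account, or an error entry if it is unknown."""
    return FAKE_CRM.get(account.lower(), {"error": f"unknown account: {account}"})

# Registry mapping the tool names the model may request to Python callables.
TOOLS = {"crm_lookup": crm_lookup}

def run_tool_call(raw_call: str) -> dict:
    """Parse a JSON tool request and dispatch it, rejecting unregistered tools."""
    call = json.loads(raw_call)
    name = call.get("tool")
    if name not in TOOLS:
        return {"error": f"tool not allowed: {name}"}
    return TOOLS[name](**call.get("arguments", {}))

# Example: the string below stands in for what a model might emit.
print(run_tool_call('{"tool": "crm_lookup", "arguments": {"account": "Acme"}}'))
```

Keeping an explicit registry means the agent can only invoke functions the application has deliberately exposed, which also helps with the security concerns that come with tool access.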

Contextual Understanding and Workflow Execution: Advanced models maintain context over multiple interactions. In an e-commerce setting, for example, an agent could guide a customer from browsing to purchase, continuously updating recommendations based on each interaction. This contextual memory makes these tools ideal for roles requiring sustained engagement, such as project management or personalized health coaching.
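In practice, "maintaining context" often means the application stores recent turns and sends them back to the model with each new request. The following is a rough sketch of that pattern; the `ConversationMemory` class and its fixed-size window are assumptions for illustration, and real agents may summarize or selectively retrieve older history instead.

```python
from collections import deque

class ConversationMemory:
    """Keep the most recent turns so each new request can include prior context."""

    def __init__(self, max_turns: int = 4):
        # A bounded deque silently discards the oldest turn once it is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        """Record one turn of the conversation."""
        self.turns.append((role, text))

    def as_context(self) -> str:
        """Render the remembered turns as text to prepend to the next prompt."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)

memory = ConversationMemory(max_turns=2)
memory.add("user", "I'm looking for trail running shoes.")
memory.add("agent", "Do you prefer more cushioning or a lighter shoe?")
memory.add("user", "Lighter, please.")
print(memory.as_context())  # only the two most recent turns remain
```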

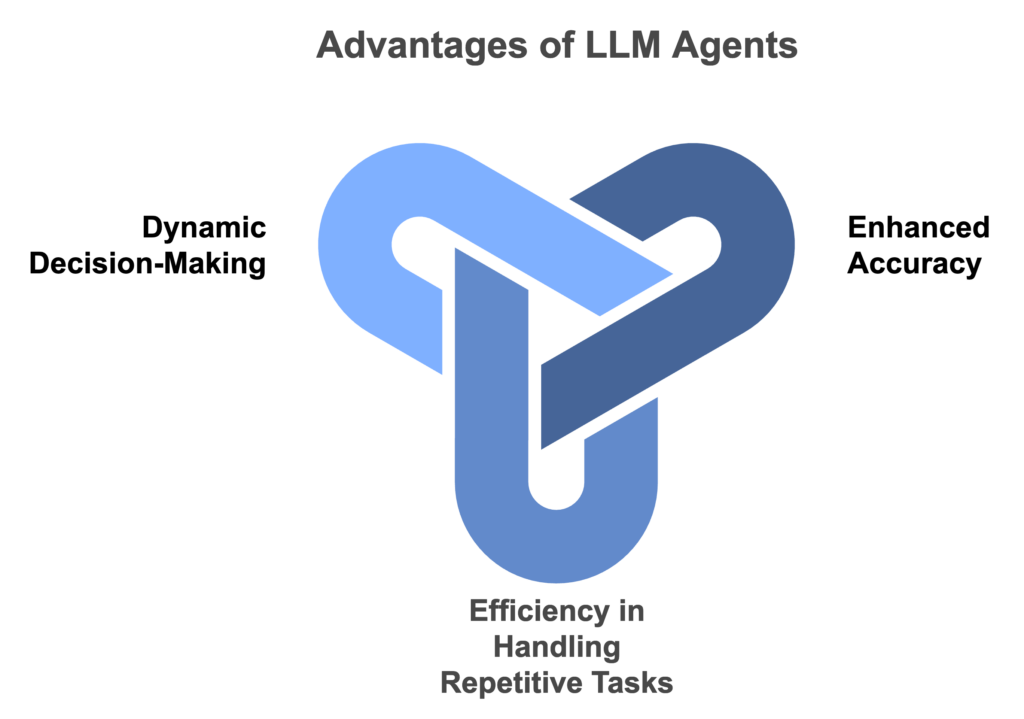

Key Advantages of LLM Agents

LLM agents are gaining traction across industries thanks to several distinct advantages:

Enhanced Accuracy and Reduced Hallucinations: By connecting to external data sources and adhering to RAG principles, LLM agents reduce hallucinations. This is particularly critical in high-stakes fields like finance, healthcare, and legal services, where accuracy is paramount.

Efficiency in Handling Repetitive Tasks: LLM agents streamline routine tasks, reducing the need for human intervention. This efficiency is valuable in settings like customer service, where LLM agents can manage basic inquiries autonomously, freeing human representatives to address more complex issues.

Dynamic Decision-Making: Unlike static AI models, LLM agents can interact with live data and adjust their actions dynamically. In logistics, for example, an agent might reroute deliveries based on real-time traffic conditions, optimizing supply chain efficiency.

Implementing LLM Agents

While LLM agents offer exciting possibilities, their implementation requires careful planning to ensure reliability and safety:

Data Integrity and Security: Since LLM agents often access sensitive data, stringent data access and usage policies are essential. Securing APIs, enforcing encryption, and establishing robust access controls help ensure that LLM agents operate within safe and secure boundaries.
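As one small illustration of an access control, an application can filter each record down to the fields a given agent role is permitted to read before the data ever reaches the model. The role names, field names, and `filter_record` helper below are hypothetical; this sketch covers only field-level filtering and does not address encryption or API security.

```python
# Hypothetical policy (an assumption): which record fields each agent role may read.
ALLOWED_FIELDS = {
    "support_agent": {"name", "plan", "open_tickets"},
    "billing_agent": {"name", "plan", "card_last4"},
}

def filter_record(role: str, record: dict) -> dict:
    """Return only the fields the role may see; unknown roles get nothing."""
    allowed = ALLOWED_FIELDS.get(role, set())
    return {key: value for key, value in record.items() if key in allowed}

customer = {"name": "Ana", "plan": "pro", "card_last4": "4242", "tax_id": "redacted-example"}
print(filter_record("support_agent", customer))
```

Defaulting to an empty set for unknown roles means a misconfigured agent sees no data rather than all of it.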

Continuous Monitoring and Feedback Loops: Maintaining accuracy over time calls for constant monitoring and feedback. Human oversight—where actions taken by LLM agents are reviewed, approved, or corrected—helps prevent drift and preserves accuracy standards.
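Human oversight can be sketched as a gate that lets low-impact actions through and holds the rest for a reviewer. The example below is an assumption-laden illustration: the `ProposedAction` and `ReviewGate` classes, the amount-based threshold, and the audit log format are all invented here, and a real deployment would choose its own criteria for what needs review.

```python
from dataclasses import dataclass, field

@dataclass
class ProposedAction:
    """An action the agent wants to take, e.g. issuing a refund (hypothetical)."""
    name: str
    amount: float

@dataclass
class ReviewGate:
    """Execute small actions directly; hold larger ones for a human decision."""
    auto_approve_limit: float = 50.0
    pending: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def submit(self, action: ProposedAction) -> str:
        """Route an action: run it if it is under the limit, otherwise queue it."""
        if action.amount <= self.auto_approve_limit:
            self.audit_log.append((action.name, "auto-approved"))
            return "executed"
        self.pending.append(action)
        self.audit_log.append((action.name, "queued for review"))
        return "pending_review"

    def review_next(self, approve: bool) -> str:
        """Record a human reviewer's decision on the oldest pending action."""
        action = self.pending.pop(0)
        outcome = "approved by human" if approve else "rejected by human"
        self.audit_log.append((action.name, outcome))
        return "executed" if approve else "rejected"

gate = ReviewGate()
print(gate.submit(ProposedAction("refund_small", 20.0)))
print(gate.submit(ProposedAction("refund_large", 500.0)))
print(gate.review_next(approve=False))
```

The audit log doubles as the feedback loop: reviewing which actions humans reject is one way to spot drift over time.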

Bias Mitigation: Like all AI models, LLM agents are susceptible to biases in data and interpretation. Establishing bias-detection protocols and regularly auditing outputs can mitigate unintended consequences, which is especially vital in customer-facing applications.
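A simple starting point for auditing outputs is to compare how often the agent reaches a favorable outcome for different groups. The sketch below assumes the audit data is a list of `(group, was_approved)` pairs and uses the gap between approval rates as a rough signal; both the data shape and the metric are illustrative assumptions, and a real audit would need more careful statistical and domain review.

```python
from collections import defaultdict

def approval_rates(decisions: list) -> dict:
    """Compute the share of approved outcomes per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}

def max_rate_gap(decisions: list) -> float:
    """Return the largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())

# Hypothetical audit sample: group labels and outcomes are made up for illustration.
sample = [("A", True), ("A", True), ("B", True), ("B", False)]
print(approval_rates(sample))
print(max_rate_gap(sample))
```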

The Future

As LLM agents continue to integrate into various sectors, their influence is likely to grow, particularly as reliability and customization improve. Advances in multimodal capabilities could enable future agents to work seamlessly across text, images, and even voice, creating an enriched and interactive user experience.

Fields like healthcare, finance, and education, where accurate information retrieval and context retention are critical, stand to benefit tremendously. By combining intelligent data access with real-time decision-making, LLM agents are poised to bring us closer to truly autonomous digital assistants.