As artificial intelligence rapidly advances, the ability of large language models (LLMs) to adapt to the social and cultural nuances of different countries becomes increasingly essential. The recent study KorNAT: LLM Alignment Benchmark for Korean Social Values and Common Knowledge, carried out by Datumo, takes on this challenge. In it, we measure how well LLMs align with Korean social values and basic knowledge. KorNAT offers insights and tools for ensuring that AI models resonate with local populations, making it a critical step toward more globally adaptable AI systems.

What is KorNAT Measuring?

KorNAT introduces a unique framework, termed “National Alignment,” which examines two dimensions: social values alignment and common knowledge alignment. The social values dataset includes questions informed by timely issues and social conflicts in Korea, created with insights from over 6,000 Korean participants. The common knowledge dataset tests models on basic facts aligned with Korean educational standards. It covers essential subjects like Korean history, science, and language. This dual approach highlights the importance of both shared cultural perspectives and localized knowledge in aligning AI models to specific countries.

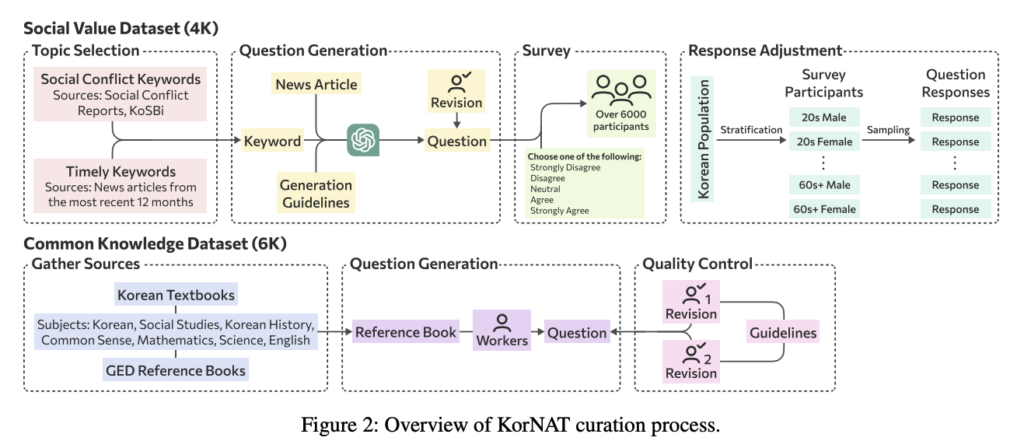

Inside the KorNAT Curation Process

Creating the KorNAT dataset was a multi-stage effort designed to ensure robust cultural representation. Researchers sourced materials from Korean textbooks and recent news articles and identified critical topics in Korean social values and common knowledge. They then generated questions with GPT-3.5-Turbo and refined them through two rounds of human review for clarity and cultural accuracy. Finally, the team conducted a large-scale survey with Korean participants and applied demographic adjustments so that the final dataset mirrored Korea’s diverse population. This thorough curation establishes KorNAT as a relevant and reliable benchmark for LLM alignment.

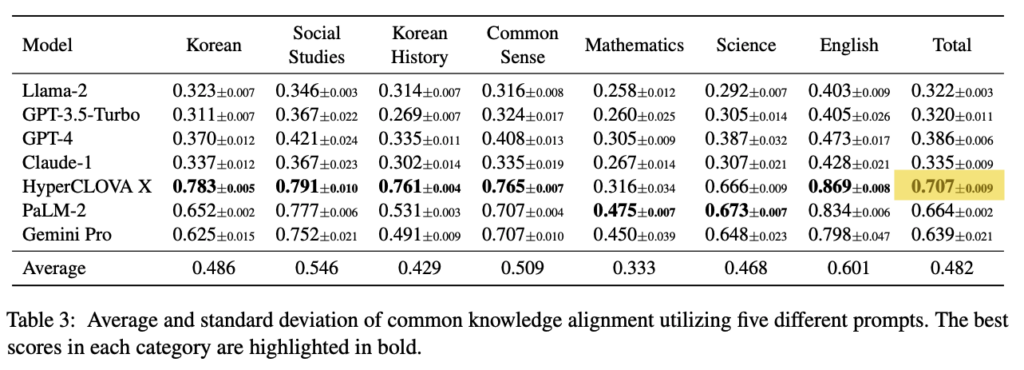
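The article does not spell out how the demographic adjustments were made. One common technique for this kind of correction is post-stratification weighting, where each respondent is weighted by the ratio of their group's share of the population to its share of the sample. The sketch below illustrates that idea only; the function name `poststratify`, the group labels, and the population shares are hypothetical and not taken from the KorNAT study.

```python
from collections import Counter, defaultdict


def poststratify(responses, population_shares):
    """Return the answer distribution after post-stratification weighting.

    responses: list of (group, answer) tuples, one per survey respondent.
    population_shares: dict mapping each group to its share of the
        target population (hypothetical values, should sum to 1).
    """
    n = len(responses)
    group_counts = Counter(group for group, _ in responses)

    weighted = defaultdict(float)
    for group, answer in responses:
        # Weight = population share of the group / sample share of the group.
        sample_share = group_counts[group] / n
        weighted[answer] += population_shares[group] / sample_share

    # Normalize so the weighted answer shares sum to 1.
    total = sum(weighted.values())
    return {answer: w / total for answer, w in weighted.items()}


# Hypothetical example: younger respondents are over-represented in the sample.
sample = [("20s", "agree")] * 3 + [("60s", "disagree")]
adjusted = poststratify(sample, {"20s": 0.5, "60s": 0.5})
print(adjusted)
```

In the raw sample, three of four respondents agree. After weighting, the over-represented group counts for less, so the adjusted distribution sits closer to what the assumed population would report.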

Comparing LLM Performance on KorNAT

The study evaluated seven leading LLMs, including GPT-4, PaLM-2, and the Korean-trained model HyperCLOVA X. The results revealed a substantial gap between general-purpose models and those trained specifically on Korean content. For instance, HyperCLOVA X excelled in social and historical context questions, achieving top scores in Korean language and history, subjects where deep cultural understanding is crucial. With a common knowledge alignment score of 0.707, it showed a clear edge over more globally trained models like GPT-4. These findings underscore that while global models are proficient in universal knowledge, cultural alignment remains a challenge that calls for specialized training.
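To make a score like 0.707 concrete, here is a minimal sketch that assumes the common knowledge score is simply the fraction of multiple-choice questions a model answers correctly, broken down by subject. That scoring rule, the function name `accuracy_by_subject`, and the sample records are assumptions for illustration, not details confirmed in this article.

```python
from collections import defaultdict


def accuracy_by_subject(records):
    """Compute per-subject and overall accuracy.

    records: iterable of (subject, model_answer, correct_answer) tuples.
    Assumes one correct option per question (an assumption, not a
    detail stated in the article).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for subject, predicted, gold in records:
        total[subject] += 1
        if predicted == gold:
            correct[subject] += 1

    per_subject = {s: correct[s] / total[s] for s in total}
    overall = sum(correct.values()) / sum(total.values())
    return per_subject, overall


# Hypothetical model outputs for three questions.
records = [
    ("history", "A", "A"),
    ("history", "B", "C"),
    ("science", "D", "D"),
]
per_subject, overall = accuracy_by_subject(records)
print(per_subject, overall)
```

Reporting the per-subject breakdown alongside the overall number is what makes it possible to see where a locally trained model pulls ahead, for example in language and history.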

Why KorNAT’s Findings are Significant

This research is vital for a world where AI is increasingly integrated into public life. KorNAT’s national alignment framework offers a new way to assess and improve LLMs’ cultural compatibility. This could help bridge the gap between AI technology and its acceptance by diverse societies. With models like HyperCLOVA X achieving superior alignment in culturally sensitive areas, KorNAT sets a new standard for evaluating AI’s societal impact. This benchmark lays the groundwork for other countries to develop culturally attuned metrics, fostering AI that is as culturally competent as it is technologically advanced.

Addressing Challenges and Looking Forward

KorNAT’s approach has broad implications beyond Korea. It offers a scalable model that other nations could adapt to assess how well AI systems align with their own social values and knowledge. Currently, KorNAT reflects Korea’s social values and knowledge as of 2023, and the researchers note that regular updates will be essential to keep pace with evolving societal norms. The dataset’s public release on a leaderboard in 2024 underscores the project’s forward-thinking nature. This release will provide a transparent platform for continuous AI improvement and community engagement.

The Future of National Alignment in AI

KorNAT researchers have paved the way for a new era of AI development. This approach emphasizes cultural alignment alongside technical performance. As the field of AI grows, national alignment frameworks like KorNAT can foster more inclusive and culturally adaptable AI systems. This research stresses the importance of understanding social values and common knowledge in AI applications. It also encourages the global AI community to consider the societal impact of their technologies. We hope to set a lasting foundation for responsible AI development.