On May 21, 2024, the European Union (EU) gave final approval to the world’s first “Artificial Intelligence Act (AI Act).” Set to be fully enforced by 2026, this legislation applies to any AI model used within Europe, regardless of where it was developed. As the first law of its kind, it is expected to have a significant influence on future regulations around the world. Let’s take a closer look at this groundbreaking law.

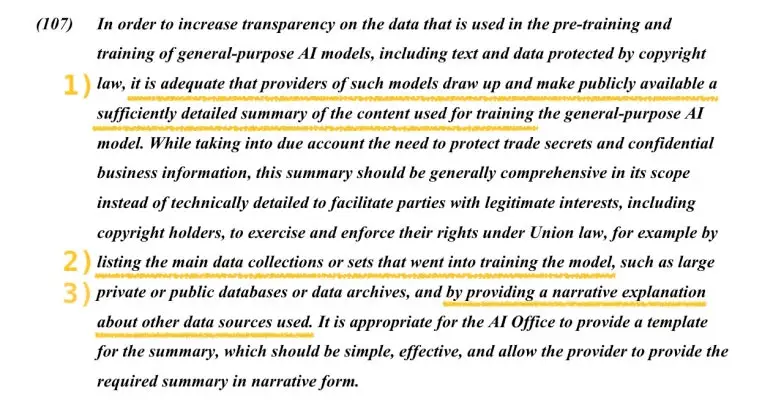

4 Risk Levels of AI

The AI Act classifies the risks associated with AI into four categories: Unacceptable Risk, High Risk, Limited Risk, and Minimal Risk. Each level is determined based on the sensitivity of the data involved and the intended purpose of the AI model.

Source: Shaping Europe's digital future, AI Act

High-risk AI is permitted but regulated, and is defined as AI used in the following areas:

Critical Infrastructure – such as transportation, where failures could pose risks to life and health.

Education or Vocational Training – systems that could influence decisions regarding access to education or career opportunities.

Safety components of products – such as AI applications in robot-assisted surgeries.

Employment, worker management, and access to self-employment – including software that filters résumés and influences hiring processes.

Essential private and public services – such as credit scoring systems that could deny individuals the opportunity to obtain loans.

Law enforcement – including evidence reliability assessments that could infringe on fundamental rights.

Migration, asylum, and border management – such as the automated review of visa applications.

Administration of justice and democratic processes – including AI solutions for searching court rulings.

So, what exactly is Limited Risk?

Limited Risk refers to the dangers that arise from a lack of transparency in AI usage. The AI Act requires clear disclosure when a person is interacting with an AI system or when content has been generated by AI. This rule also applies to deepfake audio and video, which must be labeled as artificially generated.

General Purpose AI

Examples of general-purpose AI, which can perform a wide range of tasks rather than being tuned for specific functions, include Apple’s Siri and Amazon’s Alexa. These general-purpose AIs are also now required to be more “transparent.” Let’s take a look at Recital 107, which describes what providers of general-purpose AI models are expected to disclose.

AI Act

1) It is adequate that providers of such models draw up and make publicly available a sufficiently detailed summary of the content used for training the general-purpose AI model.

2) … listing the main data collections or sets that went into training the model…

3) …by providing a narrative explanation about other data sources used.

This legislation places great emphasis on the disclosure of data sources. Because other jurisdictions are likely to follow its lead, requirements to provide detailed information about the data used to train AI models may well become stricter worldwide.

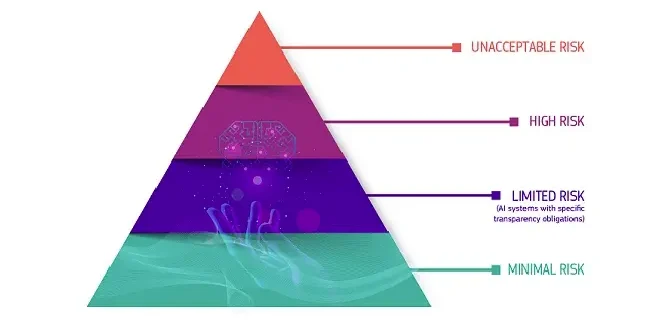

Human-centric AI

What exactly is the purpose of the AI Act?


The AI Act describes its purpose as “to promote the uptake of human-centric and trustworthy AI.” A key term here is “Human-centric AI.”

The terms *transparent* and *non-discriminatory* appear frequently in this legislation, because AI must be both transparent and free from discrimination to be human-centric and trustworthy.

We need data-centric AI for the accuracy of a model, while for safety, human-centric AI is necessary.

Under the motto “The Data for Smarter AI,” Datumo has consistently provided only high-quality data. Additionally, with a strong commitment to safe AI, we have planned and operated the Red Team Challenge. No matter what regulations arise, we are here to help you build AI models with confidence, using licensed data that is fully transparent about its sources.